Introduction

Website scrapers have become essential tools in the digital landscape, allowing businesses to efficiently gather extensive data from the web. These automated systems streamline information retrieval and empower companies to make data-driven decisions that enhance their competitive advantage. However, as the prevalence of web scraping increases, organisations face significant legal and ethical challenges. How can they navigate these complexities while fully leveraging the potential of web data extraction?

Define Website Scraper: Understanding the Core Concept

A website scraper, also known as a web extractor, is a software tool designed to retrieve information from websites. This automated process involves navigating web pages, extracting content, and converting it into structured formats such as databases or spreadsheets. Web extraction tools can be tailored to target specific data points, including product prices, reviews, or contact details, making them essential for companies aiming to gather comprehensive information swiftly and accurately.
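
The conversion from page content to a structured format can be sketched as follows. This is a minimal illustration using only the Python standard library, not the approach of any particular product; the markup in `SAMPLE_HTML`, the class names `product`, `name`, and `price`, and the helper `html_to_csv` are all assumptions made for the example.

```python
import csv
import io
from html.parser import HTMLParser

# Hypothetical markup: each product is a <div class="product"> holding
# <span class="name"> and <span class="price"> elements.
SAMPLE_HTML = """
<div class="product"><span class="name">Kettle</span><span class="price">24.99</span></div>
<div class="product"><span class="name">Toaster</span><span class="price">39.50</span></div>
"""


class ProductParser(HTMLParser):
    """Collects name/price pairs from the assumed markup above."""

    def __init__(self):
        super().__init__()
        self.rows = []      # finished records, one dict per product
        self._field = None  # field currently being read, if any

    def handle_starttag(self, tag, attrs):
        cls = dict(attrs).get("class")
        if tag == "div" and cls == "product":
            # A new product block starts a new record.
            self.rows.append({"name": "", "price": ""})
        elif tag == "span" and cls in ("name", "price") and self.rows:
            self._field = cls

    def handle_data(self, data):
        # Only keep text that sits inside a field we are tracking.
        if self._field:
            self.rows[-1][self._field] += data.strip()

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None


def html_to_csv(html):
    """Parse the HTML and return the extracted records as CSV text."""
    parser = ProductParser()
    parser.feed(html)
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["name", "price"], lineterminator="\n")
    writer.writeheader()
    writer.writerows(parser.rows)
    return buffer.getvalue()


print(html_to_csv(SAMPLE_HTML))
```

The resulting CSV text can be opened directly in a spreadsheet or loaded into a database table.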

The technology underpinning web data harvesting utilises advanced techniques, such as HTML parsing, DOM manipulation, and HTTP requests, to access and extract the desired information from web pages. By 2026, it is projected that over 60% of businesses will be employing web tools for data extraction, indicating a significant shift towards data-driven decision-making across various sectors.

Recent advancements in web extraction tools have further improved their capabilities. For example, AI-driven features like adaptive retries and auto-labelling are becoming standard, allowing data collectors to navigate complex web structures and circumvent anti-bot defences more effectively. Real-world applications of web data extraction technology are particularly evident in industries such as e-commerce, where retailers use scrapers to monitor competitor pricing strategies, leading to enhanced market responsiveness and increased sales. A notable case is UK retailer John Lewis, which implemented competitor price analysis to evaluate market pricing strategies and reportedly saw a 4% increase in sales. Appstractor's advanced information extraction solutions exemplify this trend, offering features such as price monitoring and competitive assortment tracking that enable businesses to maintain a competitive edge while ensuring GDPR compliance.
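
To make the idea of retries concrete, here is a minimal sketch of a fixed exponential backoff policy. It is a simple rule-based stand-in, not the AI-driven adaptive behaviour described above; the function name `retry_with_backoff` and its parameters are hypothetical.

```python
import time


def retry_with_backoff(operation, attempts=4, base_delay=1.0, sleep=time.sleep):
    """Call `operation` until it succeeds, doubling the wait after each failure.

    Only OSError (which includes urllib's URLError) is treated as retryable
    in this sketch; the last failure is re-raised to the caller.
    """
    for attempt in range(attempts):
        try:
            return operation()
        except OSError:
            if attempt == attempts - 1:
                raise
            # Waits of base_delay, 2 * base_delay, 4 * base_delay, ...
            sleep(base_delay * 2 ** attempt)
```

The `sleep` parameter is injectable so the policy can be exercised without real delays, for example by passing a list's `append` method to record the waits.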

As the web data extraction landscape continues to evolve, industry leaders stress the importance of compliance and ethical practices. The focus is shifting from mere information extraction to fostering collaborative relationships between information owners and gatherers, ensuring that scraping practices comply with legal standards and respect the rights of content owners. This evolution underscores the critical role of web tools in modern business strategies, empowering organisations to leverage data for a competitive advantage.

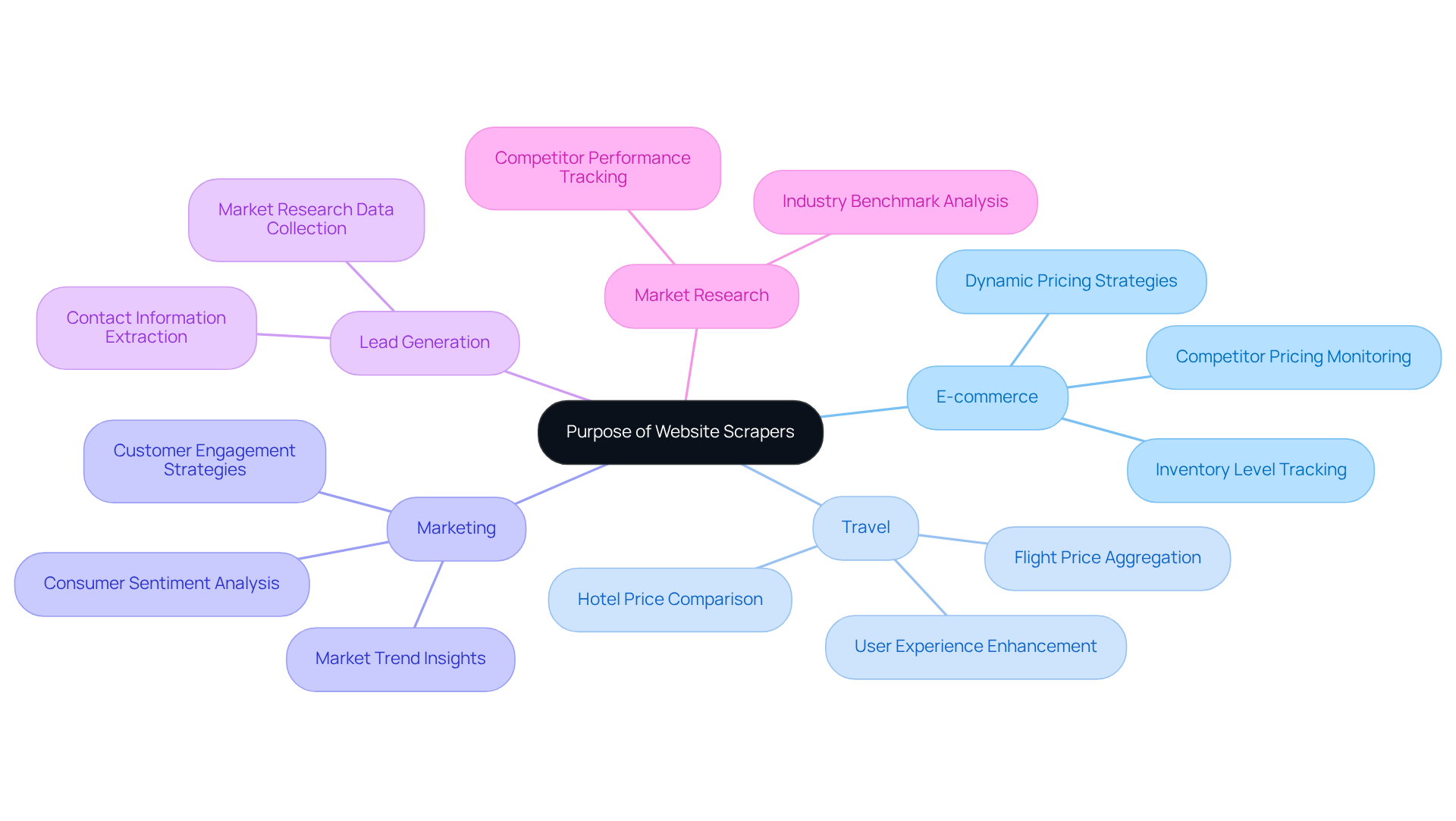

Explore the Purpose of Website Scrapers: Applications Across Industries

Website data extractors play a crucial role across various industries, significantly shaping business strategies. In e-commerce, companies utilise these tools to monitor competitor pricing and inventory levels, enabling them to adjust their pricing strategies in real time. This adaptability is vital, as nearly 30% of consumers are inclined to switch retailers for better prices, underscoring the importance of real-time data in maintaining competitiveness.
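
As an illustration of how scraped competitor prices might feed a pricing decision, the sketch below applies one deliberately simple rule: undercut the cheapest competitor by a small amount, but never go below a floor price. The function `suggest_price`, its parameters, and the rule itself are assumptions for the example, not a description of how any retailer actually sets prices.

```python
from decimal import Decimal


def suggest_price(own_price, competitor_prices, floor, undercut=Decimal("0.01")):
    """Return a price just below the cheapest competitor, never below `floor`."""
    if not competitor_prices:
        # Nothing to compare against: keep the current price.
        return own_price
    cheapest = min(competitor_prices)
    if own_price <= cheapest:
        # Already the cheapest (or tied), so no change is suggested.
        return own_price
    return max(cheapest - undercut, floor)


scraped = [Decimal("18.50"), Decimal("19.99")]  # hypothetical scraped prices
print(suggest_price(Decimal("20.00"), scraped, floor=Decimal("15.00")))
```

`Decimal` is used rather than `float` so that currency arithmetic stays exact.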

In the travel sector, these tools aggregate flight and hotel prices, allowing consumers to easily compare options and secure the best deals. This functionality not only enhances the user experience but also fosters competition among providers, resulting in improved pricing and service offerings.

Marketers are increasingly leveraging web tools to analyse consumer sentiment from social media platforms, yielding valuable insights into market trends and customer preferences. Such information is essential for shaping effective marketing strategies and enhancing customer engagement.

Additional applications include lead generation, where businesses extract contact information from online directories, and comprehensive market research, where scrapers collect data on industry benchmarks and competitor performance. As the web scraping landscape evolves, understanding what a website scraper is and what it can do becomes essential for strategic decision-making and operational efficiency, and the technology is an indispensable asset for businesses striving to excel in a competitive environment. By employing reliable proxy services, digital marketers can help ensure the delivery of concise and accurate information, ultimately leading to more effective strategies.

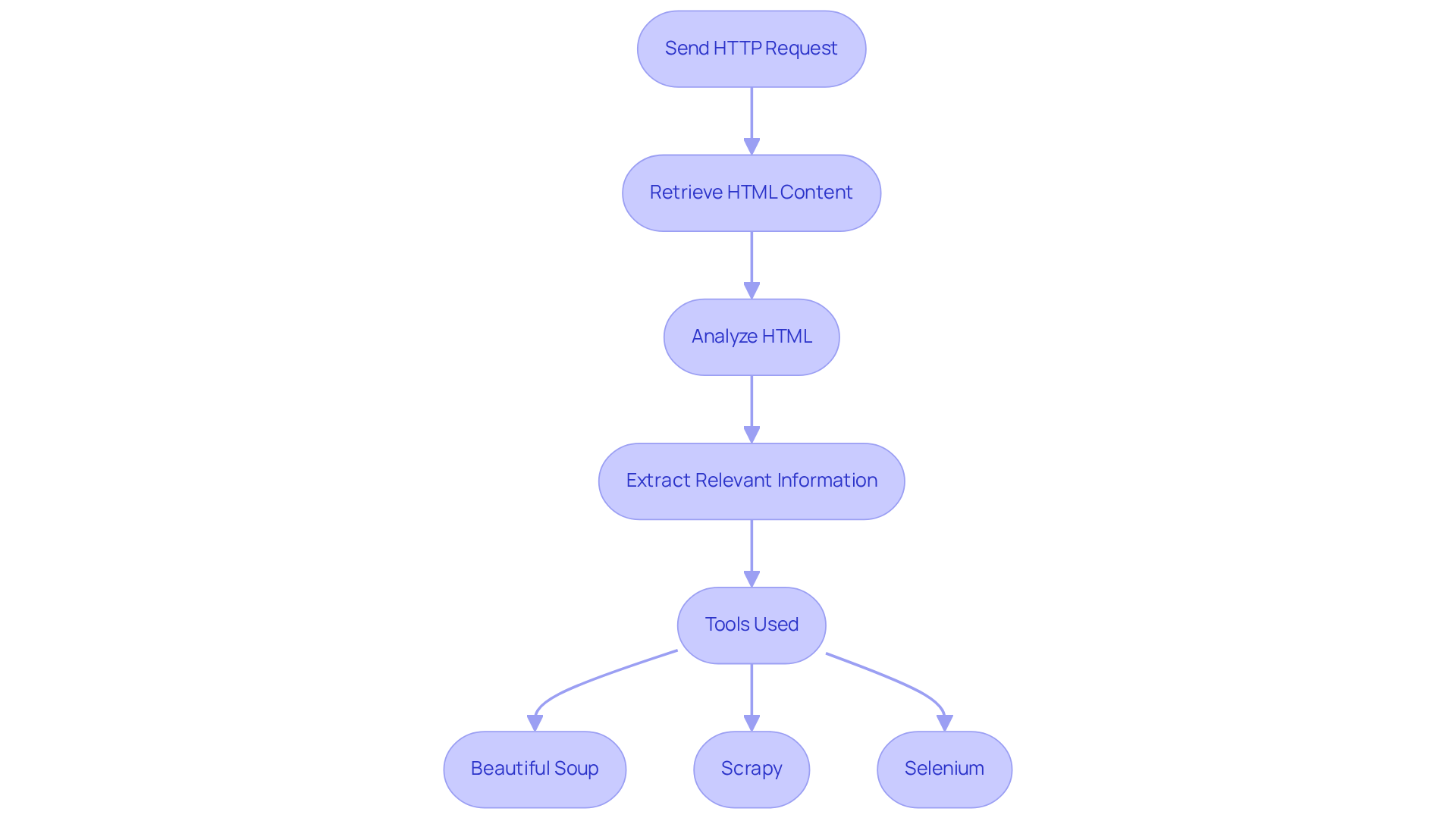

Examine How Website Scrapers Function: Techniques and Technologies

Website scrapers operate through a systematic procedure that encompasses navigation and information retrieval. The process begins with sending an HTTP request to the target website, which returns the HTML content of the page. Once the content is obtained, the scraper parses the HTML to identify and extract the relevant information, using methods such as XPath and CSS selectors.
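
The request-then-extract flow can be sketched with the Python standard library. This is a minimal example under stated assumptions: the user agent string, the helper names `fetch_html` and `extract_titles`, and the `title` class in the sample markup are hypothetical. `xml.etree.ElementTree` supports only a subset of XPath and requires well-formed markup, so real-world HTML usually needs a more tolerant parser such as Beautiful Soup.

```python
import urllib.request
import xml.etree.ElementTree as ET


def fetch_html(url, timeout=10.0):
    """Send an HTTP GET request and return the response body as text."""
    request = urllib.request.Request(url, headers={"User-Agent": "example-scraper/0.1"})
    with urllib.request.urlopen(request, timeout=timeout) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


def extract_titles(markup):
    """Select the text of every <h2 class="title"> with an XPath-style query."""
    root = ET.fromstring(markup)
    nodes = root.findall(".//h2[@class='title']")
    return [node.text.strip() for node in nodes if node.text]


# Well-formed sample markup standing in for a downloaded page.
SAMPLE = """<html><body>
<div><h2 class="title">First listing</h2><p>ignored</p></div>
<div><h2 class="title">Second listing</h2></div>
<h2 class="other">Not selected</h2>
</body></html>"""

print(extract_titles(SAMPLE))
```

In a live run, the string passed to `extract_titles` would come from `fetch_html` rather than from the inline sample.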

Sophisticated scrapers, like those offered by Appstractor, leverage 14 years of enterprise-level extraction expertise alongside machine learning algorithms to enhance their precision and effectiveness in information identification. These tools adapt to changes in website structures, ensuring reliable data extraction. Furthermore, Appstractor's solutions are designed to manage dynamic content generated by JavaScript, facilitating effective information extraction from modern web applications.
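
One simple way to tolerate layout changes is to try several selectors in order of preference. The sketch below is a rule-based illustration only and is not the machine learning approach attributed to Appstractor above; the selector list and the helper `first_match` are hypothetical.

```python
import xml.etree.ElementTree as ET

# Hypothetical selectors, ordered from most to least preferred.
PRICE_SELECTORS = [
    ".//span[@class='price']",
    ".//span[@class='product-price']",
    ".//div[@class='price']/span",
]


def first_match(root, selectors):
    """Return (text, selector) for the first selector that yields non-empty text."""
    for selector in selectors:
        node = root.find(selector)
        if node is not None and node.text and node.text.strip():
            return node.text.strip(), selector
    # No selector matched: the caller can log this as a possible layout change.
    return None, None


old_layout = ET.fromstring("<div><span class='price'>9.99</span></div>")
new_layout = ET.fromstring("<div><span class='product-price'>9.99</span></div>")

print(first_match(old_layout, PRICE_SELECTORS))
print(first_match(new_layout, PRICE_SELECTORS))
```

Returning the selector that matched makes it possible to notice when a page has silently moved to a fallback layout.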

Notably, Appstractor provides features such as real estate listing change alerts and compensation benchmarking, all while ensuring full GDPR compliance. Their global self-healing IP pool guarantees continuous uptime, and support is measured by time-to-resolution, ensuring that engineers remain engaged until issues are resolved.

Well-known tools and libraries play a crucial role in streamlining these processes, including:

- Beautiful Soup

- Scrapy

- Selenium

They empower developers to create robust extraction solutions that meet the evolving demands of information gathering.

Discuss Legal and Ethical Considerations in Web Scraping

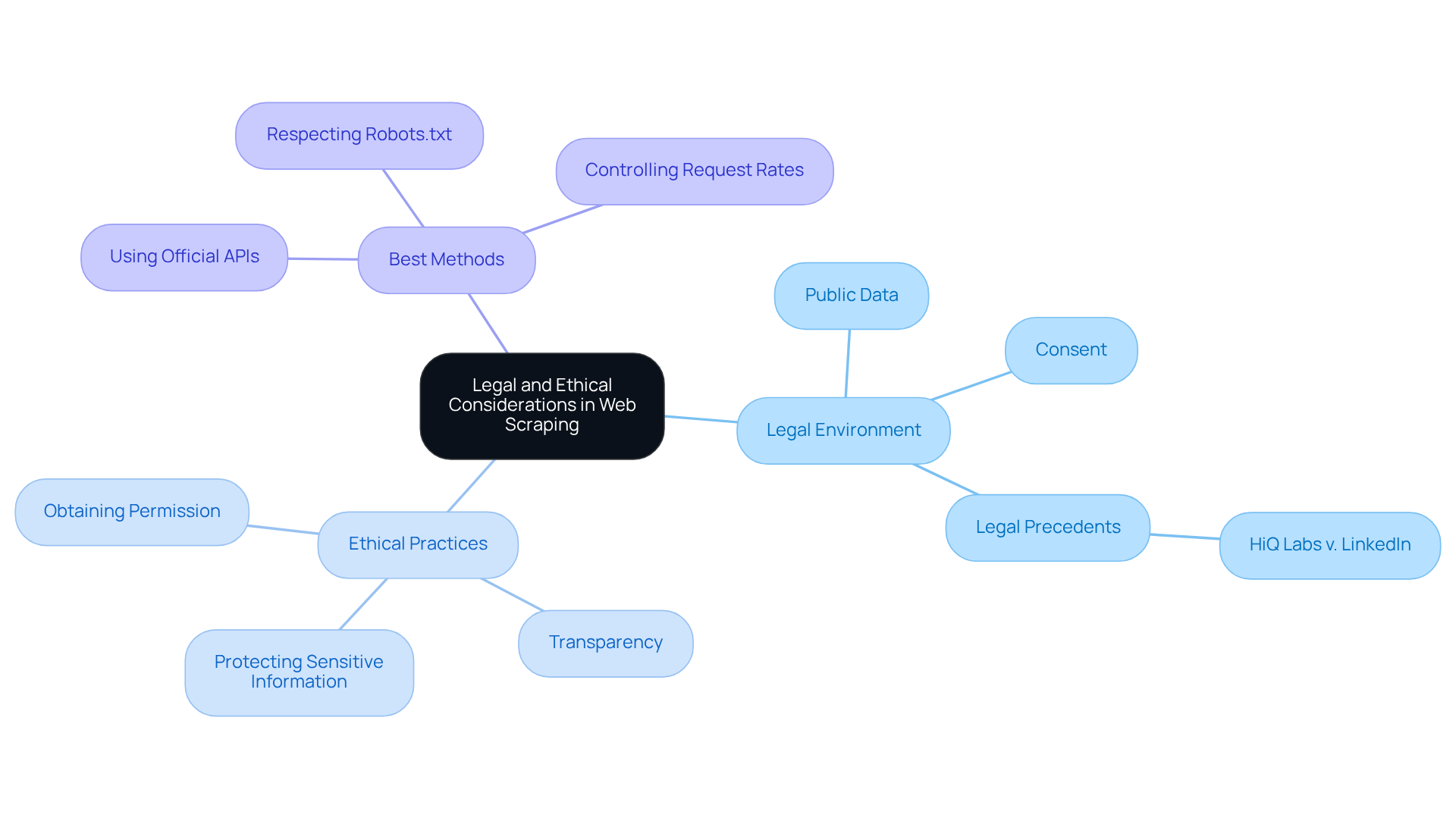

The legal and ethical environment of web data collection is complex and varies significantly across jurisdictions. Collecting publicly available data is generally permissible; however, complications arise when personal data is gathered without consent or when a website's terms of service are violated. Legal precedents indicate that irresponsible data extraction can lead to copyright infringement or breach of contract claims.

For instance, in hiQ Labs v. LinkedIn, the US Court of Appeals for the Ninth Circuit indicated that scraping publicly accessible data is unlikely to violate the Computer Fraud and Abuse Act, while the case also underlined the importance of ethical considerations in data collection practices. Legal professionals stress that organisations frequently need to obtain clear permission from individuals when engaging in web extraction activities involving personal information. This approach not only aligns with GDPR requirements but also mitigates potential ethical violations.

Morally, companies must manage the consequences of their extraction activities, ensuring respect for content creators' rights and compliance with privacy regulations like GDPR. Reports indicate that approximately 62% of organisations have increased their cybersecurity investments due to GDPR, reflecting a growing awareness of the need for compliance.

Optimal methods for ethical web harvesting include:

- Obtaining permission when necessary to honour content ownership.

- Being transparent about usage to foster trust with content providers.

- Implementing measures to protect sensitive information, thereby minimising legal risks.

- Utilising official APIs when available to respect rate limits.

- Managing request rates to avoid overloading servers.

By adhering to these practices, businesses can navigate the complexities of web scraping while maintaining positive relationships with data sources and ensuring compliance with evolving legal standards.
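
Two of the practices listed above, honouring a site's stated access rules and managing request rates, can be sketched with the Python standard library. This is an illustrative example under stated assumptions: the robots.txt content, the `example-scraper` user agent, and the `Throttle` class are hypothetical, and checking robots.txt is a courtesy convention rather than a substitute for legal review.

```python
import time
import urllib.robotparser

# Hypothetical robots.txt content; a real scraper would download it from the site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""


def build_rules(robots_txt):
    """Parse robots.txt text into a rule set that can answer can_fetch()."""
    rules = urllib.robotparser.RobotFileParser()
    rules.parse(robots_txt.splitlines())
    return rules


class Throttle:
    """Enforces a minimum interval between consecutive requests."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._last = None  # time of the previous request, if any

    def wait(self):
        """Block until at least min_interval has passed since the last call."""
        now = self._clock()
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last = now


AGENT = "example-scraper"
rules = build_rules(ROBOTS_TXT)
# Use the site's requested delay when one is given, else a one-second default.
throttle = Throttle(rules.crawl_delay(AGENT) or 1.0)

for url in ("https://example.com/products", "https://example.com/private/data"):
    allowed = rules.can_fetch(AGENT, url)
    print(url, "allowed" if allowed else "skipped")
```

Before each real request to an allowed URL, the scraper would call `throttle.wait()` so that requests are spaced out rather than sent in bursts.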

Conclusion

In conclusion, website scrapers are indispensable tools that enable businesses to extract valuable data from websites, fundamentally transforming how organisations gather and utilise information. By automating content retrieval and converting it into structured formats, these tools facilitate data-driven decision-making, allowing companies to maintain a competitive edge in their respective markets.

The applications of web scraping span various industries, including e-commerce, travel, and marketing. Organisations utilise these tools to:

- Monitor competitor pricing

- Enhance customer engagement through sentiment analysis

- Conduct thorough market research

Furthermore, the discussion surrounding the technologies and techniques behind web scrapers underscores their sophistication, demonstrating how they adapt to evolving web environments while ensuring compliance with legal and ethical standards.

As the web scraping landscape continues to evolve, it is crucial for organisations to prioritise ethical practices and legal compliance to uphold trust with data providers and consumers. By adopting responsible web scraping methods, businesses can leverage the power of data extraction while nurturing positive relationships with content owners. This proactive approach not only mitigates potential legal issues but also positions companies to thrive in an increasingly data-centric world.

Frequently Asked Questions

What is a website scraper?

A website scraper, also known as a web extractor, is a software tool designed to efficiently retrieve information from websites by navigating web pages, extracting content, and converting it into structured formats like databases or spreadsheets.

How do web extraction tools work?

Web extraction tools utilise advanced techniques such as HTML parsing, DOM manipulation, and HTTP requests to access and extract desired information from web pages.

What types of information can be targeted by web extractors?

Web extractors can be tailored to target specific information points, including product prices, reviews, and contact details.

What is the projected trend for businesses using web tools for data extraction by 2026?

It is projected that over 60% of businesses will be employing web tools for data extraction by 2026, indicating a significant shift towards data-driven decision-making.

What advancements have been made in web extraction tools?

Recent advancements include AI-driven features like adaptive retries and auto-labelling, which help data collectors navigate complex web structures and circumvent anti-bot defences more effectively.

In which industries is web data extraction technology particularly evident?

Web data extraction technology is particularly evident in industries such as e-commerce, where it is used to monitor competitor pricing strategies.

Can you provide an example of a company that successfully used web scraping?

UK retailer John Lewis implemented competitor price analysis through web scraping and reportedly saw a 4% increase in sales.

What features do advanced information extraction solutions offer?

Advanced information extraction solutions, like those from Appstractor, offer features such as price monitoring and competitive assortment tracking to help businesses maintain a competitive edge while ensuring GDPR compliance.

What is the importance of compliance and ethical practices in web data extraction?

Compliance and ethical practices are crucial as the focus shifts from mere information extraction to fostering collaborative relationships between information owners and gatherers, ensuring that scraping practices comply with legal standards and respect the rights of content owners.
