Introduction

Web scraping has become an essential tool for digital marketers, allowing them to leverage the vast amounts of information available online. This guide walks through web scraping with Python and gives readers a thorough understanding of the process, from setting up the necessary environment to extracting and cleaning data. As the web evolves into a more regulated landscape, an important question arises: how can marketers ensure their scraping practices are both effective and ethically sound?

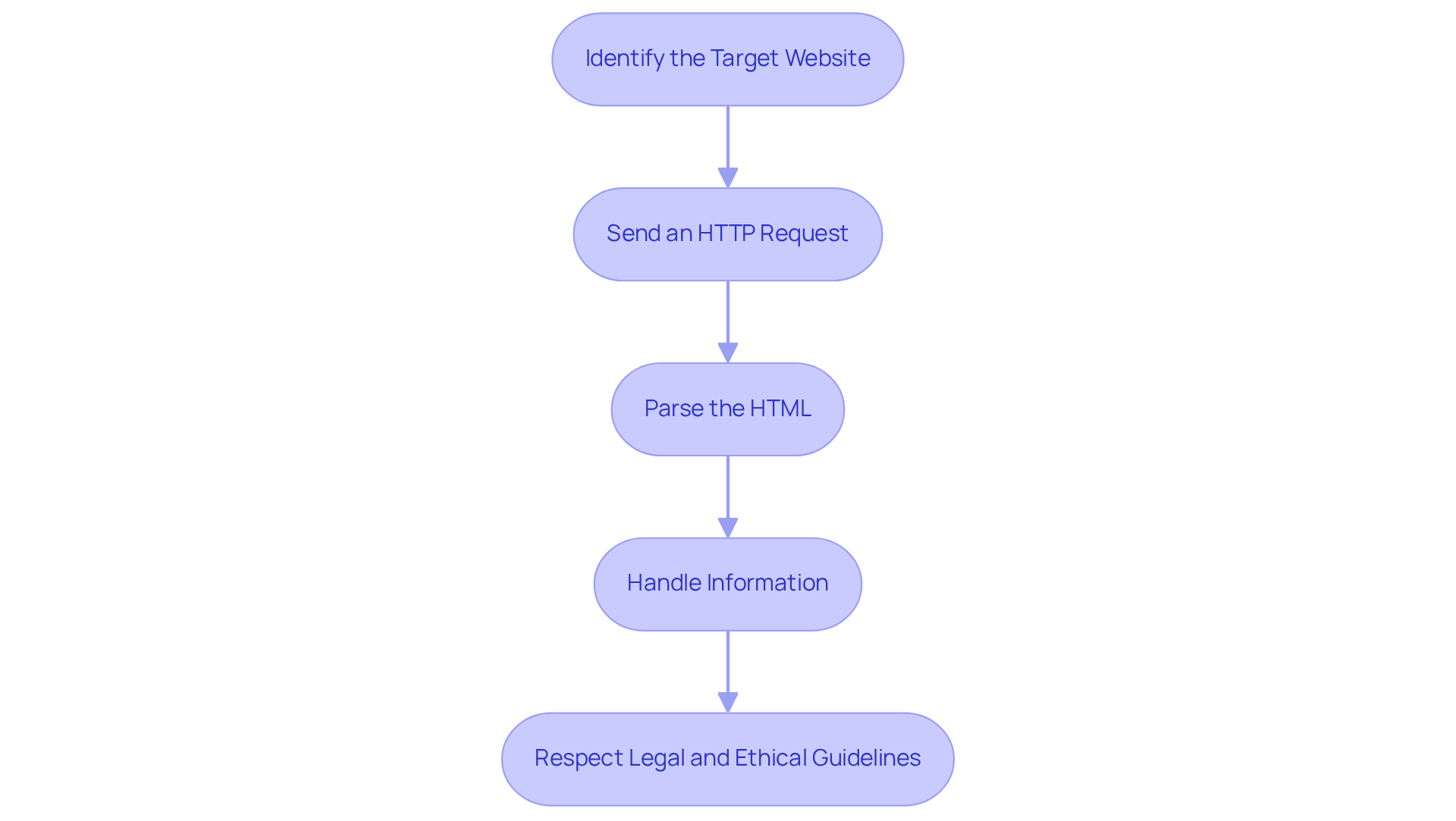

Understand the Web Scraping Process

Web scraping (also called web harvesting) is an automated method for retrieving information from websites, and it underpins many applications in digital marketing and data analysis. The process consists of several key components:

- Identify the Target Website: Start by selecting the website you want to extract data from and specifying exactly what information you need. Understanding the site's structure and content is crucial for effective scraping.

- Send an HTTP Request: Use a library such as requests in Python to send a request to the server hosting the website. The server returns the page's HTML, which serves as the foundation for data extraction.

- Parse the HTML: After obtaining the HTML, use a parsing library such as BeautifulSoup to navigate its structure. This lets you efficiently pinpoint and extract the data you want.

- Handle the Data: Once extracted, data often needs cleaning and formatting before it is suitable for analysis or storage. This step is vital for maintaining data integrity and usability. Appstractor's data mining service automates this process, delivering clean, de-duplicated data through advanced proxy networks and extraction technology and eliminating the need for manual data collection. Options include Rotating Proxy Servers for self-serve IPs, Full Service for turnkey data delivery, or Hybrid if you have in-house scrapers but need additional scale or expertise.

- Respect Legal and Ethical Guidelines: Always review the website's robots.txt file and terms of service to ensure you comply with its data collection policies. As we approach 2026, adhering to these guidelines is increasingly important as the web shifts towards a permission economy, in which ethical data collection practices are paramount.
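Part of the robots.txt check can be automated with Python's standard-library `urllib.robotparser` module. The sketch below is a minimal illustration, assuming you already have the robots.txt text in hand; the `robots_policy` helper, the user-agent string and the sample rules are hypothetical. Against a live site you would call the parser's `set_url()` and `read()` methods instead of `parse()`.

```python
from typing import Optional, Tuple
from urllib.robotparser import RobotFileParser


def robots_policy(robots_txt: str, user_agent: str, url: str) -> Tuple[bool, Optional[int]]:
    """Return (allowed, crawl_delay) for `url` under the given robots.txt rules."""
    parser = RobotFileParser()
    # parse() takes the file's lines; for a live site, use
    # parser.set_url("https://example.com/robots.txt") and parser.read() instead
    parser.parse(robots_txt.splitlines())
    allowed = parser.can_fetch(user_agent, url)
    # crawl_delay() returns the integer Crawl-delay value, or None if there is none
    delay = parser.crawl_delay(user_agent)
    return allowed, delay


# Made-up robots.txt content for illustration only
rules = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""

print(robots_policy(rules, "my-marketing-scraper", "https://example.com/blog/"))
print(robots_policy(rules, "my-marketing-scraper", "https://example.com/private/report"))
```

If a crawl delay is returned, pausing for that many seconds between requests (for example with `time.sleep`) keeps a scraper within the limits the site has published.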

The web data extraction market is projected to grow significantly, with estimates suggesting it could reach between USD 1 billion and USD 1.1 billion by 2026. This growth underscores its critical role in data-driven decision-making across industries. Industry leaders emphasise that the focus is shifting from mere data extraction to responsible and sustainable practices that respect content ownership and privacy.

Set Up Your Python Environment and Libraries

To start extracting web data with Python, you first need to configure your environment and install the required packages. Follow these steps:

- Install Python: Download and install the latest version of Python from the official website. If you use the Windows installer, check the box to add Python to your system PATH during installation.

- Create a Virtual Environment: Open your command line interface (CLI) and create a virtual environment to manage your project dependencies:

```shell
python -m venv myenv
```

- Activate the Virtual Environment: Run the command for your operating system.

On Windows:

```shell
myenv\Scripts\activate
```

On macOS/Linux:

```shell
source myenv/bin/activate
```

- Install the Necessary Packages: Use pip to install the libraries needed for web extraction. Popular choices in 2026 include:

```shell
pip install requests beautifulsoup4 pandas newspaper3k
```

Newspaper3k is particularly useful for scraping news articles, as it returns clean content without HTML tags or other junk.

- Verify the Installation: Confirm that the libraries are installed correctly by running:

```python
import requests
import bs4
import pandas as pd
import newspaper  # the newspaper3k package is imported as "newspaper"
```

If no errors occur, your setup is complete and ready for web scraping projects.
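The import check above stops at the first missing package. If you would rather see every missing package at once, a short script using the standard library's `importlib.util.find_spec` can report them together. This is a sketch: the `REQUIRED` mapping and the `missing_packages` helper are illustrative names, and `find_spec` only confirms that a package can be located, not that it imports cleanly, so the plain import test remains the stronger check.

```python
import importlib.util

# Import name -> pip package name (they differ for bs4 and newspaper)
REQUIRED = {
    "requests": "requests",
    "bs4": "beautifulsoup4",
    "pandas": "pandas",
    "newspaper": "newspaper3k",
}


def missing_packages(required: dict) -> list:
    """Return the pip names of packages whose import name cannot be located."""
    return [
        pip_name
        for import_name, pip_name in required.items()
        if importlib.util.find_spec(import_name) is None
    ]


if __name__ == "__main__":
    missing = missing_packages(REQUIRED)
    if missing:
        print("Missing packages. Install them with: pip install " + " ".join(missing))
    else:
        print("All packages found; your environment is ready.")
```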

Additionally, inspect the HTML source code of the web pages you intend to scrape, for example with your browser's developer tools. This helps you identify the specific elements that hold the information you want, such as quotes or product prices. For news-focused projects, Aditya Singh highlights 'four easy-to-use open-sourced Python web scraping tools to help you create your own news mining solution.'
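To get a quick overview of a page's structure before writing selectors, you can count how often each tag-and-class combination appears; elements that repeat are usually the records worth extracting. The sketch below assumes BeautifulSoup is installed; `summarise_structure` is a hypothetical helper, and the sample markup is invented, loosely modelled on a quotes page.

```python
from collections import Counter

from bs4 import BeautifulSoup


def summarise_structure(html: str, top: int = 10) -> list:
    """Count tag.class combinations to reveal repeated elements worth scraping."""
    soup = BeautifulSoup(html, "html.parser")
    counts = Counter()
    for tag in soup.find_all(True):  # True matches every tag in the document
        classes = tag.get("class") or []  # BeautifulSoup returns class as a list
        key = tag.name + "".join(f".{name}" for name in classes)
        counts[key] += 1
    return counts.most_common(top)


# Invented sample markup for illustration
sample_html = """
<div class="quote"><span class="text">"First quote"</span><small class="author">A. Author</small></div>
<div class="quote"><span class="text">"Second quote"</span><small class="author">B. Author</small></div>
"""

for selector, count in summarise_structure(sample_html):
    print(f"{count:>3}  {selector}")
```

Here `div.quote`, `span.text` and `small.author` each appear twice, which suggests targeting `div.quote` containers and reading the text and author from inside each one.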

Extract Data Using Python Libraries

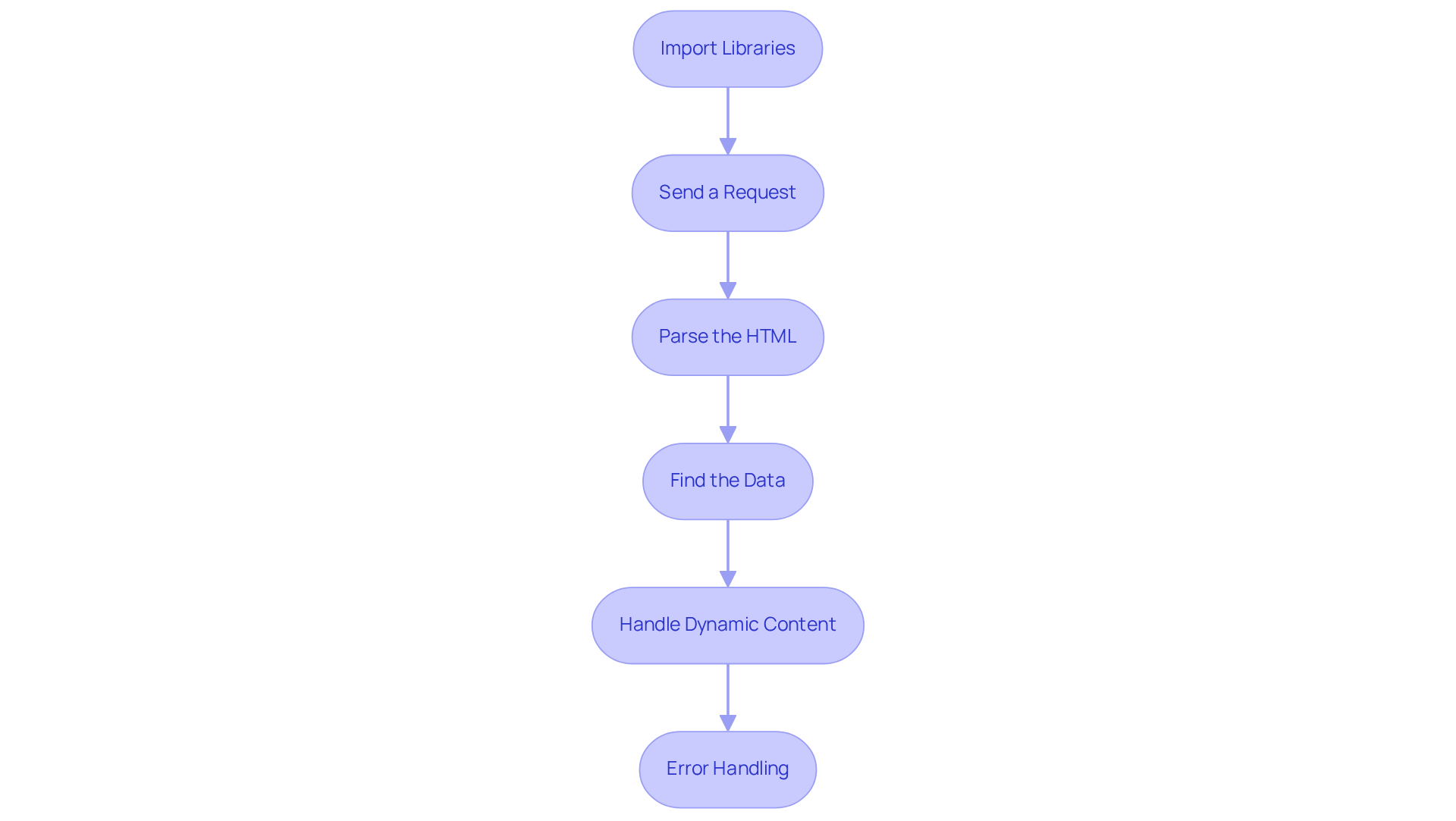

To begin extracting data, follow these steps:

- Import Libraries: Start by importing the necessary libraries in your Python script:

```python
import requests
from bs4 import BeautifulSoup
```

- Send a Request: Use the requests library to fetch the HTML content of the target webpage:

```python
url = 'https://example.com'
# A timeout stops the script from hanging on an unresponsive server
response = requests.get(url, timeout=10)
response.raise_for_status()  # raise an error for 4xx/5xx status codes
html_content = response.text
```

- Parse the HTML: Create a BeautifulSoup object to parse the HTML:

```python
soup = BeautifulSoup(html_content, 'html.parser')
```

- Find the Data: Use BeautifulSoup methods to locate the desired data. For instance, to find all the level-two headings (h2) on the page:

```python
headings = soup.find_all('h2')
for heading in headings:
    print(heading.get_text(strip=True))
```

- Handle Dynamic Content: If the website uses JavaScript to load content, consider using Selenium to automate a browser and scrape the rendered HTML. Many modern websites rely on JavaScript to deliver content, which makes this a frequent obstacle in web data extraction.

- Error Handling: Implement error handling to manage potential issues such as connection errors or missing elements, so that a single failure does not halt your whole data collection run.
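The error-handling step above can be sketched as two small helpers: one that returns `None` instead of crashing when a request fails, and one that returns `None` when an element is missing. This is a minimal example assuming the requests and BeautifulSoup libraries installed earlier; `fetch_html` and `safe_text` are hypothetical helper names, not part of either library.

```python
import logging
from typing import Optional

import requests
from bs4 import BeautifulSoup

logger = logging.getLogger(__name__)


def fetch_html(url: str, timeout: float = 10.0) -> Optional[str]:
    """Return the page's HTML, or None if the request fails."""
    try:
        response = requests.get(url, timeout=timeout)
        # Treat 4xx/5xx status codes as failures too
        response.raise_for_status()
    except requests.exceptions.RequestException as exc:
        # Base class for connection errors, timeouts, invalid URLs and HTTP errors
        logger.warning("Request to %s failed: %s", url, exc)
        return None
    return response.text


def safe_text(soup: BeautifulSoup, selector: str) -> Optional[str]:
    """Return the stripped text of the first element matching a CSS selector, or None."""
    element = soup.select_one(selector)
    return element.get_text(strip=True) if element is not None else None
```

In a scraping loop you would call `fetch_html(url)`, skip the page when it returns `None`, and use `safe_text` for each field so that one missing element does not raise an exception for the whole page.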

By following these steps, you can effectively extract data from a wide range of websites, using Python libraries like BeautifulSoup for parsing and tools like Selenium for dynamic content. Appstractor's data scraping solutions can further support use cases such as real estate listing alerts and compensation benchmarking, all while ensuring GDPR compliance.

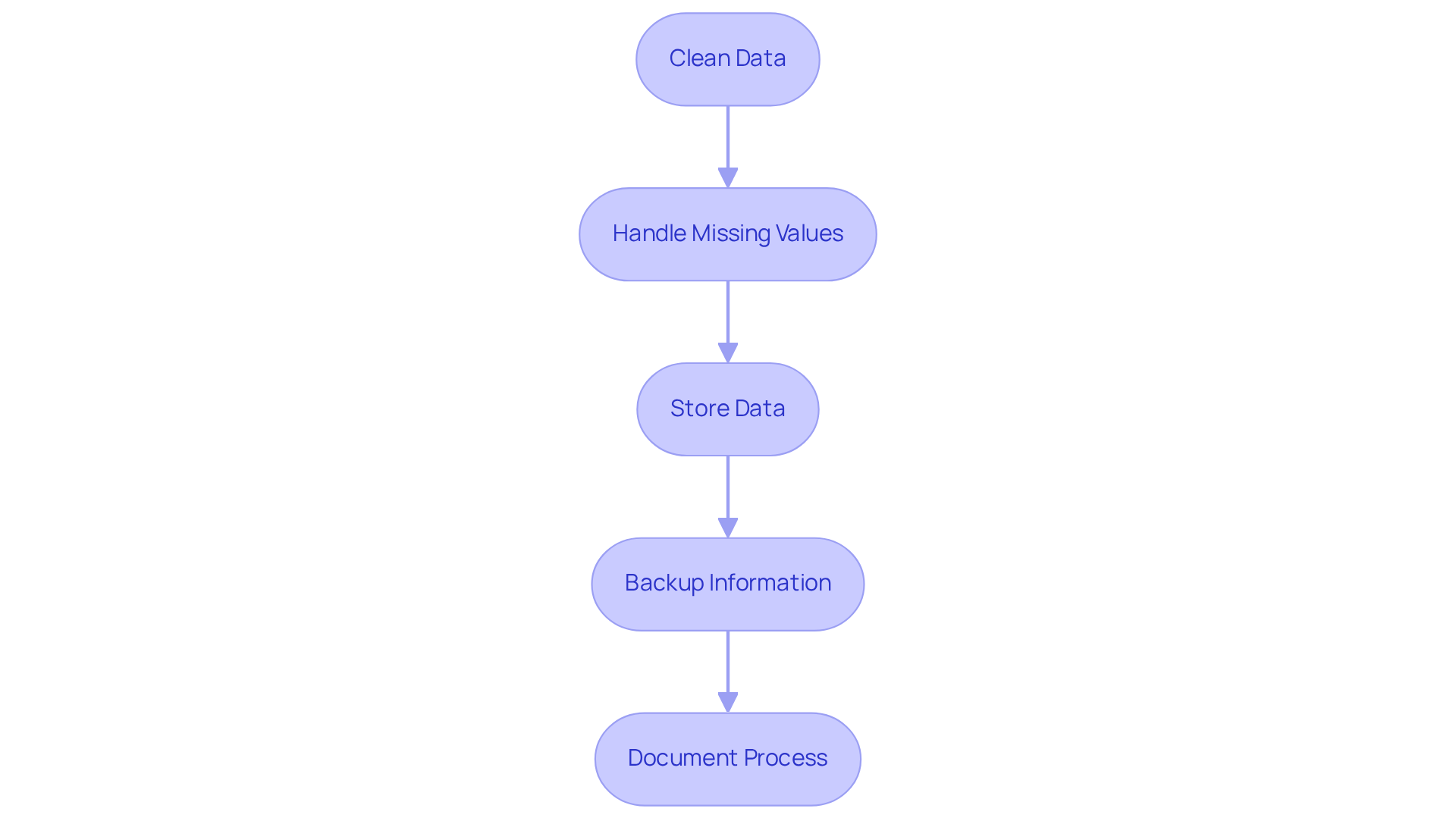

Clean and Store Your Scraped Data

After retrieving information, proper cleaning and storage are crucial for effective analysis. Follow these steps:

- Clean the Data: Use a library such as pandas for data cleaning. For instance, to remove leading and trailing whitespace from the headings collected earlier:

```python
import pandas as pd

# `headings` is the list of h2 elements found in the extraction step
data = {'headings': [heading.text.strip() for heading in headings]}
df = pd.DataFrame(data)
```

Appstractor’s automated data mining service ensures that the data you collect is already normalised and free from duplicates, enhancing the quality of your dataset.

- Handle Missing Values: Identify and manage any missing values in your DataFrame. A common approach is to remove rows with missing data:

```python
df.dropna(inplace=True)
```

With Appstractor’s advanced data extraction solutions, you can rely on structured data delivery that minimises the occurrence of missing values.

- Store the Data: Select a storage format that suits your requirements. For straightforward applications, saving the data as a CSV file is sufficient:

```python
df.to_csv('scraped_data.csv', index=False)
```

For larger datasets, consider using a database like SQLite or PostgreSQL. Appstractor supports various output formats, including JSON, CSV, and Parquet, allowing you to choose the best option for your project.

- Back Up Your Data: Regular backups are crucial to avoid data loss. Use cloud storage or version control systems to improve data management and security. Appstractor’s service retains only essential billing metadata, safeguarding your actual data.

- Document Your Process: Maintain a detailed record of your scraping activities, including the URLs accessed, the data extracted, and any transformations applied. This documentation is invaluable for future reference and supports reproducibility in your data management. By using Appstractor’s services, you can streamline this process and keep a clear record of your data extraction efforts.
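The cleaning, missing-value, storage and documentation steps above can be combined into a small pipeline. The sketch below assumes pandas and Python's built-in sqlite3 module; the function names, the `headings` table and the provenance columns (`source_url`, `scraped_at`) are illustrative choices, not a prescribed schema. It converts empty strings to missing values first, because `dropna()` removes genuine missing values (NaN/None) but not blank text.

```python
import sqlite3
from datetime import datetime, timezone

import pandas as pd


def clean_headings(raw: list) -> pd.DataFrame:
    """Strip whitespace, treat blank strings as missing, drop missing rows and duplicates."""
    df = pd.DataFrame({"heading": raw})
    df["heading"] = df["heading"].str.strip()
    # dropna() ignores empty strings, so convert them to missing values first
    df["heading"] = df["heading"].replace("", pd.NA)
    return df.dropna().drop_duplicates().reset_index(drop=True)


def store_with_provenance(df: pd.DataFrame, conn: sqlite3.Connection, source_url: str) -> None:
    """Append rows to an SQLite table, recording where and when they were scraped."""
    stamped = df.assign(
        source_url=source_url,
        scraped_at=datetime.now(timezone.utc).isoformat(),
    )
    stamped.to_sql("headings", conn, if_exists="append", index=False)


# Example run with an in-memory database and made-up headings
conn = sqlite3.connect(":memory:")
cleaned = clean_headings(["  Pricing ", None, "", "Pricing", "Blog\n"])
store_with_provenance(cleaned, conn, "https://example.com")
print(conn.execute("SELECT heading, source_url FROM headings").fetchall())
```

Passing a file path such as `'scraped_data.db'` to `sqlite3.connect` instead of `':memory:'` keeps the table on disk; for PostgreSQL, pandas' `to_sql` accepts a SQLAlchemy engine in place of the sqlite3 connection.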

Conclusion

Mastering web scraping with Python is a crucial skill for digital marketers aiming to leverage data effectively. By grasping the web scraping process, establishing a Python environment, and employing the right libraries, marketers can extract valuable information from websites to enhance their strategies and decision-making.

This guide has explored key components of web scraping, including:

- Identifying target websites

- Sending HTTP requests

- Parsing HTML with BeautifulSoup

- Cleaning and storing data effectively

Additionally, it has emphasised the importance of adhering to legal and ethical guidelines, especially as the digital landscape shifts towards a permission economy. The insights provided underscore the significance of responsible data collection practices and highlight the growing market for web data extraction, projected to reach up to USD 1.1 billion by 2026.

As the demand for data-driven insights continues to escalate, marketers are encouraged to adopt web scraping as a powerful tool in their toolkit. By following best practices and using advanced solutions like Appstractor, professionals can ensure their data collection efforts are efficient, compliant, and impactful. Adopting this practice not only enhances marketing strategies but also fosters a deeper understanding of market trends and consumer behaviour, ultimately driving success in an increasingly competitive landscape.

Frequently Asked Questions

What is web scraping?

Web scraping, also known as web harvesting, is an automated method for retrieving information from websites, essential for various applications in digital marketing and data analysis.

What are the key components of the web scraping process?

The key components of the web scraping process include identifying the target website, sending an HTTP request to retrieve the page content, parsing the HTML to extract information, handling the extracted information, and respecting legal and ethical guidelines.

How do I identify the target website for scraping?

To identify the target website, select the site from which you want to extract data and specify the exact information needed. Understanding the site's structure and content is crucial for effective scraping.

What tools can be used to send an HTTP request?

Tools like the requests library in Python can be used to send an HTTP request to the server hosting the website, which retrieves the markup content of the page.

How do I parse HTML after retrieving it?

After obtaining the HTML, you can use a parsing library such as BeautifulSoup to navigate through the HTML structure and efficiently pinpoint and extract the desired information.

What should I do with the extracted information?

Once extracted, the information often requires cleaning and formatting to ensure it is suitable for analysis or storage, which is vital for maintaining information integrity and usability.

What services does Appstractor offer for information mining?

Appstractor's information mining service automates the process of data extraction, delivering clean, de-duplicated information through advanced proxy networks and extraction technology. They offer options like Rotating Proxy Servers for self-serve IPs, Full Service for turnkey data delivery, or Hybrid solutions for those with in-house scrapers needing additional scale or expertise.

Why is it important to respect legal and ethical guidelines in web scraping?

It is important to review the website's robots.txt file and terms of service to ensure compliance with their data collection policies. Adhering to these guidelines is increasingly important as the web transitions towards a permission economy, where ethical data collection practices are paramount.

What is the projected growth of the web data extraction market?

The web data extraction market is projected to grow significantly, with estimates suggesting it could reach between USD 1 billion and USD 1.1 billion by 2026.

What is the focus of industry leaders regarding data extraction practices?

Industry leaders emphasise that the focus is shifting from mere data extraction to responsible and sustainable practices that respect content ownership and privacy.

List of Sources

- Understand the Web Scraping Process

- Web data for scraping developers in 2026: AI fuels the agentic future (https://zyte.com/blog/web-data-for-scraping-developers)

- News companies are doubling down to fight against AI Web scrapers (https://inma.org/blogs/Product-and-Tech/post.cfm/news-companies-are-doubling-down-to-fight-against-ai-web-scrapers)

- Web Scraping Report 2026: Market Trends, Growth & Key Insights (https://promptcloud.com/blog/state-of-web-scraping-2026-report)

- IAB Releases Draft Legislation Addressing AI Scraping (https://iab.com/news/iab-releases-draft-legislation-to-address-ai-content-scraping-and-protect-publishers)

- How AI Is Changing Web Scraping in 2026 (https://kadoa.com/blog/how-ai-is-changing-web-scraping-2026)

- Set Up Your Python Environment and Libraries

- 4 Python Web Scraping Libraries To Mining News Data | NewsCatcher (https://newscatcherapi.com/blog-posts/python-web-scraping-libraries-to-mine-news-data)

- Scraping ‘Quotes to Scrape’ website using Python (https://medium.com/@kshamasinghal/scraping-quotes-to-scrape-website-using-python-c8a616b244e7)

- Scraping Google news using Python (2026 Tutorial) (https://serpapi.com/blog/scraping-google-news-using-python-tutorial)

- Extract Data Using Python Libraries

- Web Scraping Statistics & Trends You Need to Know in 2026 (https://scrapingdog.com/blog/web-scraping-statistics-and-trends)

- Python Web Scraping: Full Tutorial With Examples (2026) (https://scrapingbee.com/blog/web-scraping-101-with-python)

- Web Scraping Report 2026: Market Trends, Growth & Key Insights (https://promptcloud.com/blog/state-of-web-scraping-2026-report)

- 101 Data Science Quotes (https://dataprofessor.beehiiv.com/p/101-data-science-quotes)

- Web Scrape Inspirational Quotes using Python (https://python.plainenglish.io/web-scrape-inspirational-quotes-using-python-e9c10d22a018)

- Clean and Store Your Scraped Data

- 30 Data Hygiene Statistics for 2026 | PGM Solutions (https://porchgroupmedia.com/blog/data-hygiene-statistics)

- 101 Data Science Quotes (https://dataprofessor.beehiiv.com/p/101-data-science-quotes)

- Data Transformation Challenge Statistics — 50 Statistics Every Technology Leader Should Know in 2026 (https://integrate.io/blog/data-transformation-challenge-statistics)

- 23 Must-Read Quotes About Data [& What They Really Mean] (https://careerfoundry.com/en/blog/data-analytics/inspirational-data-quotes)