Introduction

Web scraping has emerged as a powerful tool for marketers, allowing them to extract valuable insights from the vast array of online data. By mastering scrapers, professionals can harness competitive intelligence, track market trends, and understand customer preferences with remarkable efficiency. However, challenges arise in navigating data quality, compliance, and effective tool configuration.

To ensure that marketers not only gather data but also use it strategically to enhance their campaigns, it is essential to adopt best practices that maximise the value derived from web scraping.

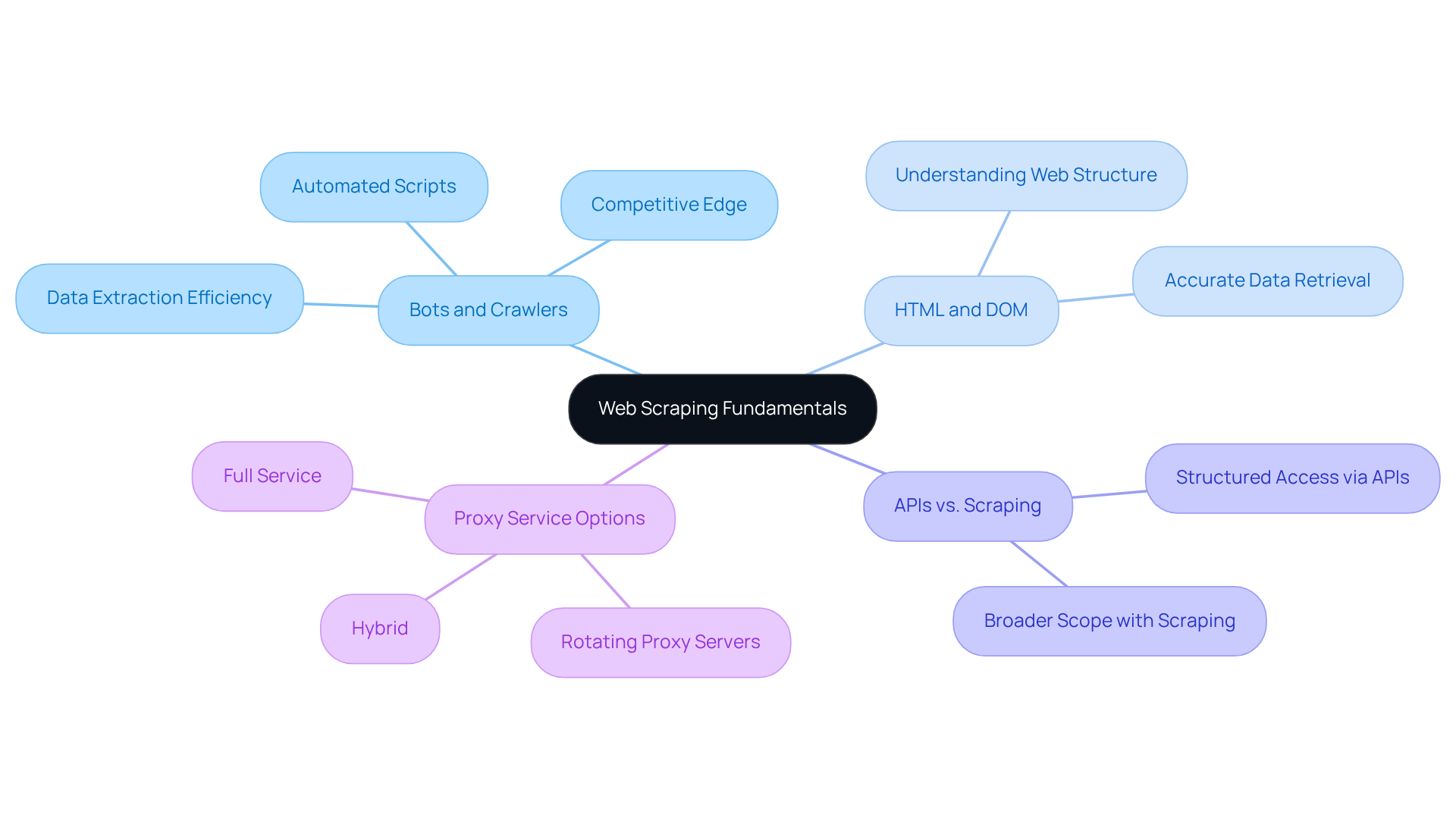

Understand Web Scraping Fundamentals

Web harvesting, or web scraping, is an automated process that retrieves information from websites, typically using a scraper to collect content and organise it into usable formats. The technique is invaluable for professionals seeking insights into competitors, market trends, and customer preferences. Appstractor's information mining service automates this process, gathering and delivering organised information from the internet using advanced proxy networks and extraction technology, which eliminates the need for manual information collection.

Key concepts include:

- Bots and Crawlers: These automated scripts efficiently navigate web pages to extract data, enabling marketers to gather large volumes of information quickly and maintain a competitive edge.

- HTML and DOM: A solid understanding of the structure of web pages is crucial for effective data extraction. Knowledge of HTML and the Document Object Model (DOM) allows professionals to pinpoint and retrieve relevant information accurately.

- APIs vs. Scraping: While APIs offer structured access to information, scraping provides a broader scope for collecting information from various sources. This flexibility enables professionals to gather diverse datasets that can inform strategic decisions.
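To make the HTML and DOM point concrete, here is a minimal sketch using only Python's standard-library `html.parser`. The sample markup and its class names (`product`, `name`, `price`) are hypothetical; real pages need selectors matched to their own structure, and libraries such as BeautifulSoup make this much less verbose.

```python
from html.parser import HTMLParser

# Hypothetical markup: the class names are illustrative, not from a real site.
SAMPLE_HTML = """
<ul>
  <li class="product"><span class="name">Desk Lamp</span><span class="price">£24.99</span></li>
  <li class="product"><span class="name">Office Chair</span><span class="price">£129.00</span></li>
</ul>
"""


class ProductParser(HTMLParser):
    """Walks the document and collects a dict for each <li class="product">."""

    def __init__(self):
        super().__init__()
        self.products = []    # completed product records
        self._current = None  # record for the <li> we are inside, if any
        self._field = None    # "name" or "price" while inside a matching <span>

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if tag == "li" and "product" in classes:
            self._current = {}
        elif tag == "span" and self._current is not None:
            # Remember which field the text inside this span belongs to.
            self._field = next((c for c in classes if c in ("name", "price")), None)

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None
        elif tag == "li" and self._current is not None:
            self.products.append(self._current)
            self._current = None

    def handle_data(self, data):
        # Text outside a tracked span (e.g. whitespace between tags) is ignored.
        if self._field and self._current is not None:
            self._current[self._field] = self._current.get(self._field, "") + data.strip()


def extract_products(html):
    parser = ProductParser()
    parser.feed(html)
    parser.close()
    return parser.products


for product in extract_products(SAMPLE_HTML):
    print(product)
```

Each product becomes a dictionary, a structured format that can then be written to CSV or a database.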

Appstractor offers various proxy service options, including:

- Rotating Proxy Servers for self-serve IPs

- Full Service for turnkey information delivery

- Hybrid for those with in-house scrapers needing additional scale or expertise

Service delivery timelines vary: Rotating Proxy Servers become operational within 24 hours, Hybrid audits take 1-4 days, and Full Service projects commence in 5-7 business days. By mastering these fundamentals and leveraging advanced mining solutions, marketers can enhance their collection efforts and improve their marketing campaigns.

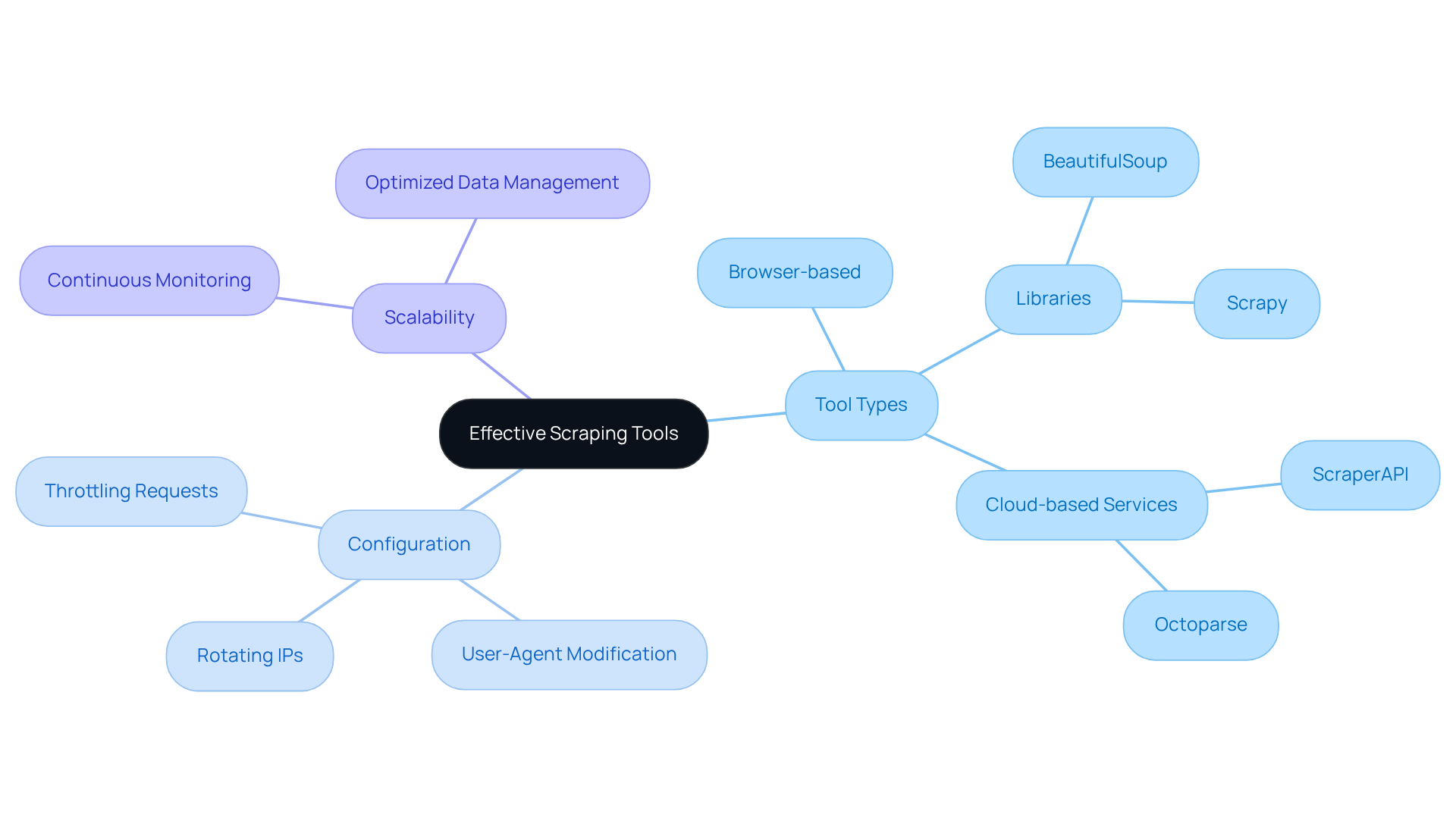

Choose and Configure Effective Scraping Tools

Choosing the appropriate tools is vital for efficient information extraction. Here are key considerations:

- Tool Types: Depending on your technical expertise and requirements, choose from browser-based tools, libraries such as BeautifulSoup or Scrapy, or cloud-based services. Each type offers advantages suited to different user needs. Appstractor also provides information extraction solutions designed specifically for real estate and job market insights.

- Configuration: Proper configuration is essential for navigating anti-bot measures. Strategies such as rotating IPs and varying user-agent strings reduce the risk of being blocked. Appstractor's rotating proxy servers offer self-serve IPs that go live within 24 hours, making them a quick option for marketers. Its Full Service option provides turnkey information delivery, allowing you to focus on analysis rather than collection, and the Hybrid option adds scale or expertise for teams running their own in-house scrapers.

- Scalability: Ensure that the selected tool can grow with your information needs, especially if you expect to collect large volumes. Appstractor's infrastructure supports continuous monitoring and optimised data management, allowing you to adapt as data demands increase.
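A rough sketch of the rotation strategy described under Configuration, using Python's standard `urllib.request`. The proxy hostnames and user-agent strings are placeholders rather than real endpoints, and `RotatingFetcher` is a hypothetical helper; a production setup would also need retries, back-off and rate limiting.

```python
import itertools
import urllib.request

# Placeholder proxy endpoints and user agents: substitute your provider's values.
PROXIES = [
    "http://proxy-a.example.com:8000",
    "http://proxy-b.example.com:8000",
    "http://proxy-c.example.com:8000",
]
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64)",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 13_5)",
]


class RotatingFetcher:
    """Pairs each outgoing request with the next proxy and user agent in turn."""

    def __init__(self, proxies, user_agents):
        self._proxies = itertools.cycle(proxies)
        self._agents = itertools.cycle(user_agents)

    def prepare(self, url):
        """Build (proxy, opener, request) without sending anything over the network."""
        proxy = next(self._proxies)
        opener = urllib.request.build_opener(
            urllib.request.ProxyHandler({"http": proxy, "https": proxy})
        )
        request = urllib.request.Request(url, headers={"User-Agent": next(self._agents)})
        return proxy, opener, request

    def fetch(self, url, timeout=10):
        """Send the request through the next proxy and return the raw body."""
        _, opener, request = self.prepare(url)
        with opener.open(request, timeout=timeout) as response:
            return response.read()
```

`prepare()` only builds the opener and request, so the rotation can be checked offline; `fetch()` actually sends traffic through the selected proxy.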

For instance, a mid-sized e-commerce retailer cut its competitive response time from days to hours by implementing a custom data extraction system that monitored competitor websites every 15 minutes. This underscores how well-configured scraping tools can drive marketing success. With 86% of organisations raising their compliance expenditure in 2024, it is also crucial to build compliance into your harvesting practices. Appstractor's operations are entirely GDPR-compliant, ensuring that your information practices meet regulatory standards. As Kanhasoft puts it, "scraping is a powerful engine, but it needs a skilled driver," which highlights the importance of expertise in configuring these tools.
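The 15-minute monitoring loop in the retailer example comes down to polling a price snapshot and diffing it against the previous one. In this sketch, `fetch_snapshot` and `on_change` are hypothetical callables you would supply (for instance, a scraper returning `{product: price}` and a function that alerts the pricing team); the `cycles` parameter exists only so the loop can be stopped in tests.

```python
import time


def diff_prices(previous, current):
    """Compare two {product: price} snapshots and report what moved."""
    changes = {}
    for product, new_price in current.items():
        if product not in previous:
            changes[product] = ("new", None, new_price)
        elif previous[product] != new_price:
            changes[product] = ("changed", previous[product], new_price)
    for product in previous.keys() - current.keys():
        changes[product] = ("removed", previous[product], None)
    return changes


def monitor(fetch_snapshot, on_change, interval_seconds=15 * 60, cycles=None):
    """Poll fetch_snapshot() every interval and pass any differences to on_change()."""
    previous = fetch_snapshot()
    done = 0
    while cycles is None or done < cycles:  # cycles=None means run indefinitely
        time.sleep(interval_seconds)
        current = fetch_snapshot()
        changes = diff_prices(previous, current)
        if changes:
            on_change(changes)
        previous = current
        done += 1
```

Reporting only the differences keeps alerts focused on what actually changed since the last crawl.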

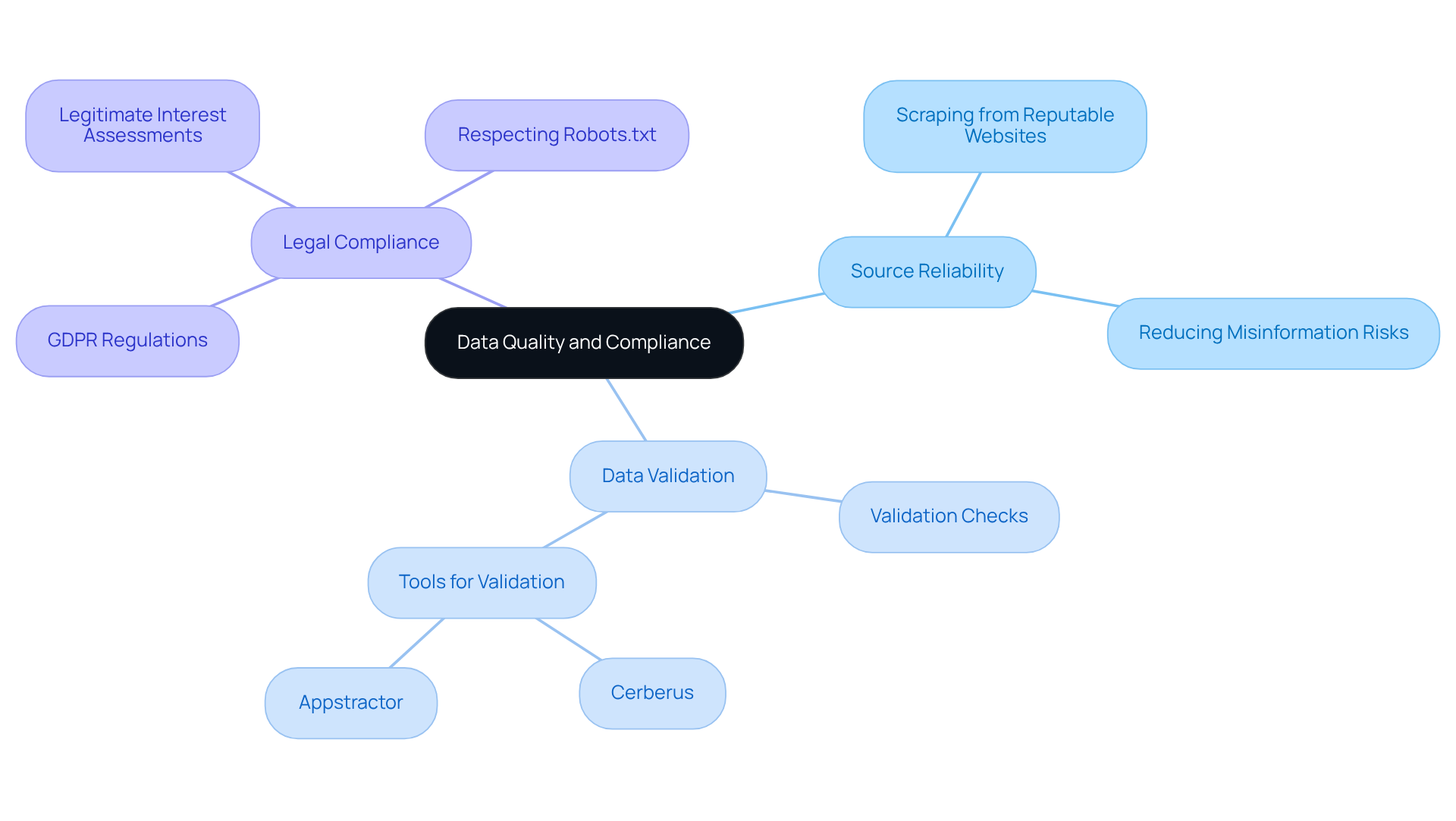

Ensure Data Quality and Compliance

To maintain high-quality data and adhere to legal standards, marketers should implement the following best practices:

- Source Reliability: Scraping data from reputable websites is crucial for ensuring accuracy. Dependable sources not only improve information integrity but also reduce risks related to misinformation.

- Data Validation: Implement validation checks to filter out inaccuracies and outliers. Tools such as Cerberus, a Python validation library, can automate this process so that the information collected is both accurate and actionable. On the collection side, Appstractor's rotating proxy servers feature integrated rotation and sticky sessions for smooth authentication, which keeps extraction effective and dependable. Appstractor also provides a white-glove service with tailored strategy and crawler development.

- Legal Compliance: Understanding and complying with regulations such as the General Data Protection Regulation (GDPR) is essential. This includes respecting robots.txt files and the terms of service of targeted websites. Organisations must navigate complex legal frameworks to justify their scraping activities, and carrying out a Legitimate Interest Assessment (LIA) is essential when handling personal information. GDPR violations can lead to significant penalties, including fines of up to €20 million or 4% of global annual turnover, whichever is higher.
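Respecting robots.txt can be automated with Python's standard `urllib.robotparser`. The robots.txt content and the bot name below are illustrative assumptions; against a live site you would call `set_url()` and `read()` instead of `parse()`. A robots.txt check covers only one item in the compliance list above: GDPR obligations and terms of service still need separate review.

```python
from urllib.robotparser import RobotFileParser

# Illustrative robots.txt content, not taken from a real site.
ROBOTS_TXT = """
User-agent: *
Disallow: /checkout/
Disallow: /account/
Crawl-delay: 5
"""

USER_AGENT = "ExampleMarketingBot"  # hypothetical bot name


def build_policy(robots_text):
    """Parse robots.txt text into a RobotFileParser we can query."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return parser


policy = build_policy(ROBOTS_TXT)
for url in [
    "https://shop.example.com/products/lamp",
    "https://shop.example.com/checkout/basket",
]:
    verdict = "allowed" if policy.can_fetch(USER_AGENT, url) else "disallowed"
    print(url, verdict)

# Honour the site's requested pause between requests (seconds), if any.
print("crawl delay:", policy.crawl_delay(USER_AGENT))
```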

By emphasising information quality and regulatory compliance, professionals can improve their credibility and the impact of their campaigns, positioning themselves as responsible information custodians in an increasingly analytics-driven economy. Appstractor's approach to information cleaning and enrichment, along with continuous maintenance and support, ensures that marketers can rely on clean, de-duplicated information for their strategies.
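The validation and de-duplication steps described in this section can be sketched in plain Python. The row fields (`sku`, `name`, `price`), the sample data, and the ten-times-the-median outlier rule are illustrative assumptions; Cerberus, mentioned above, offers declarative schema validation if you prefer a library.

```python
from statistics import median

# Hypothetical scraped rows; field names and values are made up.
RAW_ROWS = [
    {"sku": "LAMP-01", "name": " Desk Lamp ", "price": "24.99"},
    {"sku": "LAMP-01", "name": "Desk Lamp", "price": "24.99"},     # duplicate
    {"sku": "CHAIR-7", "name": "Office Chair", "price": "129.00"},
    {"sku": "DESK-3", "name": "Standing Desk", "price": "n/a"},    # unparseable
    {"sku": "MUG-2", "name": "Mug", "price": "9.50"},
    {"sku": "PEN-5", "name": "Pen", "price": "9999"},              # outlier
]


def clean_price(value):
    """Turn a scraped price string into a positive float, or None if invalid."""
    try:
        price = float(str(value).replace("£", "").replace(",", "").strip())
    except ValueError:
        return None
    return price if price > 0 else None


def validate_and_dedupe(rows, max_ratio_to_median=10):
    cleaned, seen = [], set()
    for row in rows:
        sku = (row.get("sku") or "").strip()
        name = " ".join((row.get("name") or "").split())  # collapse stray whitespace
        price = clean_price(row.get("price"))
        if not sku or not name or price is None:
            continue  # fails basic schema checks
        if sku in seen:
            continue  # de-duplicate on the product identifier
        seen.add(sku)
        cleaned.append({"sku": sku, "name": name, "price": price})
    if not cleaned:
        return cleaned
    # Crude outlier rule: drop prices far above the median of the batch.
    mid = median(r["price"] for r in cleaned)
    return [r for r in cleaned if r["price"] <= mid * max_ratio_to_median]
```

With the sample rows, the duplicate `LAMP-01`, the unparseable `DESK-3` price and the `PEN-5` outlier are dropped, leaving three clean records.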

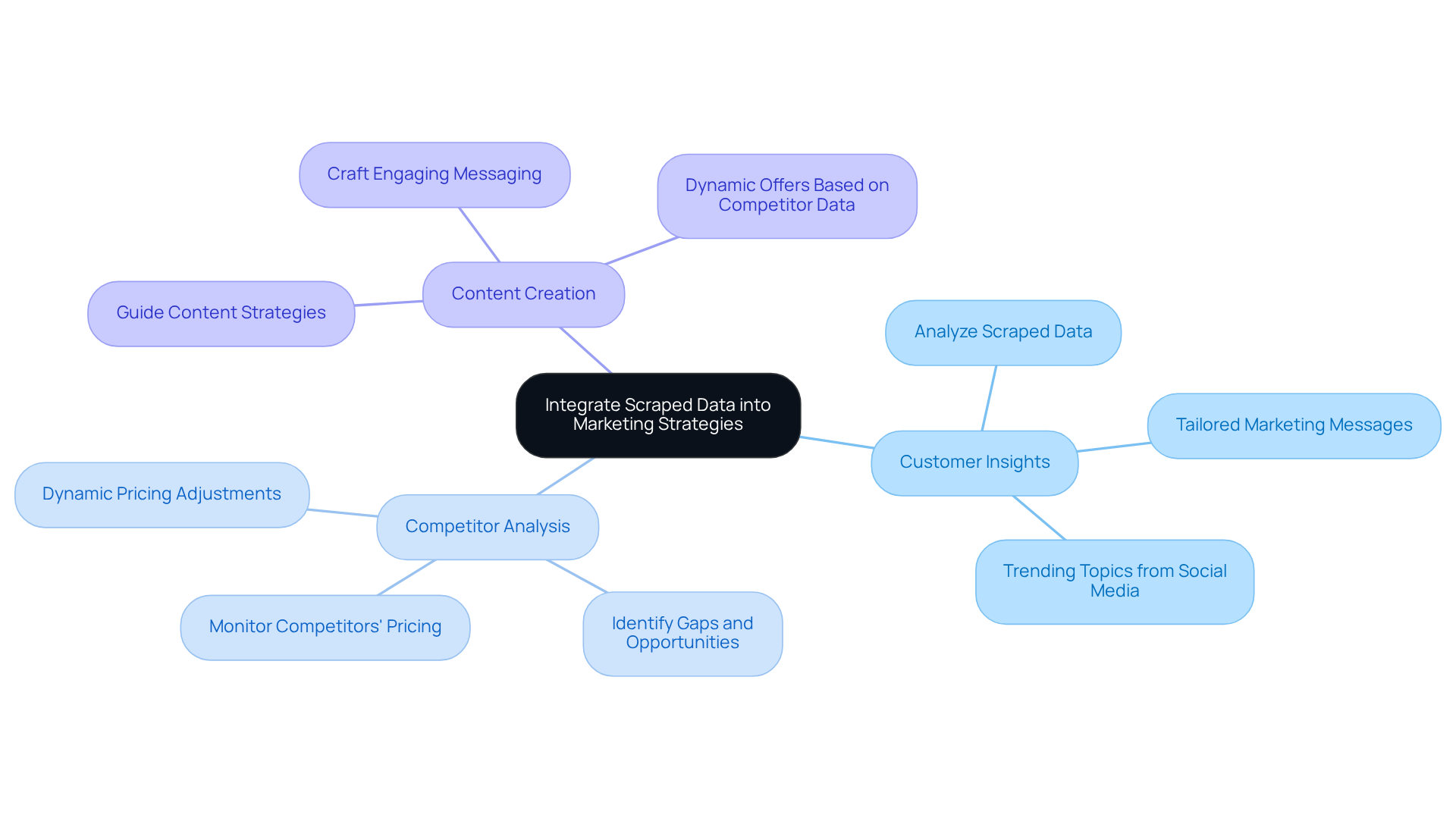

Integrate Scraped Data into Marketing Strategies

Incorporating validated extracted information into marketing strategies is crucial for maximising its value. Here are several effective methods to consider:

- Customer Insights: Analyse scraped data to uncover customer behaviour and preferences. This allows for tailored marketing messages that resonate with your audience. For instance, insights from social media data collection can reveal trending topics, enabling the creation of timely content that engages users and drives traffic.

- Competitor Analysis: Web data extraction lets you monitor competitors' pricing, product offerings, and marketing strategies. This analysis helps identify gaps and opportunities in your approach, ensuring you remain competitive in the market. In 2025, businesses that reported significant improvements in their pricing strategies and market positioning attributed that success to scraping-based competitor analysis.

- Content Creation: Use insights obtained from collected information to guide your content strategy. By understanding what resonates with your audience, you can craft messaging that not only attracts attention but also fosters engagement. For instance, a travel agency might use a scraper to gather competitor pricing data and adjust its offers dynamically, ensuring it provides the best deals to customers.
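For the Competitor Analysis and travel-agency examples, a simple price-gap check shows how scraped prices might feed a pricing decision. The product names, prices and the 5% threshold are made-up sample values, and `price_gaps` is a hypothetical helper rather than part of any product.

```python
from dataclasses import dataclass

# Made-up sample data: our prices and scraped competitor prices.
OUR_PRICES = {"Lisbon 3-night break": 420.0, "Rome weekend": 310.0, "Paris day trip": 150.0}
COMPETITOR_PRICES = {
    "agency_a": {"Lisbon 3-night break": 389.0, "Rome weekend": 315.0},
    "agency_b": {"Lisbon 3-night break": 399.0, "Paris day trip": 149.0},
}


@dataclass
class PriceGap:
    product: str
    our_price: float
    lowest_competitor: float
    gap_pct: float  # positive means we are more expensive


def price_gaps(our_prices, competitor_prices, threshold_pct=5.0):
    """Flag products priced more than threshold_pct above the cheapest competitor."""
    flagged = []
    for product, ours in our_prices.items():
        rivals = [p[product] for p in competitor_prices.values() if product in p]
        if not rivals:
            continue  # nobody else sells it, so there is nothing to compare
        lowest = min(rivals)
        gap = (ours - lowest) / lowest * 100
        if gap > threshold_pct:
            flagged.append(PriceGap(product, ours, lowest, round(gap, 1)))
    # Largest gaps first, so the most urgent offers are reviewed first.
    return sorted(flagged, key=lambda g: g.gap_pct, reverse=True)
```

With this sample data only the Lisbon break is flagged, at about 8% above the cheapest competitor; the Paris gap is under 1% and Rome is already cheaper.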

By effectively integrating these insights into your marketing strategies, you can enhance your decision-making processes and drive better results.
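For the Customer Insights item, one rough way to surface trending topics from scraped social posts is to count how many posts mention each term. The sample posts and the small stopword list are illustrative assumptions; real social data would call for proper tokenisation and much larger samples.

```python
import re
from collections import Counter

# Small illustrative stopword list; extend it for real data.
STOPWORDS = {"the", "and", "for", "this", "our", "with", "too", "new"}

# Made-up sample posts standing in for scraped social media content.
POSTS = [
    "Loving the new #sustainable packaging on this order",
    "Is #sustainable fashion worth the price?",
    "Price drop on trainers this week, sustainable brands too",
    "Great price on eco trainers #sustainable",
]


def trending_terms(posts, top_n=3):
    """Return the top_n terms by number of posts that mention them."""
    counts = Counter()
    for post in posts:
        # Lower-case and split on non-word characters, so "#sustainable" -> "sustainable".
        words = re.findall(r"[a-z0-9']+", post.lower())
        # A set counts each term once per post, so one repetitive post cannot dominate.
        counts.update({w for w in words if len(w) > 2 and w not in STOPWORDS})
    return counts.most_common(top_n)


print(trending_terms(POSTS))
```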

Conclusion

Mastering web scraping is crucial for marketers who wish to excel in a data-driven environment. By effectively using web scraping techniques, professionals can gain valuable insights that shape their strategies and improve decision-making. This article has highlighted the fundamental principles of web scraping, the importance of selecting the right tools, and the necessity of ensuring data quality and compliance.

Key practices include:

- Understanding the mechanics of web scraping.

- Choosing appropriate tools tailored to specific needs.

- Ensuring the accuracy and legal compliance of collected data.

Moreover, integrating this data into marketing strategies fosters a deeper understanding of customer behaviour and competitive positioning, ultimately leading to enhanced results.

As the digital marketing landscape evolves, web scraping remains highly important. By adopting these best practices, marketers can effectively leverage data, navigate compliance challenges, and enhance their campaigns. Embracing web scraping not only streamlines data collection but also empowers marketers to make informed decisions that resonate with their target audience.

Frequently Asked Questions

What is web scraping?

Web scraping is an automated procedure that retrieves information from websites, typically using a scraper to collect content that can be organised into usable formats.

Why is web scraping important for professionals?

Web scraping is invaluable for professionals seeking insights into competitors, market trends, and customer preferences, allowing them to gather organised information from the internet efficiently.

What are bots and crawlers in the context of web scraping?

Bots and crawlers are automated scripts that navigate web pages to extract data, enabling marketers to gather large volumes of information quickly and maintain a competitive edge.

Why is understanding HTML and the Document Object Model (DOM) important for data extraction?

A solid understanding of HTML and the DOM is crucial for effective data extraction, as it allows professionals to pinpoint and retrieve relevant information accurately from web pages.

How do APIs differ from web scraping?

APIs offer structured access to information, while web scraping provides a broader scope for information collection from various sources, enabling professionals to gather diverse datasets for strategic decisions.

What proxy service options are offered for web scraping?

Appstractor offers several proxy service options, including Rotating Proxy Servers for self-serve IPs, Full Service for turnkey information delivery, and Hybrid services for those with in-house scrapers needing additional scale or expertise.

What are the service delivery timelines for the different proxy options?

Rotating Proxy Servers become operational within 24 hours, Hybrid audits take 1-4 days, and Full Service projects commence in 5-7 business days.

How can mastering web scraping fundamentals enhance marketing efforts?

By mastering web scraping fundamentals and leveraging advanced mining solutions, marketers can enhance their collection efforts and improve their marketing campaigns.

List of Sources

- Understand Web Scraping Fundamentals

- Web Scraping Statistics & Trends You Need to Know in 2025 (https://kanhasoft.com/blog/web-scraping-statistics-trends-you-need-to-know-in-2025)

- Web Scraping for Marketing | Data-Driven Marketing Insights 2025 (https://blog.datahut.co/post/webscraping_for_marketing_2025)

- Web Scraping Report 2025: Market Trends, Growth & Key Insights (https://promptcloud.com/blog/state-of-web-scraping-2025-report)

- Web Scraping Statistics & Trends You Need to Know in 2025 (https://scrapingdog.com/blog/web-scraping-statistics-and-trends)

- Web Scraping: Unlocking Business Insights In A Data-Driven World (https://forbes.com/councils/forbestechcouncil/2025/01/27/web-scraping-unlocking-business-insights-in-a-data-driven-world)

- Choose and Configure Effective Scraping Tools

- Web Scraping Statistics & Trends You Need to Know in 2025 (https://kanhasoft.com/blog/web-scraping-statistics-trends-you-need-to-know-in-2025)

- 7 Best Web Scraping Tools Ranked (2025) (https://scrapingbee.com/blog/web-scraping-tools)

- Web Scraping without getting blocked (2025 Solutions) (https://scrapingbee.com/blog/web-scraping-without-getting-blocked)

- Top Ten Web Scraping Tools for 2025: Free and Paid — Retail Technology Innovation Hub (https://retailtechinnovationhub.com/home/2025/10/12/top-ten-web-scraping-tools-for-2025)

- The State of Web Crawling in 2025: Key Statistics and Industry Benchmarks (https://thunderbit.com/blog/web-crawling-stats-and-industry-benchmarks)

- Ensure Data Quality and Compliance

- The state of web scraping in the EU | IAPP (https://iapp.org/news/a/the-state-of-web-scraping-in-the-eu)

- Web Scraping Statistics & Trends You Need to Know in 2025 (https://kanhasoft.com/blog/web-scraping-statistics-trends-you-need-to-know-in-2025)

- Is Web Scraping Legal? Laws, Compliance & Best Practices (https://infomineo.com/services/data-analytics/is-web-scraping-legal-laws-compliance-best-practices)

- Web Scraping Statistics & Trends You Need to Know in 2025 (https://scrapingdog.com/blog/web-scraping-statistics-and-trends)

- Web Scraping in 2025: The €20 Million GDPR Mistake You Can’t Afford to Make (https://medium.com/deep-tech-insights/web-scraping-in-2025-the-20-million-gdpr-mistake-you-cant-afford-to-make-07a3ce240f4f)

- Integrate Scraped Data into Marketing Strategies

- Web Scraping: Unlocking Business Insights In A Data-Driven World (https://forbes.com/councils/forbestechcouncil/2025/01/27/web-scraping-unlocking-business-insights-in-a-data-driven-world)

- Web Scraping for Marketing | Data-Driven Marketing Insights 2025 (https://blog.datahut.co/post/webscraping_for_marketing_2025)

- June 2025 Digital Marketing Industry Update | Loop Digital (https://loop-digital.co.uk/marketing-insights-news/digital-marketing-update-june-2025)