Introduction

Mastering web scraping opens up significant opportunities for data collection, especially for tracking Amazon prices. This guide provides a structured, step-by-step approach to setting up a web scraping environment, gathering product URLs, and extracting essential price information. As the web continues to evolve, however, challenges such as website layout changes and data-compliance requirements arise. This raises an important question: how can you navigate these complexities while keeping your data collection ethical and efficient?

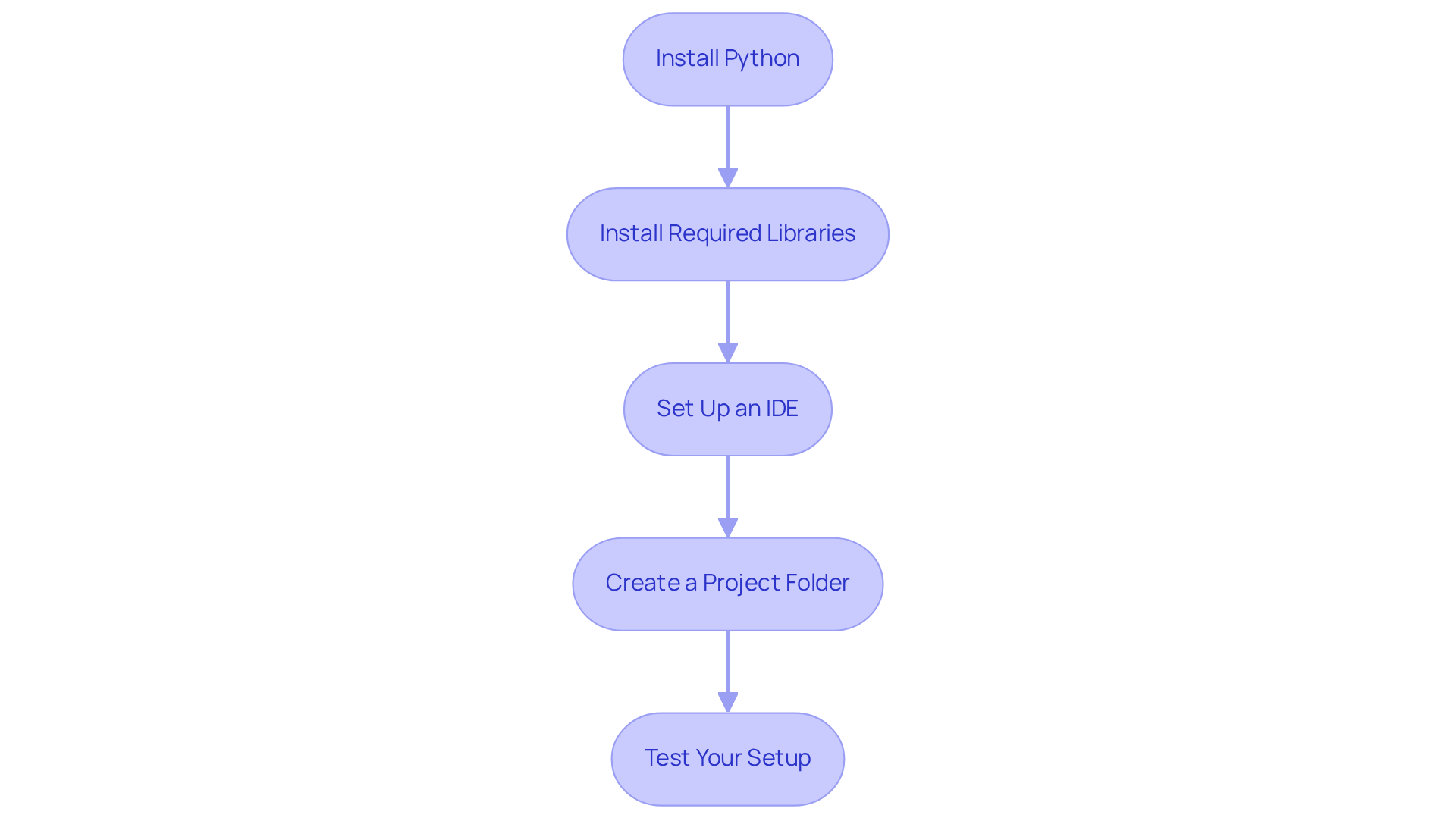

Set Up Your Web Scraping Environment

To begin scraping Amazon prices, it is essential to establish your web scraping environment. Follow these steps:

- Install Python: Ensure Python is installed on your machine. Download it from python.org.

- Install Required Libraries: Open your command line interface (CLI) and install the necessary libraries using pip:

```shell
pip install requests beautifulsoup4 pandas
```

Here, requests is used for making HTTP requests, beautifulsoup4 for parsing HTML and retrieving information, and pandas for manipulating and storing data.

- Set Up an IDE: Choose an Integrated Development Environment (IDE) such as PyCharm, VSCode, or Jupyter Notebook to write and execute your code.

- Create a Project Folder: Organise your files by creating a dedicated folder for your scraping project. This will help keep your scripts and data files structured.

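- Test Your Setup: Write a simple script that requests the Amazon homepage to confirm that everything is working. For example:

```python
import requests

response = requests.get('https://www.amazon.com/', timeout=10)
print(response.status_code)
```

If you receive a status code of 200, your setup is successful. Amazon may also answer automated requests with a 503 status or a CAPTCHA page; that still shows Python and requests are installed correctly, but it means the site has flagged the request as automated.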
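If you would rather confirm the installation without sending any request to Amazon, a minimal sketch like the following checks that the three libraries can be imported. The helper name missing_modules is hypothetical. Note that the beautifulsoup4 package is imported under the module name bs4, which is why the mapping lists both names.

```python
import importlib.util

# pip package name -> module name used in import statements
REQUIRED = {
    "requests": "requests",
    "beautifulsoup4": "bs4",
    "pandas": "pandas",
}


def missing_modules(required):
    """Return the pip names of packages whose modules cannot be found."""
    return [
        pip_name
        for pip_name, module in required.items()
        if importlib.util.find_spec(module) is None
    ]


if __name__ == "__main__":
    missing = missing_modules(REQUIRED)
    if missing:
        print("Install with: pip install " + " ".join(missing))
    else:
        print("All scraping libraries are installed.")
```

Because find_spec only locates the module without importing it, the check is quick and has no side effects.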

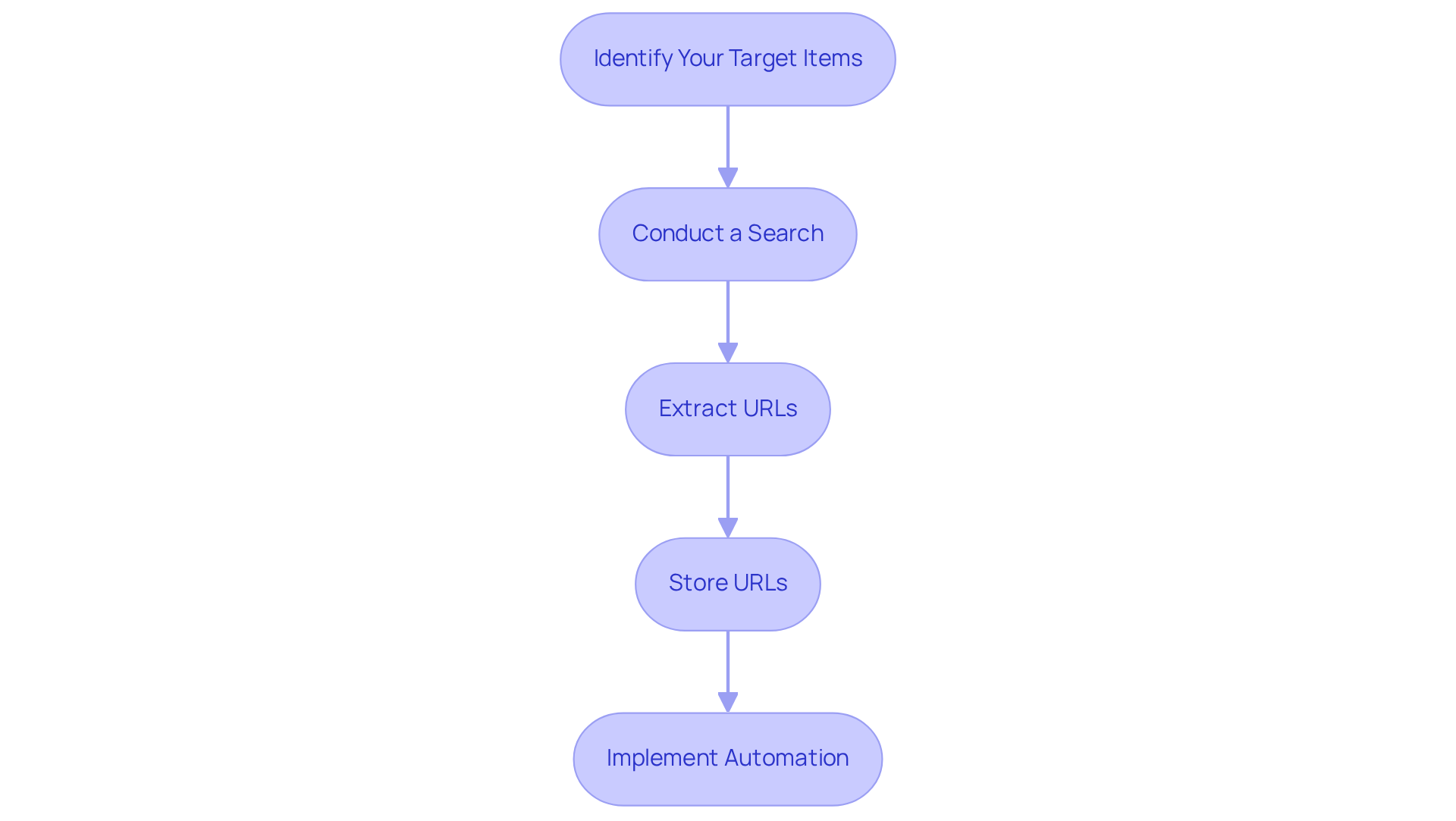

Collect Product URLs for Scraping

Once your environment is established, the next step is to gather the URLs you wish to scrape. Here’s how:

- Identify Your Target Items: Decide on the category or specific items you want to monitor. For example, if you are interested in electronics, you can begin scraping Amazon prices in the electronics section.

- Conduct a Search: Use the Amazon search bar to locate items. For instance, search for 'laptops'.

- Extract URLs: Automate URL extraction with a script rather than copying links by hand, which saves time and effort. Here’s a simple example using BeautifulSoup:

```python
from urllib.parse import urljoin

import requests
from bs4 import BeautifulSoup

search_url = 'https://www.amazon.com/s?k=laptops'
headers = {'User-Agent': 'Mozilla/5.0'}  # Amazon often rejects the default requests User-Agent
response = requests.get(search_url, headers=headers, timeout=10)
soup = BeautifulSoup(response.content, 'html.parser')

# Links on the results page are relative; resolve them and keep only product pages ('/dp/').
links = (urljoin(search_url, a['href']) for a in soup.find_all('a', class_='a-link-normal', href=True))
product_links = list(dict.fromkeys(link for link in links if '/dp/' in link))  # de-duplicate, keep order
print(product_links)
```

This script fetches the search results page and extracts the product links, streamlining the data collection process. Recognising item URLs correctly is an essential part of your extraction approach, because the class a-link-normal also matches many links that are not products.

- Store URLs: Save the gathered URLs in a list or a CSV file for easy access during the data collection process. A well-optimised session for scraping Amazon prices can yield hundreds of product URLs, so managing them efficiently reduces the time you later spend processing and cleaning data.
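Search-result links usually carry tracking segments such as /ref=... and query strings, so the same product can appear under several URLs. One way to keep the stored list clean, sketched below under the assumption that product pages follow the /dp/<ASIN> pattern with a 10-character alphanumeric ASIN, is to reduce every link (relative or absolute) to https://www.amazon.com/dp/<ASIN> and drop duplicates before writing the CSV. The helper names, the product_urls.csv filename and the example links are illustrative.

```python
import csv
import re

# Assumption: product pages contain '/dp/' followed by a 10-character ASIN.
ASIN_PATTERN = re.compile(r"/dp/([A-Z0-9]{10})")


def canonical_product_url(url):
    """Return https://www.amazon.com/dp/<ASIN>, or None if no ASIN is found."""
    match = ASIN_PATTERN.search(url)
    return f"https://www.amazon.com/dp/{match.group(1)}" if match else None


def save_product_urls(urls, path):
    """Write unique canonical product URLs to a one-column CSV and return them."""
    canonical = []
    for url in urls:
        clean = canonical_product_url(url)
        if clean and clean not in canonical:  # skip non-product and duplicate links
            canonical.append(clean)
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.writer(f)
        writer.writerow(["URL"])
        writer.writerows([u] for u in canonical)
    return canonical


if __name__ == "__main__":
    links = [
        "https://www.amazon.com/Example-Laptop/dp/B0EXAMPLE1/ref=sr_1_1?keywords=laptops",
        "https://www.amazon.com/dp/B0EXAMPLE1",
        "https://www.amazon.com/gp/help/customer/display.html",
    ]
    print(save_product_urls(links, "product_urls.csv"))
```

Storing one canonical URL per product also means later price snapshots can be joined on the same key.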

Automating URL extraction not only saves time but also improves the precision of your data collection. Effective monitoring strategies hinge on the ability to gather and analyse data efficiently, which makes automation a key component of successful e-commerce approaches.

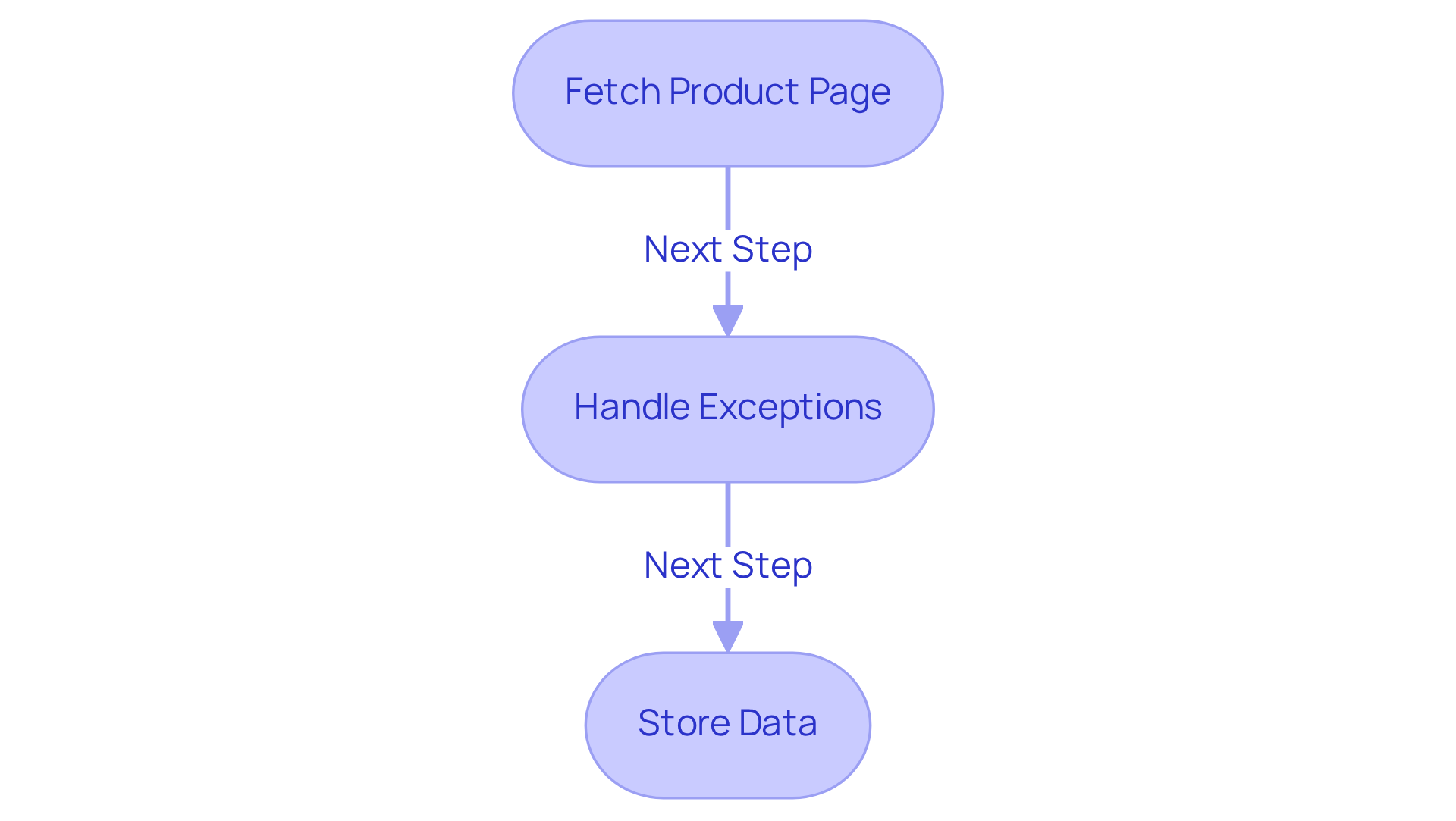

Scrape Prices from Collected URLs

To scrape prices from your collected product URLs, follow these structured steps:

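- Iterate through the URLs to fetch each product page and extract its price, the core step in scraping Amazon prices. Here’s an example:

```python
import pandas as pd
import requests
from bs4 import BeautifulSoup

headers = {'User-Agent': 'Mozilla/5.0'}  # Amazon often rejects the default requests User-Agent
prices = []
for url in product_links:  # product_links comes from the URL-collection step
    response = requests.get(url, headers=headers, timeout=10)
    soup = BeautifulSoup(response.content, 'html.parser')
    # Whole-number part of the displayed price; raises AttributeError if the span is missing
    price = soup.find('span', class_='a-price-whole').text
    prices.append(price)
```

- Handle Exceptions: Implement robust error handling to manage potential issues during scraping, such as missing elements or connection errors. Inside the loop, the fetch-and-parse lines can be wrapped like this:

```python
try:
    response = requests.get(url, headers=headers, timeout=10)
    response.raise_for_status()  # treat HTTP errors such as 503 as failures
    soup = BeautifulSoup(response.content, 'html.parser')
    price = soup.find('span', class_='a-price-whole').text
except (requests.RequestException, AttributeError):  # request failed, or no price element
    price = 'N/A'
```

- Store the Data: After scraping the prices, organise and store them in a structured format, such as a DataFrame:

```python
df = pd.DataFrame({'URL': product_links, 'Price': prices})
df.to_csv('amazon_prices.csv', index=False)
```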

This process saves your scraped data to a CSV file for further analysis.
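The Price column produced above holds text, including 'N/A' placeholders from the exception handler, so you will probably want numbers before analysing it. The sketch below is one way to convert those strings; price_to_float is a hypothetical helper, and it assumes US-style formatting (commas for thousands, a dot for decimals). As its class name suggests, a-price-whole appears to cover only the whole-number part of the price, so cents are not captured by that selector.

```python
import re


def price_to_float(text):
    """Convert a scraped price string to a float, or return None.

    Assumes US-style formatting: ',' separates thousands and '.' marks decimals.
    Placeholders such as 'N/A' become None.
    """
    # Keep only ASCII digits and dots (drops currency symbols, spaces and commas),
    # then remove a trailing dot such as one that may follow the whole-number part.
    cleaned = re.sub(r"[^0-9.]", "", text).rstrip(".")
    if not cleaned or cleaned.count(".") > 1:
        return None  # nothing numeric left, or an ambiguous format
    return float(cleaned)


if __name__ == "__main__":
    scraped = ["1,299.", "$849.99", "N/A"]
    print([price_to_float(p) for p in scraped])  # [1299.0, 849.99, None]
```

Applied to the DataFrame before saving, this would look like df['Price'] = df['Price'].map(price_to_float).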

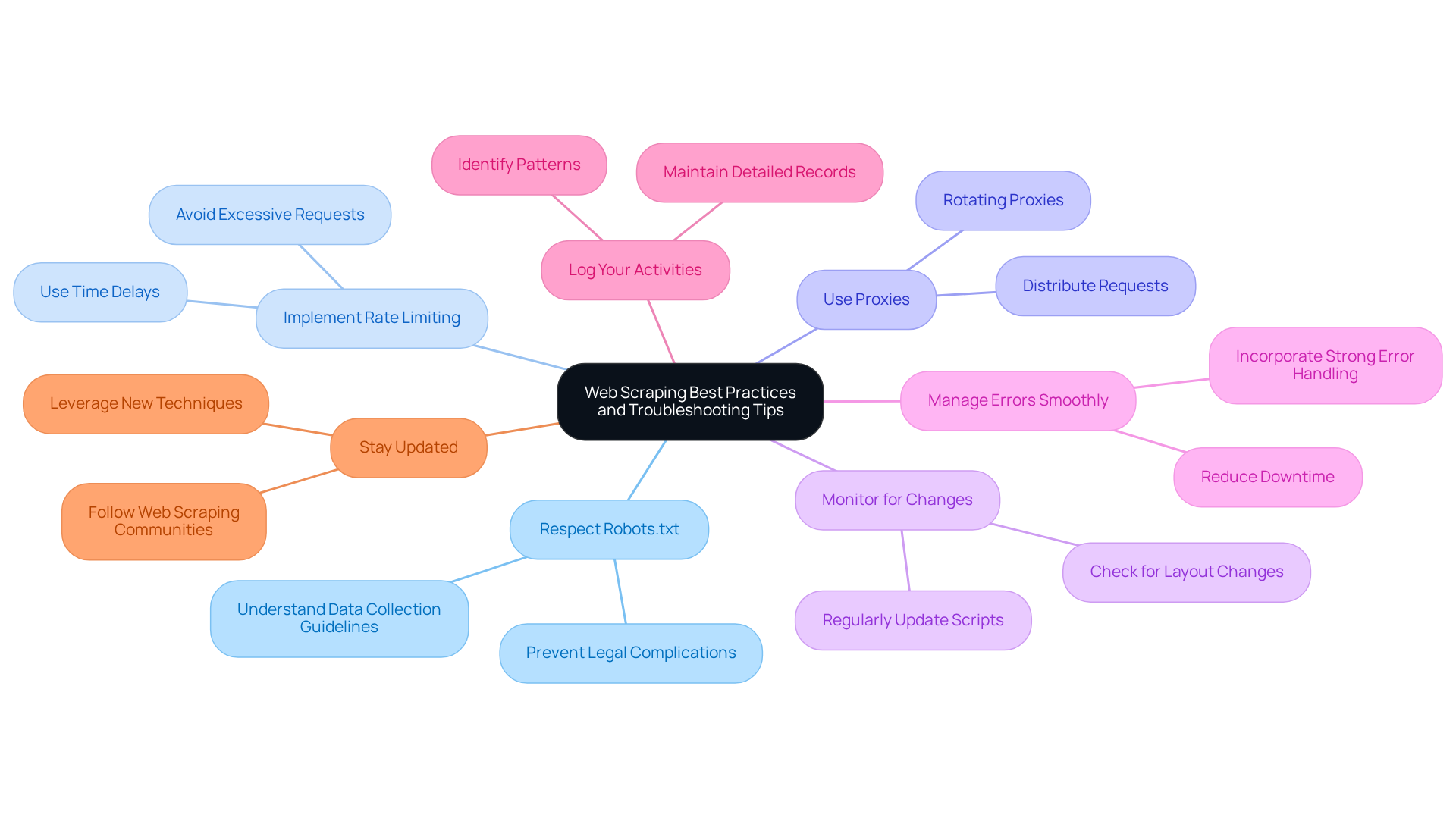

Implement Best Practices and Troubleshooting Tips

To ensure successful and sustainable web scraping, consider the following best practices and troubleshooting tips:

- Respect robots.txt: Always review the website’s robots.txt file to understand its crawling rules and reduce the risk of legal complications. Adhering to these rules is crucial for maintaining compliance and ethical standards in data collection.

- Implement Rate Limiting: Avoid sending too many requests in a short period, or you risk getting blocked. Effective rate limiting is essential; as one expert noted, "Your goal isn’t zero errors: it’s fast containment." Add time delays between requests to manage traffic:

```python
import time

time.sleep(2)  # pause for 2 seconds before the next request
```

- Use Proxies: To avoid IP bans, consider using rotating proxies, such as those offered by Appstractor, to distribute your requests across multiple IP addresses. In 2026, a significant share of web scrapers use proxies to improve their data extraction and reduce the risk of detection. Appstractor’s rotating proxy servers can become operational within 24 hours, offering a swift way to enhance your data collection efforts.

- Monitor for Changes: Websites often refresh their layouts, which can break data extraction scripts. Regularly check and update your scraping scripts to ensure they function correctly. As one expert pointed out, "What worked last week might fail tomorrow with no visible clue."

- Manage Errors Smoothly: Incorporate strong error handling in your scripts to address unforeseen problems, such as connection issues or missing information. This proactive approach can significantly reduce downtime and improve data reliability.

- Log Your Activities: Maintain a detailed record of your data collection efforts to monitor successes and failures. This practice aids in troubleshooting and helps identify patterns that may indicate underlying issues.

- Stay Updated: Follow web scraping communities and resources to stay informed about the latest techniques and challenges. Engaging with these communities can provide valuable insights and strategies for overcoming common obstacles. Additionally, consider Appstractor’s full-service options for turnkey data delivery, which can streamline data extraction tasks such as scraping Amazon prices. For practical guidance, refer to Appstractor’s user manuals and FAQs.
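The robots.txt, rate-limiting and logging tips above can be combined into one small helper. The sketch below is an illustration rather than a complete crawler: PoliteScheduler is a hypothetical class, and its 2-second default simply mirrors the time.sleep(2) example. It uses the standard library’s urllib.robotparser to decide whether a URL may be fetched, honours a Crawl-delay directive if the file declares a longer one, and logs each decision. It expects the robots.txt content as a list of lines, which you could obtain with requests.get('https://www.amazon.com/robots.txt', timeout=10).text.splitlines().

```python
import logging
import time
from urllib.robotparser import RobotFileParser

logging.basicConfig(level=logging.INFO, format="%(asctime)s %(levelname)s %(message)s")
log = logging.getLogger("scraper")


class PoliteScheduler:
    """Hypothetical helper: robots.txt checks plus a minimum gap between requests."""

    def __init__(self, robots_lines, user_agent="*", min_delay=2.0,
                 clock=time.monotonic, sleep=time.sleep):
        self.parser = RobotFileParser()
        self.parser.parse(robots_lines)  # robots.txt content as a list of lines
        self.user_agent = user_agent
        # Respect a Crawl-delay directive if it asks for more than min_delay.
        self.min_delay = max(min_delay, self.parser.crawl_delay(user_agent) or 0)
        self.clock = clock
        self.sleep = sleep
        self.last_request = None

    def allowed(self, url):
        """Return True if robots.txt permits user_agent to fetch url."""
        ok = self.parser.can_fetch(self.user_agent, url)
        log.info("robots.txt %s %s", "allows" if ok else "disallows", url)
        return ok

    def wait(self):
        """Sleep just long enough to keep min_delay seconds between requests."""
        now = self.clock()
        if self.last_request is not None:
            remaining = self.min_delay - (now - self.last_request)
            if remaining > 0:
                log.info("rate limit: sleeping %.2f s", remaining)
                self.sleep(remaining)
                now = self.clock()
        self.last_request = now


if __name__ == "__main__":
    robots = ["User-agent: *", "Disallow: /private", "Crawl-delay: 1"]
    scheduler = PoliteScheduler(robots, min_delay=0.5)
    for url in ["https://www.example.com/item/1", "https://www.example.com/private/2"]:
        if scheduler.allowed(url):
            scheduler.wait()
            # A real scraper would call requests.get(url, headers=..., timeout=10) here.
```

The clock and sleep parameters exist only so the timing can be tested without real waiting; in normal use, keep the defaults and call wait() immediately before each request.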

Conclusion

Mastering the art of scraping Amazon prices is a crucial skill that empowers users to gather valuable data for informed decision-making in e-commerce. By adhering to the structured steps outlined in this guide, individuals can effectively set up their web scraping environment, efficiently collect product URLs, and seamlessly extract pricing information. This comprehensive approach not only simplifies the data collection process but also enhances the accuracy and reliability of the information gathered.

Key insights from this article include the importance of a well-organised setup, the use of automation for URL extraction, and the implementation of best practices to ensure compliance and efficiency. These strategies include:

- Respecting website guidelines

- Employing robust error handling techniques

These are essential for successful scraping endeavours. Additionally, staying updated with the latest trends and tools in web scraping can significantly improve the effectiveness of data collection efforts.

Ultimately, the ability to scrape Amazon prices effectively opens doors to informed business strategies and competitive advantages. Embracing these methods and best practices will empower individuals and businesses alike to harness the power of data, drive insights, and make more strategic decisions in the ever-evolving e-commerce landscape. Engaging with the web scraping community and leveraging advanced tools can further enhance these capabilities, ensuring a successful and sustainable approach to data extraction.

Frequently Asked Questions

What is the first step to set up a web scraping environment for Amazon prices?

The first step is to install Python on your machine, which can be downloaded from python.org.

What libraries are required for web scraping Amazon prices?

The required libraries are requests, beautifulsoup4, and pandas. You can install them using the command: pip install requests beautifulsoup4 pandas.

What is the purpose of the requests library?

The requests library is used for making HTTP requests.

How does the beautifulsoup4 library assist in web scraping?

The beautifulsoup4 library is used for parsing HTML and retrieving information from web pages.

What role does the pandas library play in web scraping?

The pandas library is useful for manipulation and storage of data.

What is an Integrated Development Environment (IDE) and why is it needed?

An IDE, such as PyCharm, VSCode, or Jupyter Notebook, is needed to write and execute your web scraping code.

Why should you create a project folder for web scraping?

Creating a project folder helps organise your files and keeps your scripts and data files structured.

How can you test if your web scraping setup is successful?

You can test your setup by writing a simple script that requests the Amazon homepage. For example:

```python
import requests

response = requests.get('https://www.amazon.com/', timeout=10)
print(response.status_code)
```

If you receive a status code of 200, your setup is successful. A 503 status may mean that Python works but Amazon has flagged the request as automated.

List of Sources

1. Set Up Your Web Scraping Environment

- 2026 Web Scraping Industry Report - PDF (https://zyte.com/whitepaper-ebook/2026-web-scraping-industry-report)

- Web Scraping Report 2026: Market Trends, Growth & Key Insights (https://promptcloud.com/blog/state-of-web-scraping-2025-report)

- Web Scraping News Articles with Python (2026 Guide) (https://capsolver.com/blog/web-scraping/web-scraping-news)

- Web Scraping Statistics & Trends You Need to Know in 2025 (https://kanhasoft.com/blog/web-scraping-statistics-trends-you-need-to-know-in-2025)

- Scrapfly (https://scrapfly.io/use-case/media-and-news-web-scraping)

2. Collect Product URLs for Scraping

- Amazon Product Data Extraction Case Study (https://browseract.com/blog/case-study-amazon-product-data-extraction)

- Target Web Scraping for Product Data – Complete Guide (https://actowizsolutions.com/target-web-scraping-product-data.php)

- Use of scanner data and webscraping in price statistics (https://statistik.at/en/about-us/innovations-new-data-sources/use-of-scanner-data-and-webscraping-in-price-statistics)

- Amazon tests listing sites that sell products it doesn’t (https://independent.co.uk/news/world/americas/amazon-products-data-scraping-b2896495.html)

3. Scrape Prices from Collected URLs

- 20 Ecommerce Quotes From Industry Experts (https://whidegroup.com/blog/inspirational-ecommerce-quotes)

- Amazon's AI shopping tool sparks backlash from online retailers that didn't want websites scraped (https://cnbc.com/2026/01/06/amazons-ai-shopping-tool-sparks-backlash-from-some-online-retailers.html)

- The Global Growth of Web Scraping Industry (2014–2024) (https://browsercat.com/post/web-scraping-industry-growth-2014-2024)

- Web Scraping Statistics & Trends You Need to Know in 2025 (https://kanhasoft.com/blog/web-scraping-statistics-trends-you-need-to-know-in-2025)

- Web Scraping Market Size, Growth Report, Share & Trends 2025 - 2030 (https://mordorintelligence.com/industry-reports/web-scraping-market)

4. Implement Best Practices and Troubleshooting Tips

- News companies are doubling down to fight against AI Web scrapers (https://inma.org/blogs/Product-and-Tech/post.cfm/news-companies-are-doubling-down-to-fight-against-ai-web-scrapers)

- How to Fix Web Scraping Errors: 2026 Complete Troubleshooting Guide (https://promptcloud.com/blog/how-to-fix-web-scraping-errors-2026)

- State of Web Scraping 2026: Trends, Challenges & What’s Next (https://browserless.io/blog/state-of-web-scraping-2026)

- IAB Releases Draft Legislation Addressing AI Scraping (https://iab.com/news/iab-releases-draft-legislation-to-address-ai-content-scraping-and-protect-publishers)

- Top Web Scraping Challenges in 2026 (https://scrapingbee.com/blog/web-scraping-challenges)