Introduction

Web scraping has become an essential technique for businesses aiming to leverage the vast information available online. By automating data collection from various websites, organisations can obtain critical insights that inform strategic decisions across sectors such as market research and e-commerce.

As the demand for effective web data extraction increases, so do the complexities associated with its implementation, including ethical and legal considerations. Businesses must navigate these challenges carefully to maximise the advantages of web scraping in a competitive landscape.

Define Web Scraping: Core Concepts and Importance

Web scraping, also called web harvesting, is an automated method for retrieving information from websites: software tools or scripts collect data from web pages and organise it into usable formats, such as spreadsheets or databases, ready for analysis. Its significance lies in enabling large-scale data collection, so businesses can quickly gain insights from vast amounts of online information. By 2026, web data extraction has become crucial for applications including market research, price monitoring, and social media analysis, establishing itself as an essential skill for data-driven decision-making.

Recent trends indicate that businesses are increasingly leveraging web data extraction to adapt to dynamic market conditions. For example, a global B2B SaaS company improved its lead generation by automating data extraction, resulting in a 40% increase in qualified leads and faster sales cycles. This reflects a broader shift where companies are using web extraction to monitor competitors’ pricing and promotional strategies in real time, a necessity in today’s competitive landscape where 44% of consumers are spending more time comparing prices online.

The web data extraction market is projected to expand significantly, with estimates indicating a compound annual growth rate (CAGR) between 11.9% and 18.7%, potentially reaching between $363 million and $3.52 billion by 2037. This growth is driven by the increasing demand for timely and relevant information, particularly in sectors like retail and e-commerce, where dynamic pricing strategies are essential for retaining value-focused customers. As Scott Vahey notes, "AI is set to transform the environment," suggesting that advancements in artificial intelligence will further enhance the capabilities of web extraction tools, enabling companies to make more informed decisions based on real-time data.

With the introduction of Appstractor's MobileHorizons API, digital marketers can now access hyper-local insights by extracting and analysing tailored information from native mobile apps. This API provides valuable data, including native app display ads, shopping intelligence, and comparison pricing, which are crucial for understanding user intent and refining marketing strategies. Furthermore, Appstractor ensures that these advanced information-gathering solutions comply with GDPR regulations, making it a reliable option for professionals navigating the complexities of extraction in today’s digital landscape.

In summary, web scraping not only streamlines information collection but also empowers businesses to remain agile in a rapidly changing market, making it a vital component of contemporary information strategies.

Explore Types of Web Scrapers: Tools and Technologies

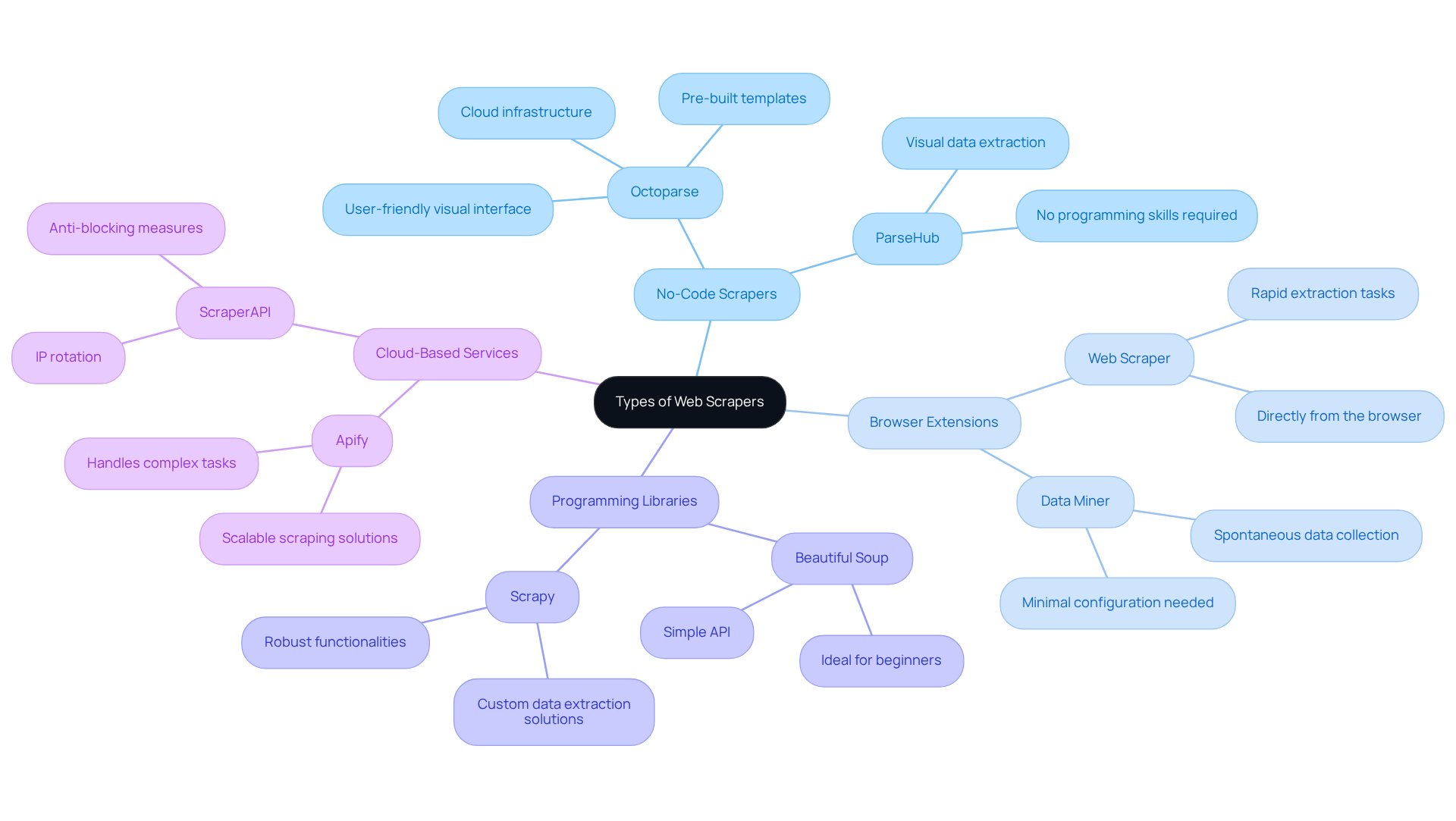

Web scrapers come in various forms, each tailored to specific use cases and user expertise levels:

- No-Code Scrapers: Tools like Octoparse and ParseHub empower users to extract data without any programming skills. These platforms feature user-friendly visual interfaces that simplify the selection of elements on web pages, making information collection accessible to non-technical users.

- Browser Extensions: Extensions such as Web Scraper and Data Miner facilitate rapid extraction tasks directly from the browser. These tools are ideal for individuals who need to collect information spontaneously without extensive configuration.

- Programming Libraries: For those with coding expertise, libraries like Beautiful Soup and Scrapy in Python offer robust functionalities for developing custom data extraction solutions. These tools provide flexibility and control, enabling developers to tailor their data extraction processes to meet specific project requirements.

- Cloud-Based Services: Platforms like Apify and ScraperAPI deliver scalable scraping solutions capable of handling complex tasks, including IP rotation and anti-blocking measures. These services are particularly suitable for large-scale projects that require reliable information extraction.

By understanding these diverse options, users can select the most appropriate tool based on their technical skills and project needs, ensuring efficient and effective information gathering.

Understand Web Scraping Mechanics: Processes and Techniques

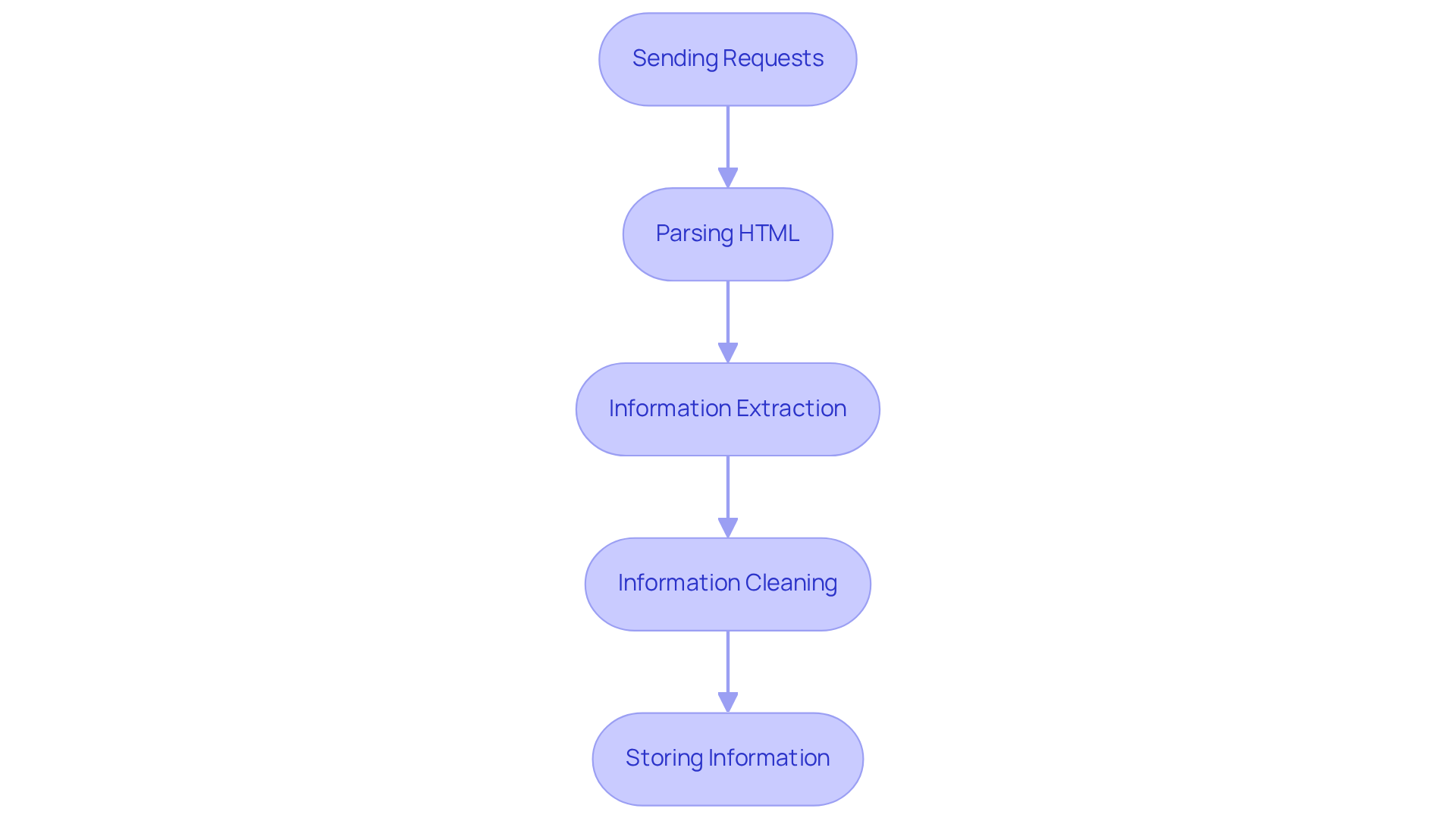

Web scraping involves several essential processes crucial for effective data extraction:

- Sending Requests: The scraper begins by sending HTTP requests to the target website, retrieving the HTML content of the desired page.

- Parsing HTML: Once the HTML is received, it is parsed into a structure that can be navigated. Libraries such as Beautiful Soup and lxml are commonly used to traverse that structure.

- Information Extraction: Specific data points are located using selectors, such as CSS selectors or XPath expressions, which isolate the required details.

- Information Cleaning: The extracted information often requires thorough cleaning and normalisation to ensure consistency and usability. This may involve removing duplicates, handling missing values, and converting values to suitable types.

- Storing Information: Finally, the cleaned information is saved in a structured format, such as CSV files or databases, facilitating further analysis.
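
The parsing and extraction steps above can be sketched with Python's standard library alone. This is a minimal illustration rather than a production scraper: the HTML snippet, the `product`, `name`, and `price` class names, and the `ProductParser` class are all hypothetical, and the built-in `html.parser` module stands in for Beautiful Soup or lxml so that the example has no third-party dependencies. The request step is replaced by an inline string so that nothing is fetched over the network.

```python
from html.parser import HTMLParser

# Hypothetical page content; a real scraper would obtain this from an HTTP response.
SAMPLE_HTML = """
<ul>
  <li class="product"><span class="name">Widget</span><span class="price">$9.99</span></li>
  <li class="product"><span class="name">Gadget</span><span class="price">$24.50</span></li>
</ul>
"""


class ProductParser(HTMLParser):
    """Collect the text of the name and price spans inside each product item."""

    def __init__(self):
        super().__init__()
        self.products = []    # finished records
        self._current = None  # record being built, or None outside a product
        self._field = None    # field whose text is currently being read

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if tag == "li" and "product" in classes:
            self._current = {}
        elif tag == "span" and self._current is not None:
            for field in ("name", "price"):
                if field in classes:
                    self._field = field

    def handle_data(self, data):
        if self._current is not None and self._field:
            self._current[self._field] = data.strip()

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None
        elif tag == "li" and self._current is not None:
            self.products.append(self._current)
            self._current = None


def extract_products(html):
    """Parse an HTML string and return a list of product dictionaries."""
    parser = ProductParser()
    parser.feed(html)
    return parser.products


products = extract_products(SAMPLE_HTML)
```

With a library such as Beautiful Soup, the same extraction would typically be expressed as a CSS selector query instead of a hand-written event handler, which is usually easier to maintain.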

Mastering these mechanics is essential for implementing effective web extraction projects and overcoming common challenges. By leveraging advanced techniques and tools, practitioners can significantly enhance their information extraction capabilities.
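
The cleaning and storing steps described above might look like the following sketch. The raw records, the field names, and the `clean_records` and `to_csv` helpers are hypothetical; the example assumes prices arrive as strings with a dollar sign and optional thousands separators, and it writes CSV to an in-memory buffer rather than a file.

```python
import csv
import io

# Hypothetical raw records, as they might come out of the extraction step.
RAW_RECORDS = [
    {"name": " Widget ", "price": "$9.99"},
    {"name": "Gadget", "price": "$1,024.50"},
    {"name": "Widget", "price": "$9.99"},  # duplicate once whitespace is trimmed
    {"name": "Gizmo", "price": ""},        # missing price
]


def clean_records(records):
    """Trim text, convert prices to floats, keep missing prices as None, drop duplicates."""
    seen = set()
    cleaned = []
    for record in records:
        name = record.get("name", "").strip()
        raw_price = record.get("price", "").replace("$", "").replace(",", "").strip()
        price = float(raw_price) if raw_price else None
        key = (name, price)
        if not name or key in seen:
            continue
        seen.add(key)
        cleaned.append({"name": name, "price": price})
    return cleaned


def to_csv(records):
    """Serialise records to CSV text; None is written as an empty cell."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["name", "price"], lineterminator="\n")
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


cleaned = clean_records(RAW_RECORDS)
csv_text = to_csv(cleaned)
```

Keeping a missing price as `None` instead of dropping the row is a design choice: it preserves the record for later review, at the cost of requiring downstream code to handle empty values.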

Implement Web Scraping Techniques: Overcoming Challenges

When implementing web scraping techniques, several challenges may arise:

- IP Blocking: Websites often employ measures to block scrapers, making it essential to use rotating proxies. This strategy distributes requests across multiple IP addresses, significantly reducing the risk of detection. A Marketing Director at Koozai noted that using trusted proxies allowed them to manage a vast number of keywords and gain extensive monitoring insights, contributing to larger SEO projects.

- CAPTCHA: Many sites implement CAPTCHAs to prevent automated access. Solutions include using CAPTCHA-solving services or browser automation tools such as Selenium or Puppeteer, which drive a real (often headless) browser and can interact with the page as a human would. An Internet Marketer from Israel commended the exceptional service of reliable proxies, emphasising their efficiency in handling information collection tasks.

- Dynamic Content: Certain websites load information dynamically via JavaScript, so it is absent from the initial HTML that conventional extraction methods retrieve. Tools such as Selenium or Puppeteer render the page completely, allowing scrapers to extract the required information. A CEO from eData Web Development shared that using trusted proxies made their reports run four times faster and more accurately, showcasing the efficiency gained through reliable proxy services.

- Rate Limiting: To avoid being flagged as a bot, implement rate limiting by spacing out requests and mimicking human browsing behaviour. Introducing random delays and unpredictable mouse movements can further enhance the stealth of data extraction operations.

- Data Structure Changes: Websites frequently update their layouts, which can break scrapers. Regularly monitoring and updating your extraction scripts is essential to adapt to these changes and maintain data integrity. Speedy Solutions highlighted the importance of dependable proxy IPs for accurate information delivery, which is vital when adapting to such changes.
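
Two of the measures above, rotating proxies and randomised delays, can be sketched in a few lines. This is an illustration under stated assumptions, not a complete scraper: the proxy addresses are placeholders from a documentation-only IP range, the URLs are examples, and `plan_requests` is a hypothetical helper that only builds a schedule without sending any requests.

```python
import itertools
import random

# Placeholder proxy addresses from the documentation-only 203.0.113.0/24 range.
PROXIES = [
    "http://203.0.113.10:8080",
    "http://203.0.113.11:8080",
    "http://203.0.113.12:8080",
]


def proxy_rotation(proxies):
    """Return an iterator that yields proxies in round-robin order, forever."""
    return itertools.cycle(proxies)


def jittered_delay(base_seconds=2.0, jitter_seconds=1.5, rng=random):
    """Return a delay between base and base + jitter so requests are not evenly spaced."""
    return base_seconds + rng.uniform(0.0, jitter_seconds)


def plan_requests(urls, proxies, rng=random):
    """Pair each URL with the next proxy and a randomised pause to apply before the request."""
    rotation = proxy_rotation(proxies)
    return [
        {"url": url, "proxy": next(rotation), "pause": jittered_delay(rng=rng)}
        for url in urls
    ]


urls = [f"https://example.com/page/{n}" for n in range(1, 6)]
plan = plan_requests(urls, PROXIES, rng=random.Random(0))
```

In a live scraper, each entry in `plan` would translate into sleeping for `pause` seconds and then sending the request through `proxy`; how the proxy is passed depends on the HTTP client in use.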

By understanding these challenges and their solutions, readers can strengthen their web data collection and extract data more reliably, using trusted proxies to improve SEO efficiency and data accuracy.
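
One way to notice layout changes early is to validate each scraped batch before storing it. The sketch below assumes records are dictionaries with `name` and `price` fields; the field names, the 20% threshold, and the `check_batch` helper are all hypothetical choices made for illustration.

```python
REQUIRED_FIELDS = ("name", "price")  # hypothetical fields the scraper is expected to produce


def missing_fields(record, required=REQUIRED_FIELDS):
    """Return the required fields that are absent or empty in a record."""
    return [field for field in required if not record.get(field)]


def check_batch(records, max_failure_rate=0.2):
    """Flag a batch as suspicious when too many records are incomplete.

    A sudden jump in incomplete records often means a selector no longer matches.
    """
    if not records:
        return {"ok": False, "failure_rate": 1.0, "reason": "no records extracted"}
    failures = sum(1 for record in records if missing_fields(record))
    rate = failures / len(records)
    ok = rate <= max_failure_rate
    return {
        "ok": ok,
        "failure_rate": rate,
        "reason": "" if ok else "too many incomplete records",
    }


healthy = check_batch([
    {"name": "Widget", "price": "$9.99"},
    {"name": "Gadget", "price": "$24.50"},
])
broken = check_batch([
    {"name": "Widget", "price": ""},
    {"name": "", "price": ""},
])
```

A check like this does not repair a broken selector, but it turns a silent failure into a visible one, which is usually the first step towards updating the script.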

Address Ethical and Legal Considerations in Web Scraping

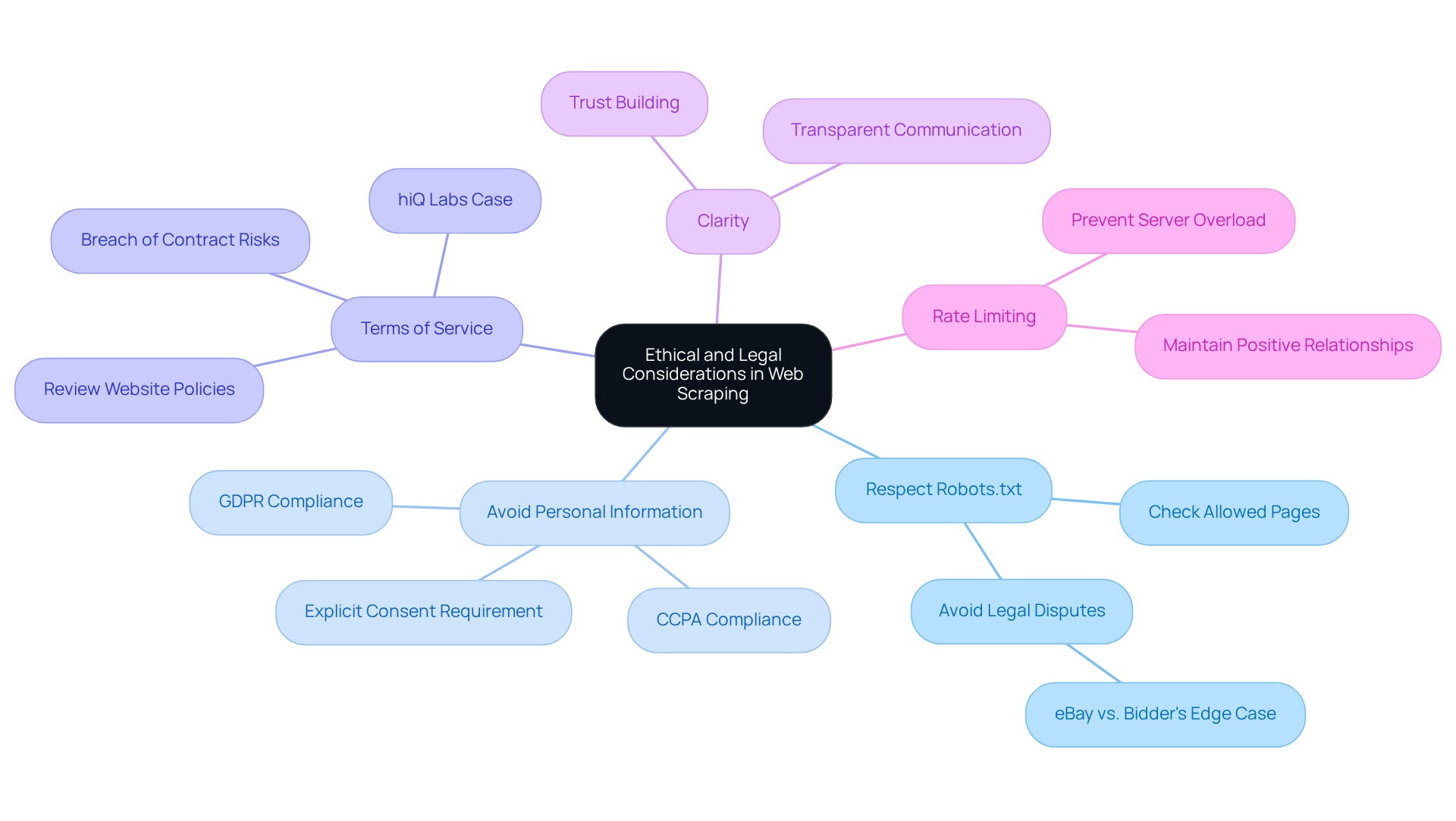

When engaging in web scraping, it is essential to consider the following ethical and legal aspects:

- Respect Robots.txt: Always check a website's robots.txt file to understand which pages may be scraped and which are off-limits. This practice aligns with ethical standards and helps avoid potential legal disputes, as seen in the eBay vs. Bidder's Edge case, where unauthorised scraping led to legal action.

- Avoid Personal Information: Scraping personal information without explicit consent can lead to significant legal consequences. Adherence to privacy protection regulations, such as the General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA), is essential. These laws require personal information to be processed lawfully, fairly, and transparently, so scrapers must ensure they have a legitimate basis for collection. Under the GDPR's purpose limitation principle, personal data may be collected only for specified, explicit, and legitimate purposes.

- Terms of Service: It is vital to review the website's terms of service to confirm that data extraction activities do not breach any contractual agreements. Breaching these terms can lead to legal action, including breach-of-contract claims. This risk is separate from liability under the Computer Fraud and Abuse Act (CFAA): the Ninth Circuit's decision in favour of hiQ held that harvesting publicly available information likely does not violate the CFAA, but that ruling does not protect a scraper from contract claims.

- Transparency: Being transparent about information collection practices is essential, particularly if the information will be published or used for commercial purposes. Clear communication about how information is used can build trust and reduce potential conflicts with information providers.

- Rate Limiting: Implementing rate limiting is important to avoid overwhelming the server, which can lead to service disruptions. This practice not only respects the website's resources but also helps preserve a positive relationship with the information source.
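
The robots.txt check and the rate-limiting guideline above can both be supported by Python's built-in `urllib.robotparser` module. The sketch below parses an inline robots.txt string instead of downloading one, so it makes no network request; the file contents, the URLs, and the `example-scraper` user agent name are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a live scraper would download it from the site root.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""

USER_AGENT = "example-scraper"  # hypothetical user agent name


def load_rules(robots_text):
    """Build a parser from robots.txt text without making a network request."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return parser


rules = load_rules(ROBOTS_TXT)

# Ask whether this user agent may fetch each URL under the parsed rules.
allowed_public = rules.can_fetch(USER_AGENT, "https://example.com/products/1")
allowed_private = rules.can_fetch(USER_AGENT, "https://example.com/private/report")

# The Crawl-delay value, if the site declares one, can serve as a minimum pause between requests.
delay = rules.crawl_delay(USER_AGENT)
```

Note that `Crawl-delay` is a non-standard directive that not every site declares; when it is absent, `crawl_delay` returns `None` and the scraper needs its own conservative default.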

By adhering to these ethical and legal guidelines, web scrapers can operate responsibly, minimising the risk of legal challenges while maximising the effectiveness of their data collection efforts.

Conclusion

Web scraping serves as an invaluable tool that significantly enhances data collection capabilities. It enables businesses to efficiently extract and analyse vast amounts of information from the web. By mastering various techniques, tools, and ethical considerations surrounding web scraping, organisations can leverage this skill to maintain competitiveness and stay informed in a rapidly evolving digital landscape.

The article underscores the significance of web scraping in modern data strategies, showcasing its applications across market research, pricing analysis, and competitive monitoring. It outlines the diverse types of web scrapers available, ranging from no-code solutions to advanced programming libraries, catering to users of all technical backgrounds. Furthermore, understanding the mechanics of web scraping - including the processes involved and the challenges faced - equips practitioners with the knowledge necessary to navigate potential obstacles effectively.

In conclusion, embracing web scraping not only fosters agility in data-driven decision-making but also necessitates a commitment to ethical practices. As the landscape of web scraping continues to evolve, staying informed about best practices and legal considerations will be crucial for responsible data extraction. By prioritising these aspects, professionals can harness the power of web scraping while maintaining integrity and respect for the digital environment.

Frequently Asked Questions

What is web scraping and why is it important?

Web scraping, or web harvesting, is an automated method for retrieving information from websites using software tools or scripts. It is important because it facilitates large-scale data collection, allowing businesses to gain insights from vast online information quickly, which is essential for data-driven decision-making.

What are some applications of web scraping?

Web scraping is used for various applications, including market research, price monitoring, and social media analysis. It has become crucial for businesses to adapt to dynamic market conditions and improve lead generation and sales cycles.

How has web scraping impacted businesses?

Businesses are increasingly leveraging web scraping to monitor competitor pricing and promotional strategies in real time, which is vital in today's competitive landscape. For instance, a global B2B SaaS company improved its lead generation by automating data extraction, resulting in a 40% increase in qualified leads.

What is the projected growth of the web data extraction market?

The web data extraction market is projected to expand significantly, with a compound annual growth rate (CAGR) between 11.9% and 18.7%, potentially reaching between $363 million and $3.52 billion by 2037, driven by the demand for timely and relevant information.

How is artificial intelligence influencing web scraping?

Advancements in artificial intelligence are expected to enhance the capabilities of web extraction tools, allowing companies to make more informed decisions based on real-time data.

What is Appstractor's MobileHorizons API?

Appstractor's MobileHorizons API provides digital marketers with hyper-local insights by extracting and analysing tailored information from native mobile apps, including display ads, shopping intelligence, and comparison pricing.

What are the different types of web scrapers available?

There are several types of web scrapers:

- No-Code Scrapers: Tools like Octoparse and ParseHub allow users to extract data without programming skills using visual interfaces.

- Browser Extensions: Extensions such as Web Scraper and Data Miner enable quick data extraction directly from the browser.

- Programming Libraries: Libraries like Beautiful Soup and Scrapy in Python offer robust functionalities for custom data extraction for users with coding expertise.

- Cloud-Based Services: Platforms like Apify and ScraperAPI provide scalable scraping solutions for complex tasks, including IP rotation and anti-blocking measures.

How can users choose the right web scraping tool?

Users can select the most appropriate web scraping tool based on their technical skills and project needs, ensuring efficient and effective information gathering.
