Introduction

Web scraping has become an essential tool for businesses aiming to leverage data in a fast-changing digital environment. This automated technique not only facilitates the efficient extraction of valuable insights from websites but also aids in strategic decision-making across diverse industries.

However, as organisations engage with web scraping, they frequently face challenges related to:

- Ethical practices

- Technical obstacles

- The necessity for effective data management

To navigate these complexities successfully, businesses must understand how to maximise the potential of web data extraction.

Understand Web Scraping: Definition and Importance

Web scraping, also called web harvesting or web data extraction, is an automated method for fetching web pages and extracting specific details from them. It enables the rapid collection of large amounts of data, which can then be structured for analysis. Its importance is underscored by its role in market research, competitor monitoring, and trend analysis. The global web data extraction market is expected to expand significantly, with projections suggesting it could exceed USD 2 billion by 2030, driven by the increasing demand for data-driven decision-making across various sectors.

Businesses that leverage web data extraction can gain valuable insights that inform strategic decisions. For example, e-commerce retailers utilise data extraction to monitor competitors' pricing, allowing them to dynamically adjust their strategies and maintain competitiveness. Similarly, travel agencies employ data extraction to track flight prices, ensuring they offer the best deals to customers. These practical applications illustrate how web data serves as a strategic asset, empowering organisations to identify trends and optimise operations.

Recent trends in web extraction technology indicate a shift towards AI-driven solutions and cloud-native environments, which enhance the efficiency and effectiveness of data collection processes. As companies increasingly prioritise compliance and ethical data practices, the focus is shifting from mere data extraction to fostering transparent relationships with data sources. By embracing these advancements, businesses can fully harness the potential of web data extraction, driving growth and innovation in their respective fields.

Set Up Your Environment: Tools and Configuration

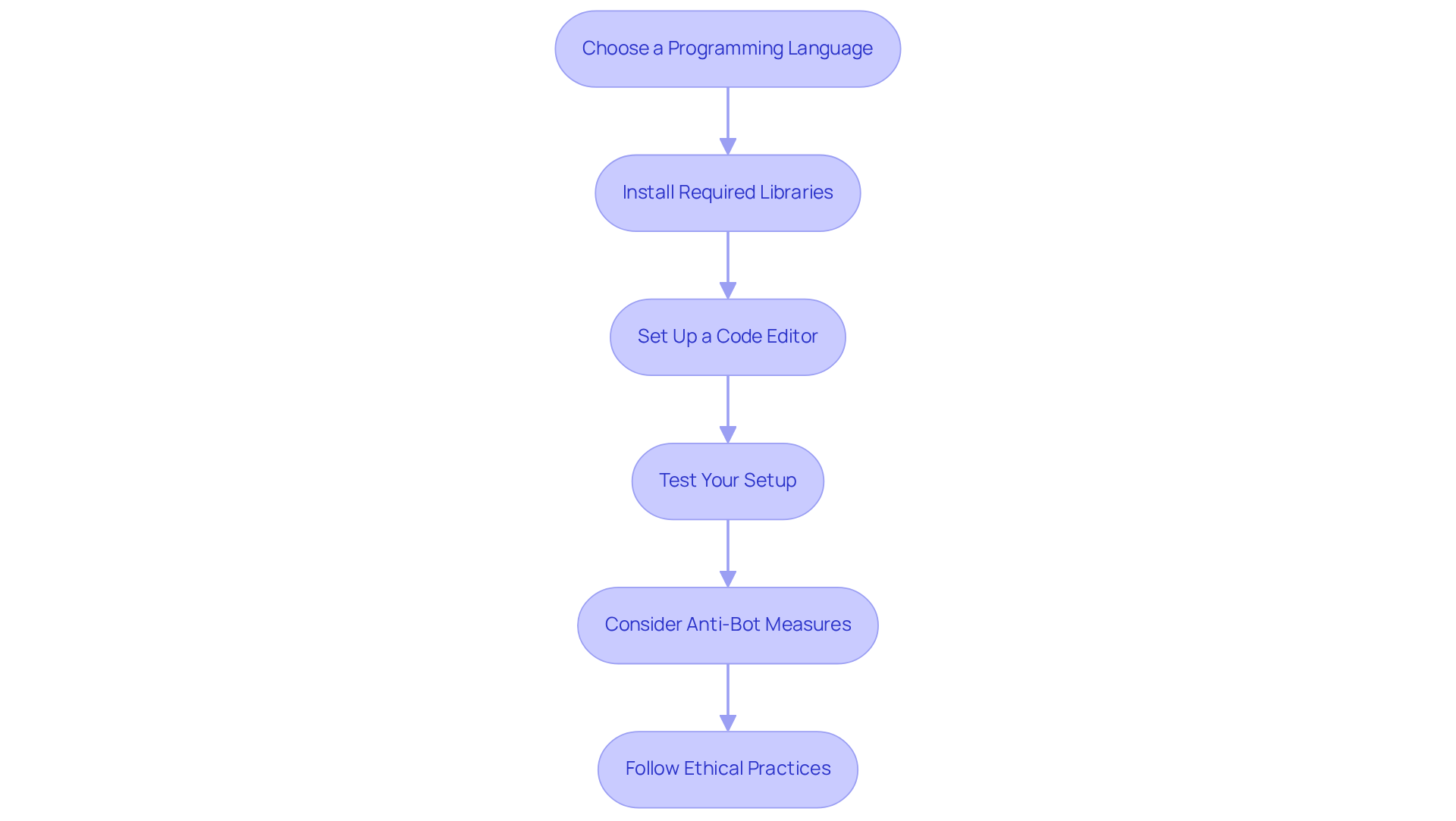

To start extracting web data, set up your environment with the right tools. Here's how to do it:

- Choose a Programming Language: Python is highly recommended due to its simplicity and the availability of powerful libraries like Beautiful Soup and Scrapy. In 2026, Python continues to be the most popular language for web data extraction, favoured for its extensive support and versatility. As Lucas Mitchell states, "Python is still the best all-around coding language for web scraping in 2026."

- Install Required Libraries: Use pip to install the necessary libraries. For example:

  ```
  pip install requests beautifulsoup4 scrapy lxml aiohttp
  ```

  These libraries provide tools for making HTTP requests and parsing HTML content. lxml is particularly noted for its speed and efficiency, while aiohttp handles asynchronous requests, allowing many pages to be fetched concurrently.

- Set Up a Code Editor: Utilise an IDE like PyCharm or a text editor like VS Code to write your scripts effectively.

- Test Your Setup: Write a simple script to fetch a webpage and print its content. This initial test will confirm that your environment is correctly configured and ready for more complex data extraction tasks.

- Consider Anti-Bot Measures: Be aware of challenges such as IP blocking and CAPTCHAs that may arise during data extraction. Tools like CapSolver can help manage these issues, keeping your data extraction process uninterrupted. Additionally, consider utilising Appstractor's rotating proxy servers to avoid IP bans and enhance your data collection efficiency. Their full-service option can also provide turnkey data delivery, streamlining the process.

- Follow Ethical Practices: Always adhere to responsible data collection practices, including respecting robots.txt rules and limiting request rates to avoid overwhelming servers.
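One way to confirm the libraries from the install step are actually present is to ask Python for their installed versions. The following is a minimal sketch using only the standard library; the helper names `installed_versions` and `missing_packages` are illustrative, not part of any tool mentioned in this guide.

```python
from importlib import metadata

# Distribution names exactly as they are passed to pip in the install step.
REQUIRED = ["requests", "beautifulsoup4", "scrapy", "lxml", "aiohttp"]


def installed_versions(packages):
    """Map each package name to its installed version, or None if missing."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


def missing_packages(packages):
    """Return the names that are not installed in this environment."""
    found = installed_versions(packages)
    return [name for name, version in found.items() if version is None]


if __name__ == "__main__":
    for name, version in installed_versions(REQUIRED).items():
        print(f"{name}: {version or 'NOT INSTALLED'}")
```

If any package is reported as not installed, rerunning the pip command in the same interpreter or virtual environment usually resolves it.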

By following these steps and utilising Appstractor's advanced information mining solutions, you will establish a solid foundation for your web scraping projects.
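The advice on respecting robots.txt and limiting request rates can be sketched with Python's standard `urllib.robotparser` module. This is an illustration under stated assumptions rather than a complete crawler: the user agent name `example-scraper`, the one-second default delay, and the `fetch` callable are all hypothetical placeholders, and the robots.txt text is passed in as a string so the sketch runs without network access.

```python
import time
from urllib.robotparser import RobotFileParser

USER_AGENT = "example-scraper"  # hypothetical bot name, replace with your own


def build_robot_rules(robots_txt):
    """Parse the text of a robots.txt file into a rule checker."""
    rules = RobotFileParser()
    rules.parse(robots_txt.splitlines())
    return rules


def allowed_urls(rules, urls, user_agent=USER_AGENT):
    """Keep only the URLs that robots.txt lets this user agent fetch."""
    return [url for url in urls if rules.can_fetch(user_agent, url)]


def polite_delay(rules, user_agent=USER_AGENT, default=1.0):
    """Seconds to wait between requests: the site's Crawl-delay, else a default."""
    delay = rules.crawl_delay(user_agent)
    return float(delay) if delay is not None else default


def crawl(rules, urls, fetch, sleep=time.sleep):
    """Fetch each permitted URL in turn, pausing between consecutive requests."""
    delay = polite_delay(rules)
    pages = []
    for index, url in enumerate(allowed_urls(rules, urls)):
        if index:
            sleep(delay)  # rate limit: wait before every request after the first
        pages.append(fetch(url))
    return pages
```

In practice, `fetch` could be a small function wrapping `requests.get`, and the robots.txt text would come from the target site's `/robots.txt` path.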

Scrape Data: Step-by-Step Extraction Techniques

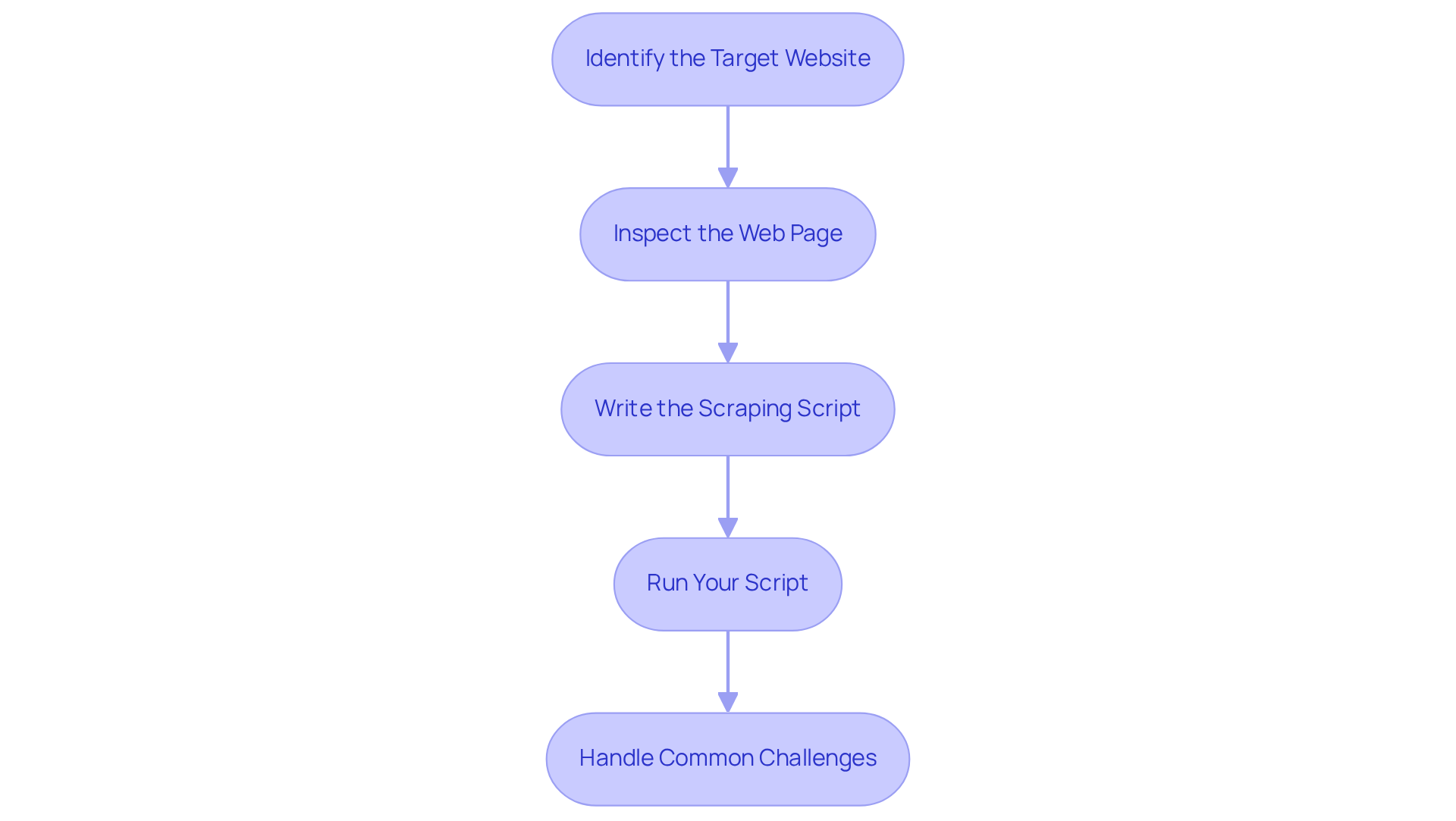

To successfully scrape data from a website, particularly in the realms of real estate and job market insights, follow these essential steps:

- Identify the Target Website: Select a website from which you wish to extract information. Before proceeding, ensure that scraping is permitted by reviewing the site's robots.txt file, which outlines the rules for automated access.

- Inspect the Web Page: Utilise your browser's developer tools to analyse the HTML structure of the page. This allows you to identify the specific elements that contain the information you wish to scrape. Understanding the layout is crucial, as many sites employ anti-scraping tactics that can complicate information extraction.

- Write the Scraping Script: Below is a basic example using Python, a popular choice among developers for web scraping due to its simplicity and powerful libraries:

  ```python
  import requests
  from bs4 import BeautifulSoup

  url = 'http://example.com'
  response = requests.get(url, timeout=10)  # avoid hanging on a slow server
  response.raise_for_status()               # stop early on HTTP errors

  # Parse the HTML and collect every <div> with the class 'data-class'
  soup = BeautifulSoup(response.text, 'html.parser')
  data = soup.find_all('div', class_='data-class')
  for item in data:
      print(item.text)
  ```

  This script fetches the HTML content of the specified URL and extracts text from all div elements with the class 'data-class'.

- Run Your Script: Execute your script to retrieve and display the information. Be prepared to make adjustments based on the output, as websites frequently change their structures. For instance, if the layout changes, your selectors will need updating for the script to keep working.
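Because layout changes often show up as an extraction that silently returns nothing, it can help to separate parsing from fetching and to warn when no elements match. The sketch below is one possible approach, not the method used in the script above: it swaps Beautiful Soup for the standard library's `html.parser` so it runs without extra installs, and the names `DivTextExtractor` and `extract_items` are illustrative.

```python
from html.parser import HTMLParser


class DivTextExtractor(HTMLParser):
    """Collect the text of every <div> whose class list contains target_class."""

    def __init__(self, target_class):
        super().__init__()
        self.target_class = target_class
        self.depth = 0      # nesting depth inside a matching <div>
        self.current = []   # text fragments of the div being read
        self.results = []   # finished text of each matching div

    def handle_starttag(self, tag, attrs):
        if tag != "div":
            return
        classes = (dict(attrs).get("class") or "").split()
        if self.depth > 0:
            self.depth += 1  # a nested div inside a match
        elif self.target_class in classes:
            self.depth = 1
            self.current = []

    def handle_endtag(self, tag):
        if tag == "div" and self.depth > 0:
            self.depth -= 1
            if self.depth == 0:
                self.results.append("".join(self.current).strip())

    def handle_data(self, data):
        if self.depth > 0:
            self.current.append(data)


def extract_items(html, target_class="data-class"):
    """Return the text of matching divs, warning when the selector finds nothing."""
    parser = DivTextExtractor(target_class)
    parser.feed(html)
    parser.close()
    if not parser.results:
        # An empty result often means the page layout or class names changed.
        print(f"warning: no <div class='{target_class}'> found; check the selector")
    return parser.results
```

Keeping the parsing step as a function of an HTML string also makes it easy to re-test against a saved copy of the page after the site changes.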

Common challenges in web data extraction include navigating complex HTML structures and dealing with anti-bot technologies. Approximately 70% of large AI models depend on scraped web data, underscoring the significance of efficient collection strategies, and tens of millions of web pages are extracted daily, illustrating the scale of data collection in today's digital landscape. As Antonello Zanini indicates, "No matter how advanced your news collection script is, most sites can still detect automated activity and block your access." AI-driven extraction solutions, such as those provided by Appstractor, can improve data retrieval precision and reduce maintenance efforts by up to 85%.

By following these steps, you can efficiently extract valuable information from your selected website. Appstractor's content collection solutions support use cases such as real estate listing alerts and compensation benchmarking while ensuring GDPR compliance.

Store Your Data: Best Practices for Data Management

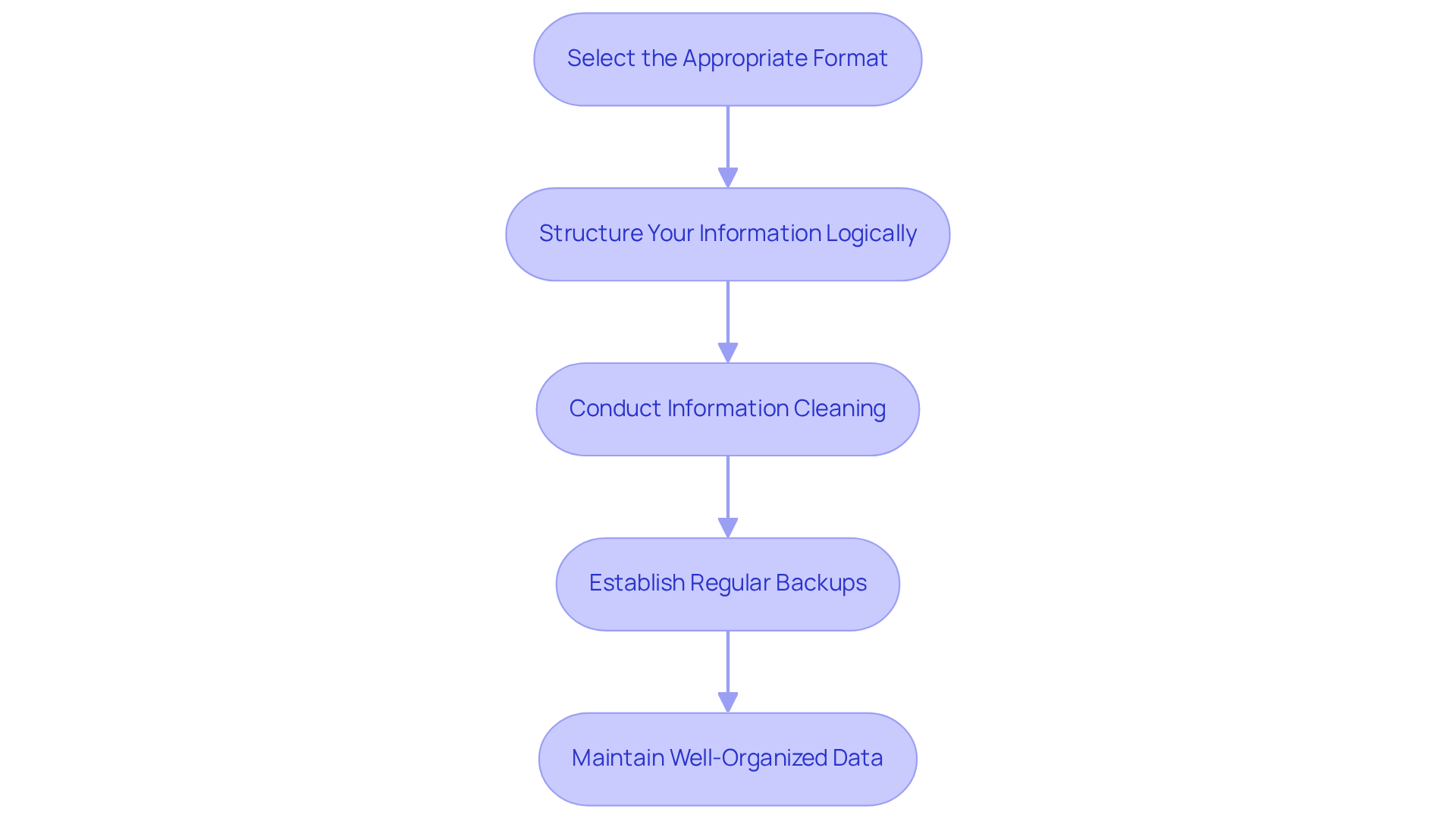

Storing scraped data properly is crucial to maximising its usefulness. Here are essential best practices for effective data management:

- Select the Appropriate Format: Choose a storage format that aligns with your project requirements. Common options include CSV for simplicity, JSON for hierarchical data, or SQL and NoSQL databases for structured storage.

- Structure Your Data Logically: Organise your data in a way that facilitates easy access and analysis. For instance, when scraping product details, create distinct fields for attributes such as name, price, and description to enhance clarity.

- Clean Your Data: Before storage, remove duplicates and irrelevant entries. Libraries such as Pandas can simplify this process, enabling effective manipulation and preparation of data.

- Establish Regular Backups: Safeguard your data from loss by implementing a consistent backup strategy. Cloud storage options provide an additional layer of security, ensuring your data remains safe and accessible.
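The structuring, cleaning, and format choices above can be sketched in a few lines. This is a minimal illustration using only the standard library: the product fields follow the name, price, and description example, while the function names and the rule of treating rows with the same name and price as duplicates are assumptions made for the sketch. For larger datasets, Pandas offers `DataFrame.drop_duplicates` for the same job.

```python
import csv
import io
import json

FIELDS = ["name", "price", "description"]  # the distinct fields from the example


def clean_records(records):
    """Trim whitespace, drop rows missing a name or price, and remove duplicates."""
    seen = set()
    cleaned = []
    for record in records:
        row = {field: str(record.get(field) or "").strip() for field in FIELDS}
        if not row["name"] or not row["price"]:
            continue  # incomplete entry, not useful for analysis
        key = (row["name"].lower(), row["price"])  # assumed duplicate rule
        if key in seen:
            continue  # duplicate of an earlier row
        seen.add(key)
        cleaned.append(row)
    return cleaned


def to_csv(records):
    """Serialise records as CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS, lineterminator="\n")
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


def to_json(records):
    """Serialise records as a JSON array."""
    return json.dumps(records, indent=2, ensure_ascii=False)
```

Writing the returned text to a file with `open(path, "w", encoding="utf-8")` gives a CSV or JSON file that spreadsheet and analysis tools can read directly.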

By adhering to these best practices, you can maintain well-organised, clean, and analysis-ready data and get more value from every scraping run.
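For the database option and the backup advice, a rough sketch with Python's built-in `sqlite3` module might look like the following. SQLite is chosen here only because it needs no server; the `products` table, its columns, and the function names are assumptions for illustration, and a production setup would likely copy the backup file to cloud storage afterwards.

```python
import sqlite3


def save_products(conn, records):
    """Create the table if needed and insert or update records keyed on name."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS products ("
        "name TEXT PRIMARY KEY, price REAL, description TEXT)"
    )
    conn.executemany(
        "INSERT OR REPLACE INTO products (name, price, description) "
        "VALUES (:name, :price, :description)",
        records,
    )
    conn.commit()


def backup_database(conn, backup_path):
    """Copy the whole database to backup_path using SQLite's online backup API."""
    target = sqlite3.connect(backup_path)
    try:
        conn.backup(target)
    finally:
        target.close()
```

Using the product name as the primary key means a later scrape of the same product overwrites the earlier row, which keeps the table free of duplicates between runs.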

Conclusion

Web scraping stands out as an essential tool for businesses looking to leverage data in a competitive environment. This guide has clarified the process of extracting valuable information from websites, underscoring its importance in market research, competitor analysis, and trend identification. By mastering the art of web scraping, organisations can gain insights that inform strategic decision-making and drive innovation.

Key components include:

- Setting up an efficient environment with the appropriate tools.

- Implementing step-by-step scraping techniques.

- Adhering to best practices for data management.

From choosing programming languages like Python to ensuring ethical data collection, each aspect is vital in the web scraping journey. Moreover, with technological advancements such as AI-driven solutions and cloud-based tools, the field of web data extraction is set for significant growth and enhanced efficiency.

Mastering web scraping is increasingly a necessity. As data remains a cornerstone of business strategy, equipping oneself with the knowledge and tools for effective data extraction and management is crucial. By embracing these techniques and best practices, organisations can not only enhance their data capabilities but also maintain a competitive edge in an ever-evolving digital landscape.

Frequently Asked Questions

What is web scraping?

Web scraping is an automated method used to extract data from websites, allowing businesses to efficiently collect web pages and specific details for analysis.

Why is web scraping important?

Web scraping is important because it facilitates rapid data collection for market research, competitor monitoring, and trend analysis, helping businesses make informed, data-driven decisions.

What is the projected growth of the web data extraction market?

The global web data extraction market is expected to grow significantly, with projections suggesting it could exceed USD 2 billion by 2030.

How do businesses use web data extraction?

Businesses use web data extraction to gain insights for strategic decisions, such as e-commerce retailers monitoring competitor pricing and travel agencies tracking flight prices to offer the best deals.

What are recent trends in web extraction technology?

Recent trends include a shift towards AI-driven solutions and cloud-native environments that enhance the efficiency of data collection processes.

How are companies addressing compliance and ethical data practices in web scraping?

Companies are increasingly prioritising compliance and ethical data practices by focusing on building transparent relationships with data sources rather than just extracting data.

List of Sources

- Understand Web Scraping: Definition and Importance

- State of Web Scraping 2026: Trends, Challenges & What’s Next (https://browserless.io/blog/state-of-web-scraping-2026)

- Market Leaders and Laggards: Global Web Scraping Services Market Trends and Forecast (2026 - 2033) (https://linkedin.com/pulse/market-leaders-laggards-global-web-scraping-services-trends-lgfpe)

- Web Scraping Report 2026: Market Trends, Growth & Key Insights (https://promptcloud.com/blog/state-of-web-scraping-2026-report)

- 2026 Web Scraping Industry Report - PDF (https://zyte.com/whitepaper-ebook/2026-web-scraping-industry-report)

- Web Scraping: Unlocking Business Insights In A Data-Driven World (https://forbes.com/councils/forbestechcouncil/2025/01/27/web-scraping-unlocking-business-insights-in-a-data-driven-world)

- Set Up Your Environment: Tools and Configuration

- Programming Language Popularity Statistics 2026 (https://codegnan.com/programming-language-popularity-statistics)

- https://capsolver.com/blog/web-scraping/best-coding-language-for-web-scraping

- 8 Best Python Web Scraping Libraries in 2026 (https://iproyal.com/blog/python-web-scraping-libraries)

- Best Python Web Scraping Tools 2026 (Updated) (https://medium.com/@inprogrammer/best-python-web-scraping-tools-2026-updated-87ef4a0b21ff)

- 14 Most In-demand Programming Languages for 2026 (https://itransition.com/developers/in-demand-programming-languages)

- Scrape Data: Step-by-Step Extraction Techniques

- Web Scraping Statistics & Trends You Need to Know in 2025 (https://open.substack.com/pub/scrapetalk/p/web-scraping-statistics-and-trends?r=1m1mci&utm_campaign=post&utm_medium=web)

- How to Scrape News Articles With AI and Python (https://brightdata.com/blog/web-data/how-to-scrape-news-articles)

- Web Scraping: Industry Stats and Trends in 2023 (https://browsercat.com/post/web-scraping-industry-stats-and-trends-2023)

- The Rise of AI in Web Scraping: Key Statistics and Trends (2025-2026) - ScrapingAPI.ai (https://scrapingapi.ai/blog/the-rise-of-ai-in-web-scraping)

- Web Scraping Market Size, Growth Report, Share & Trends 2025 - 2030 (https://mordorintelligence.com/industry-reports/web-scraping-market)

- Store Your Data: Best Practices for Data Management

- 19 Inspirational Quotes About Data | The Pipeline | ZoomInfo (https://pipeline.zoominfo.com/operations/19-inspirational-quotes-about-data)

- Data Management Quotes To Live By | InfoCentric (https://infocentric.com.au/2022/04/28/data-management-quotes)

- 20 Data Science Quotes by Industry Experts (https://coresignal.com/blog/data-science-quotes)

- News Scraping Guide: Tools, Use Cases, and Challenges (https://infatica.io/blog/news-scraping)