Introduction

Web scraping has become an essential skill in the digital age, allowing individuals and businesses to leverage the vast amounts of data available online. This guide explores the intricacies of extracting information from websites, providing insights into the tools and techniques that facilitate this process. As the demand for efficient data collection increases, so do the challenges that accompany it.

How can one effectively navigate the legal and technical hurdles while ensuring ethical scraping practices?

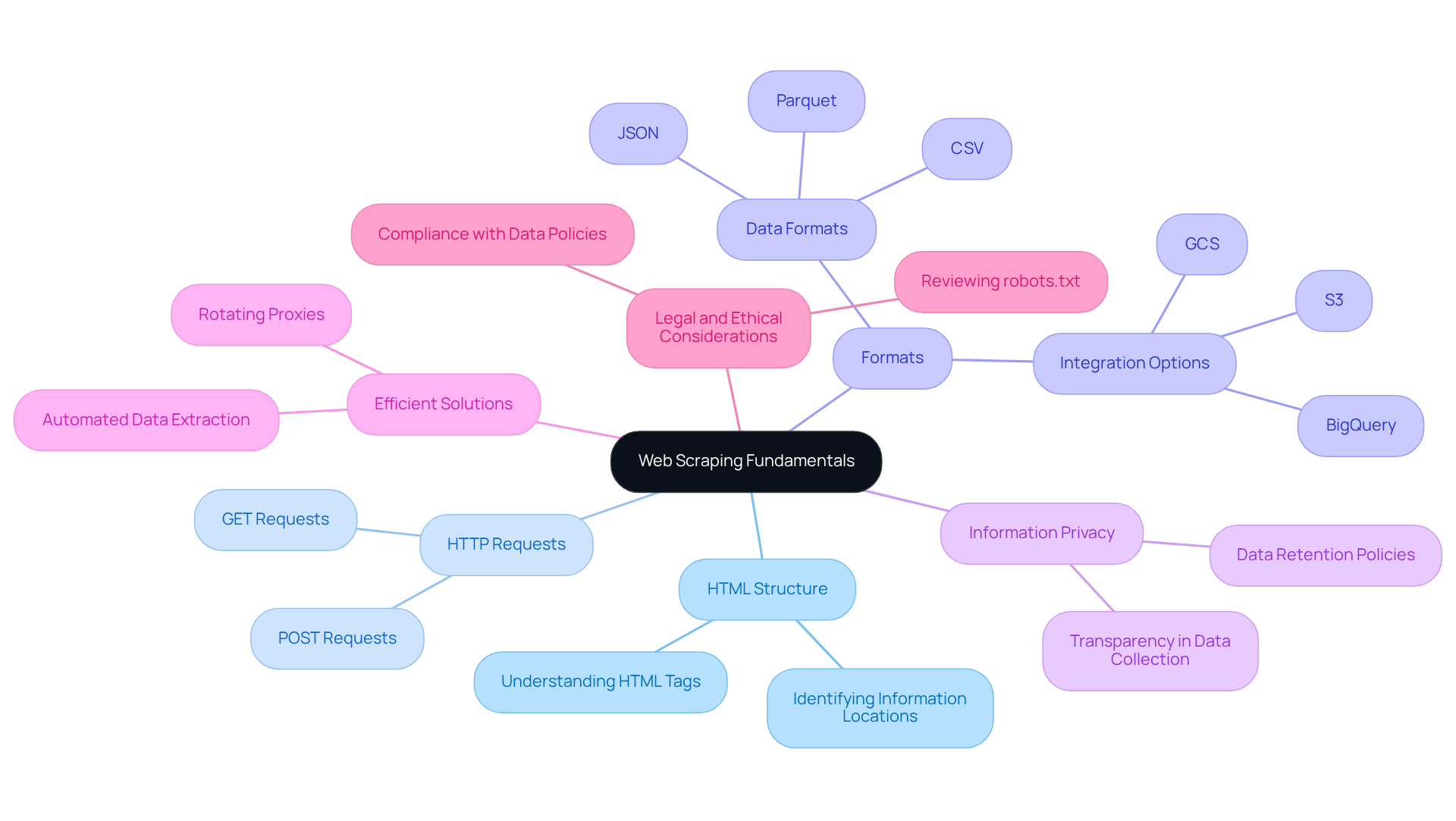

Understand Web Scraping Fundamentals

Web scraping is the automated extraction of information from websites. A scraper retrieves a web page and uses its markup structure to locate and extract the data it needs. Below are some key concepts:

- HTML Structure: Websites are constructed using HTML, which defines the layout of web pages. A solid understanding of HTML tags (such as <div>, <span>, and <table>) is essential for identifying the location of the information you seek.

- HTTP Requests: Web scraping generally requires sending HTTP requests to a server to access web pages. Familiarity with request methods such as GET and POST is important, as these methods are used to request information from a server.

- Formats: The extracted information can be delivered in file formats such as JSON, CSV, and Parquet, sent to storage and warehouse destinations such as S3, GCS, and BigQuery, or inserted directly into a database. Appstractor provides a range of options to meet your integration needs. Understanding how to manage these formats is crucial for effective data manipulation.

- Information Privacy: Appstractor ensures that only billing metadata (IP, timestamp, byte count) is retained, never the response body, thereby upholding transparency and confidentiality in your extraction processes.

- Efficient Solutions: Appstractor offers automated web data extraction, utilising rotating proxies and comprehensive service options tailored for businesses. This approach eliminates the need for manual data collection and significantly enhances the efficiency of your data retrieval efforts.

- Legal and Ethical Considerations: It is vital to review a website's robots.txt file to understand its data collection policies. Adhering to these guidelines is essential to avoid legal issues and ensure ethical data collection practices.
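To make the HTML and HTTP concepts above concrete, here is a minimal sketch that uses only Python's standard library. The sample markup, the example.com URLs, the user-agent string, and the TableParser and parse_table names are all assumptions made for illustration; a library such as Beautiful Soup would offer a more convenient interface for the same task.

```python
from html.parser import HTMLParser
from urllib.request import Request

# Hypothetical markup standing in for a downloaded page.
SAMPLE_HTML = """
<table id="prices">
  <tr><th>Item</th><th>Price</th></tr>
  <tr><td>Widget</td><td>4.50</td></tr>
  <tr><td>Gadget</td><td>12.00</td></tr>
</table>
"""


class TableParser(HTMLParser):
    """Collects the text of every <td>/<th> cell, grouped by <tr> row."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._row = None       # cells of the row currently being read
        self._in_cell = False  # True while inside a <td> or <th>

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._in_cell = True
            self._row.append("")

    def handle_data(self, data):
        if self._in_cell:
            self._row[-1] += data

    def handle_endtag(self, tag):
        if tag in ("td", "th"):
            self._in_cell = False
        elif tag == "tr" and self._row is not None:
            self.rows.append([cell.strip() for cell in self._row])
            self._row = None


def parse_table(html):
    """Return the table in `html` as a list of rows, each a list of cell strings."""
    parser = TableParser()
    parser.feed(html)
    parser.close()
    return parser.rows


# Building a Request does not send anything. Without a body urllib uses GET;
# attaching a body (data=...) makes it a POST.
get_request = Request(
    "https://example.com/prices",
    headers={"User-Agent": "demo-scraper/0.1"},
)
post_request = Request("https://example.com/search", data=b"q=widget")

rows = parse_table(SAMPLE_HTML)
```

Here rows holds one list per table row, starting with the header row. Nothing is transmitted until a request object is passed to urllib.request.urlopen.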

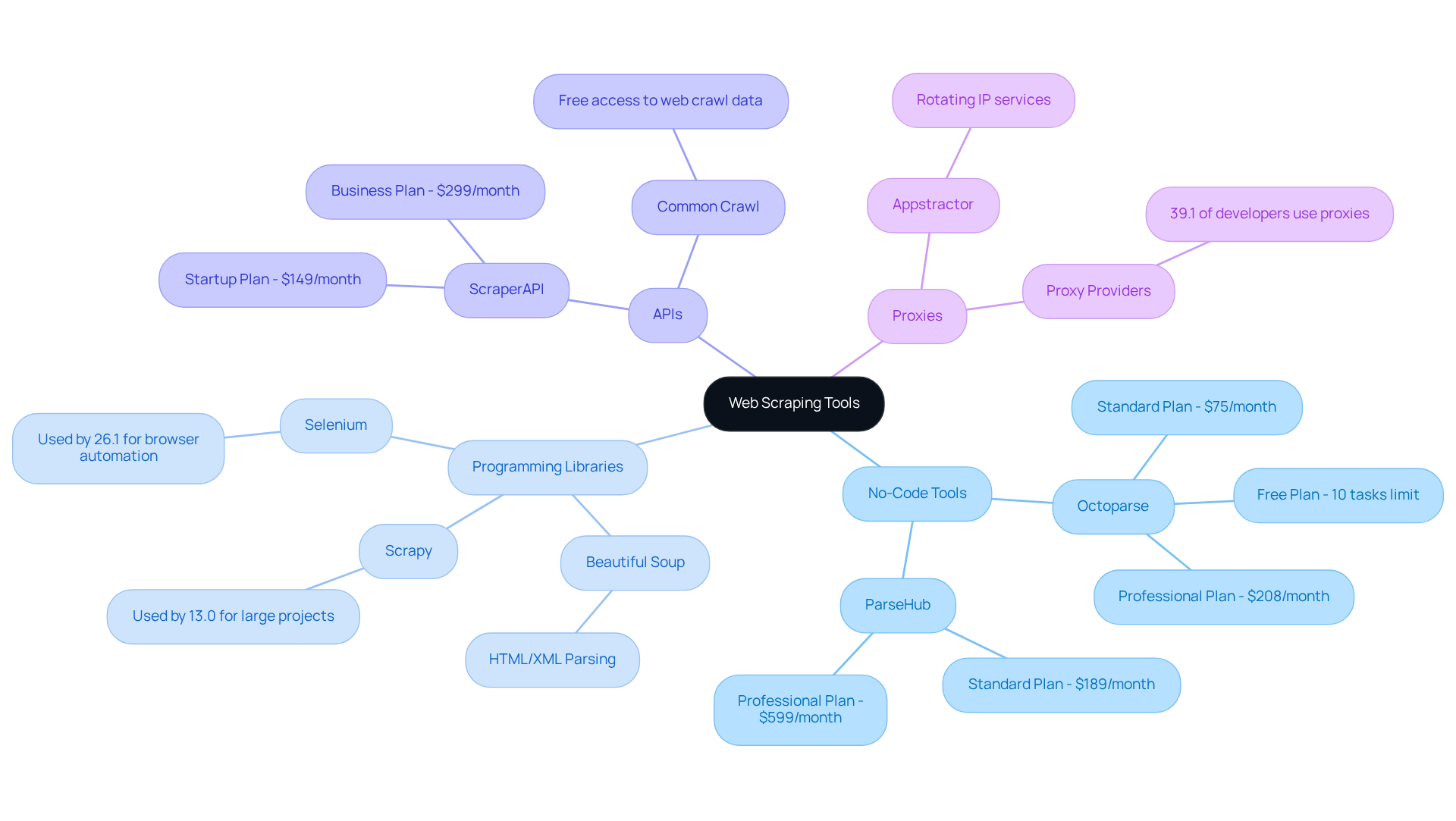

Choose the Right Tools for Web Scraping

Selecting the appropriate tools is crucial for successful web scraping. Here are some popular options:

- No-Code Tools: For beginners, platforms like Octoparse and ParseHub allow users to scrape data without any coding knowledge. Their user-friendly interfaces come equipped with pre-built templates, which lowers the barrier for newcomers. Adoption of no-code extraction tools is expected to keep growing in 2026 as businesses look for efficient solutions. According to industry reports, the web data extraction software market is projected to grow significantly, with estimates ranging from USD 2.2B to USD 3.5B by 2026.

- Programming Libraries: If you have coding skills, consider using Python libraries like Beautiful Soup or Scrapy. Beautiful Soup in particular is favoured for its simplicity in parsing HTML and XML documents, making it a popular option for those new to scraping. Python has a reported 69.6% adoption rate for scraping projects, which underlines its relevance in the field.

- Browser Extensions: Extensions like Web Scraper and Data Miner extract data directly from your browser. They are easy to use and suitable for quick tasks.

- APIs: Some websites offer APIs that allow you to access their data in a structured format. Always check whether an API is available before scraping, as it is often more efficient and aligns with the website's policies.

- Proxies: To avoid IP bans while collecting data, consider using Appstractor's proxy services, which rotate IP addresses. With a worldwide self-repairing IP pool, Appstractor provides dependable support for large-scale extraction projects. Utilising these proxies can enhance the reliability of your data extraction efforts and reduce interruptions, all while maintaining GDPR compliance.
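The idea of rotating IP addresses can be sketched as a simple round-robin over a proxy list. This is an illustration only, not how any particular provider works: the proxy hostnames and the ProxyRotator class are hypothetical, and a real service supplies its own endpoints and credentials.

```python
from itertools import cycle
from urllib.request import ProxyHandler, build_opener

# Hypothetical proxy endpoints used purely for illustration.
PROXIES = [
    "http://proxy-a.example.net:8080",
    "http://proxy-b.example.net:8080",
    "http://proxy-c.example.net:8080",
]


class ProxyRotator:
    """Hands out proxies in round-robin order, one per request."""

    def __init__(self, proxies):
        if not proxies:
            raise ValueError("at least one proxy is required")
        self._pool = cycle(proxies)

    def next_proxy(self):
        """Return the next proxy URL, wrapping around at the end of the list."""
        return next(self._pool)

    def opener(self):
        """Build a urllib opener that routes HTTP and HTTPS via the next proxy."""
        proxy = self.next_proxy()
        return build_opener(ProxyHandler({"http": proxy, "https": proxy}))


rotator = ProxyRotator(PROXIES)
first_four = [rotator.next_proxy() for _ in range(4)]
```

Calling rotator.opener().open(url, timeout=10) for each request would then send consecutive requests through different addresses.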

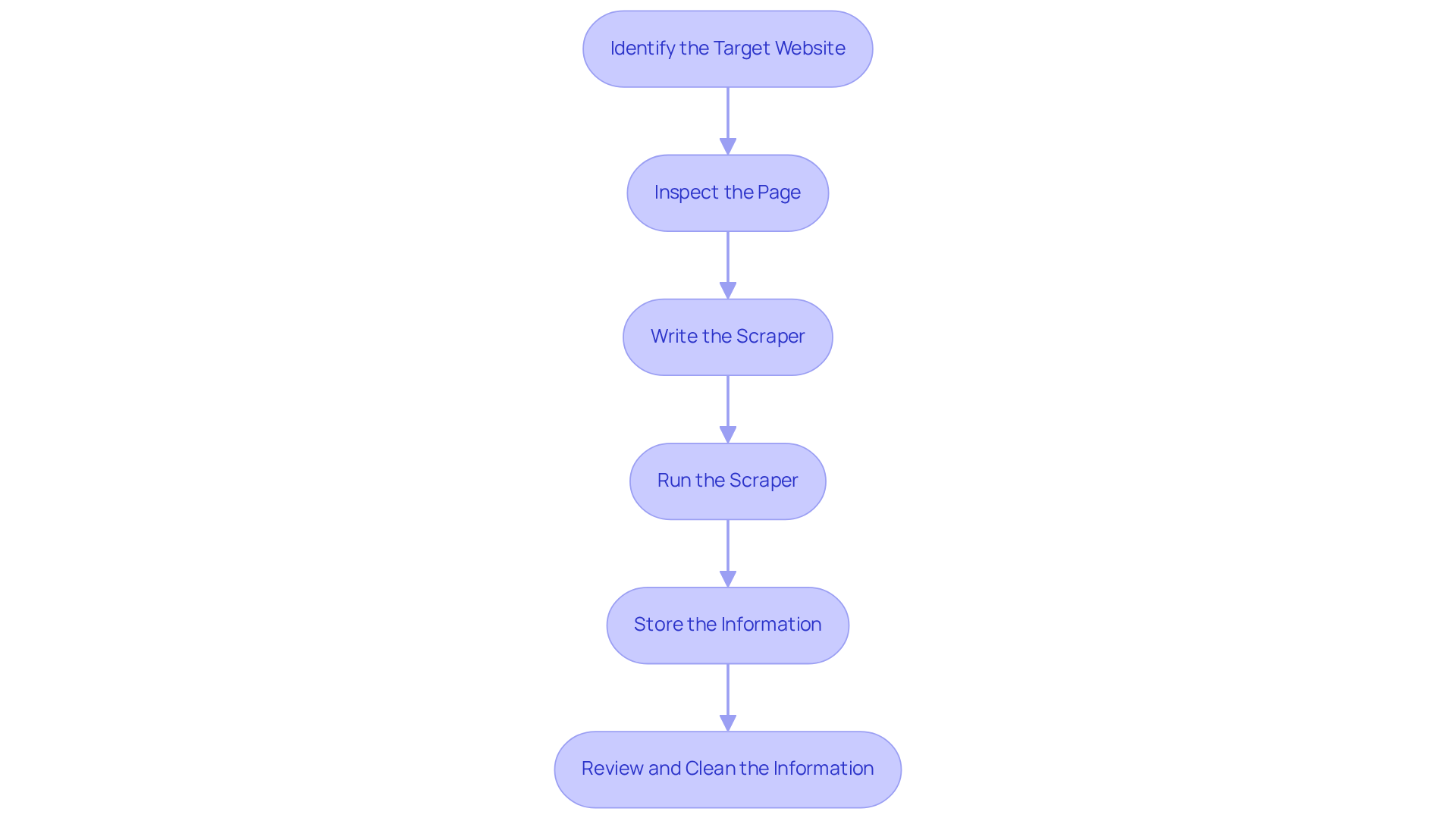

Follow a Step-by-Step Extraction Process

To successfully extract information from a website, follow these steps:

- Identify the Target Website: Select the website you wish to scrape and verify its scraping policy by checking the robots.txt file to ensure compliance with its terms.

- Inspect the Page: Utilise your browser's developer tools, typically accessed by right-clicking and selecting 'Inspect', to analyse the HTML structure. Focus on identifying the specific elements that contain the information you want to extract.

- Write the Scraper: Depending on your approach:

  - For no-code tools, adhere to the provided instructions to configure your scraping project.

  - For programming libraries, create a script that sends an HTTP request to the target URL and parses the HTML to extract the required information using libraries like BeautifulSoup and requests.

- Run the Scraper: Execute your scraper while monitoring its performance. Ensure it accurately retrieves the intended information and handles any errors that arise. Consider utilising Appstractor's rotating proxy servers for self-serve IPs or the full-service option for turnkey data delivery, which can improve the efficiency of your scraping efforts.

- Store the Information: After extraction, save the information in structured formats such as CSV or JSON, facilitating future analysis and accessibility. Appstractor supports various output formats, including JSON, CSV, and direct database inserts, allowing for seamless integration into your existing workflows.

- Review and Clean the Information: After extraction, evaluate the information for accuracy and completeness. Address any inconsistencies or errors to maintain high-quality results. With Appstractor's information management solutions, your information can be normalised and validated before delivery, improving its overall quality.
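Parts of the process above can be sketched in Python with the standard library alone: the robots.txt check from the first step, and the storing and cleaning steps at the end. The robots.txt content, the user-agent string, the record fields, and all function names below are assumptions chosen for illustration; the extraction step itself is represented by a hard-coded list.

```python
import csv
import io
import json
from urllib.robotparser import RobotFileParser

USER_AGENT = "demo-scraper"  # hypothetical user-agent string

# Hypothetical robots.txt content; normally downloaded from the target site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""


def is_allowed(robots_txt, url, user_agent=USER_AGENT):
    """Identify the target: check whether robots.txt permits fetching the URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


def clean(records):
    """Review and clean: trim whitespace, drop unnamed rows, remove duplicates."""
    seen, cleaned = set(), []
    for record in records:
        row = {key: value.strip() for key, value in record.items()}
        identity = (row["name"], row["price"])
        if row["name"] and identity not in seen:
            seen.add(identity)
            cleaned.append(row)
    return cleaned


def to_csv(records):
    """Store: serialise the records as CSV text."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["name", "price"], lineterminator="\n")
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


def to_json(records):
    """Store: serialise the records as a JSON array."""
    return json.dumps(records, indent=2)


# Hypothetical output of the extraction step, including messy rows.
raw = [
    {"name": " Widget ", "price": "4.50"},
    {"name": "Widget", "price": "4.50 "},
    {"name": "", "price": "1.00"},
    {"name": "Gadget", "price": "12.00"},
]
records = clean(raw)
```

In this sketch, cleaning happens before serialisation so that both the CSV and JSON outputs contain the same two deduplicated rows. Writing to a file instead of a string only requires passing an open file handle in place of the StringIO buffer.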

In 2026, the success rates of web extraction projects vary significantly by method, with automated solutions showing higher efficiency and reliability. For instance, organisations that take a structured approach to inspecting web pages before extraction have achieved notable improvements in data quality and operational efficiency. As Antonello Zanini, a technical writer, emphasises, "No matter how sophisticated your news collection script is, most sites can still detect automated activity and block your access," highlighting the importance of thorough inspection and compliance in the data gathering process.

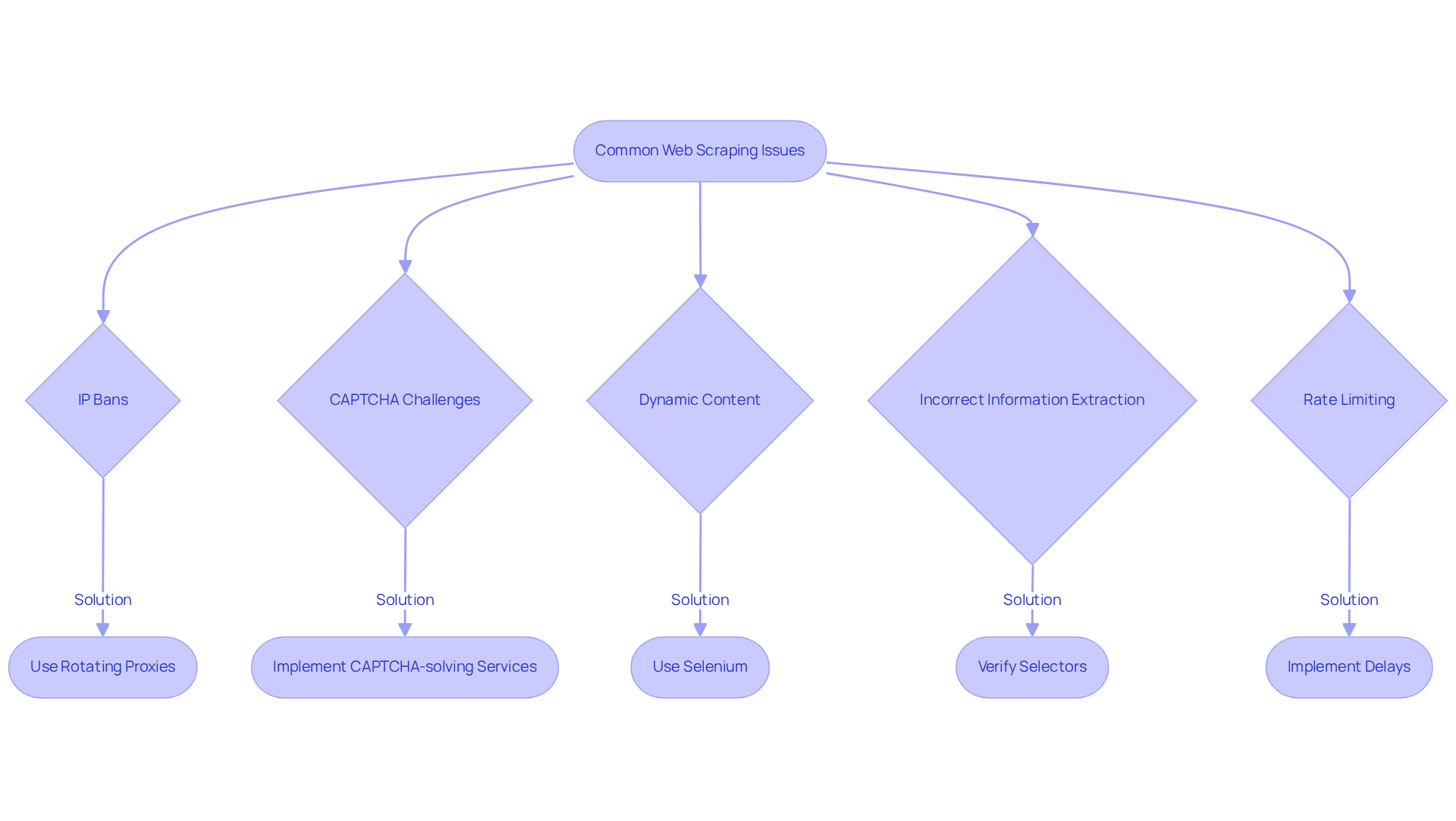

Troubleshoot Common Web Scraping Issues

Even experienced scrapers face challenges. Below are some common issues along with strategies to troubleshoot them:

- IP Bans: When an IP address is banned, utilising rotating proxies becomes essential. This technique distributes requests across multiple IP addresses, significantly reducing the likelihood of detection and subsequent bans. Research shows that rotating proxies can effectively mask automated scraping activity, facilitating smoother operations.

- CAPTCHA Challenges: Many websites implement CAPTCHAs to prevent automated scraping attempts. To navigate these barriers, use CAPTCHA-solving services or implement strategic delays between requests to mimic human behaviour. Approximately 30% of websites employ CAPTCHA mechanisms, making it crucial to have a plan in place.

- Dynamic Content: If the required information is loaded dynamically via JavaScript, a browser automation tool like Selenium is necessary. It drives a real browser, which can run in headless mode, to render JavaScript content, enabling successful retrieval from complex sites.

- Incorrect Information Extraction: If your scraper retrieves inaccurate information, verify your selectors against the HTML structure of the page. Debugging tools can help you inspect the page and confirm that each selector matches the intended element.

- Rate Limiting: Websites often impose limits on the number of requests within a specific timeframe. To avoid triggering these limits and facing blocks, implement delays between requests. A delay of 10 to 20 seconds between requests is generally recommended to maintain a low profile while scraping.
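Delays and retries like those described above can be sketched as follows. The function names, the exponential backoff schedule, and the choice to retry only on OSError (the base class of urllib's network errors) are assumptions made for this example rather than a prescribed approach; the sleep function is injectable so the logic can be exercised without actually waiting.

```python
import random
import time


def polite_delay(min_seconds=10.0, max_seconds=20.0):
    """Pick a randomised pause, by default within a 10 to 20 second window."""
    return random.uniform(min_seconds, max_seconds)


def fetch_with_backoff(fetch, url, max_attempts=4, base_delay=1.0, sleep=time.sleep):
    """Call fetch(url); after a failure wait base_delay * 2**attempt and retry.

    `fetch` is any callable that returns the page body or raises OSError,
    for example a thin wrapper around urllib.request.urlopen.
    """
    for attempt in range(max_attempts):
        try:
            return fetch(url)
        except OSError:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the last error
            sleep(base_delay * 2 ** attempt)
```

A scraping loop would typically call time.sleep(polite_delay()) between pages and wrap each download in fetch_with_backoff, so that a temporary block leads to a longer pause rather than a burst of repeated requests.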

Conclusion

In today’s data-driven landscape, mastering the art of extracting information from websites is crucial. This guide has illuminated the fundamental principles of web scraping, covering essential topics such as understanding HTML structures, HTTP requests, and navigating the legal and ethical landscape of data collection. By grasping these concepts, individuals and businesses can effectively harness the power of data, leading to informed decisions and strategic advancements.

Key insights discussed include:

- The importance of selecting the right tools for web scraping, whether through no-code platforms for beginners or programming libraries for advanced users.

- The step-by-step extraction process, which emphasises careful planning, from identifying target websites to troubleshooting common issues like IP bans and CAPTCHA challenges. Each of these components plays a critical role in ensuring successful and efficient data retrieval.

As the demand for web scraping tools continues to rise, it is imperative to stay informed about the latest techniques and best practices. Embracing these strategies not only enhances data collection efforts but also fosters a deeper understanding of the digital landscape. By applying the knowledge gained from this guide, individuals and organisations can unlock valuable insights and maintain a competitive edge in their respective fields.

Frequently Asked Questions

What is web scraping?

Web scraping is the automated process of extracting information from a website by retrieving a web page and using its markup structure to identify and extract the desired information.

Why is understanding HTML important for web scraping?

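Understanding HTML is essential because websites are constructed using HTML tags (such as <div>, <span>, and <table>), and knowing them lets you identify the location of the information you seek.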

What role do HTTP requests play in web scraping?

HTTP requests are used to access web pages during the web scraping process. Familiarity with request methods such as GET and POST is important, as these methods are used to request information from a server.

In what formats can extracted information be stored?

Extracted information can be stored in file formats such as JSON, CSV, and Parquet, sent to destinations such as S3, GCS, and BigQuery, or inserted directly into a database.

How does Appstractor handle information privacy during web scraping?

Appstractor ensures that only billing metadata (such as IP, timestamp, and byte count) is retained during the scraping process, while the response body is never stored, maintaining transparency and confidentiality.

What solutions does Appstractor offer for efficient web scraping?

Appstractor provides advanced information mining solutions that automate web information extraction using rotating proxies and tailored service options for businesses, enhancing the efficiency of data retrieval efforts.

What legal and ethical considerations should be taken into account when web scraping?

It is crucial to review a website's robots.txt file to understand its data collection policies. Adhering to these guidelines helps avoid legal issues and ensures ethical data collection practices.
