Introduction

Data scraping has become a vital tool for marketers aiming to make use of the wealth of information available online. By automating the extraction of data from websites, professionals can uncover insights into market trends, competitor strategies, and customer preferences.

As the digital marketing landscape continues to evolve, marketers face new challenges and ethical considerations related to data scraping. How can they effectively navigate these complexities while maximising the benefits of their data-driven strategies?

This article explores best practices for data scraping with Python, giving marketers a structured roadmap to improve their decision-making and maintain a competitive edge.

Understand the Fundamentals of Data Scraping

Data scraping with Python is an automated technique for extracting information from websites and transforming unstructured content into a structured format suitable for analysis. For marketing professionals, it provides insight into competitor pricing, customer reviews, and market trends, all of which feed data-driven decision-making. For instance, companies can track rival prices in near real time and adjust their own strategies quickly to remain competitive in an unpredictable market.

Appstractor enhances marketplace performance through features such as:

- Seller reputation and reviews

- Listing availability alerts

- Brand sentiment tracking

These capabilities empower professionals to gain deeper insights into consumer behaviour and market dynamics, ultimately driving better decision-making. By leveraging seller reputation tracking, businesses can identify top-performing products and adjust their offerings accordingly.

Understanding the basics of HTML and CSS is crucial for efficient extraction, because these technologies determine how web pages are structured and therefore where the target information sits. This knowledge lets marketers locate and retrieve the elements they need. As Shishir Sutradhar observes, 'If your company is serious about growth, data scraping with Python for web data extraction is essential.' This underscores the importance of mastering these skills to make full use of web data.
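To illustrate how page structure guides extraction, here is a minimal sketch that turns a small HTML fragment into structured rows. It uses only Python's standard-library `html.parser`; the sample markup and the class names `product-name` and `product-price` are assumptions made up for this example, and a library such as Beautiful Soup offers a more convenient API for the same idea.

```python
from html.parser import HTMLParser


class ProductParser(HTMLParser):
    """Collect product names and prices from elements marked with CSS classes."""

    def __init__(self):
        super().__init__()
        self.products = []   # finished rows: {"name": ..., "price": ...}
        self._field = None   # field whose text we expect next, if any
        self._current = {}   # row currently being assembled

    def handle_starttag(self, tag, attrs):
        # The class attribute tells us which piece of data the element holds.
        classes = (dict(attrs).get("class") or "").split()
        if "product-name" in classes:
            self._field = "name"
        elif "product-price" in classes:
            self._field = "price"

    def handle_data(self, data):
        if self._field and data.strip():
            self._current[self._field] = data.strip()
            self._field = None
            # Once both fields are present, the row is complete.
            if all(key in self._current for key in ("name", "price")):
                self.products.append(self._current)
                self._current = {}


# Hypothetical markup standing in for a downloaded page.
SAMPLE_HTML = """
<ul>
  <li class="product"><span class="product-name">Desk Lamp</span>
      <span class="product-price">19.99</span></li>
  <li class="product"><span class="product-name">Office Chair</span>
      <span class="product-price">129.00</span></li>
</ul>
"""

parser = ProductParser()
parser.feed(SAMPLE_HTML)
print(parser.products)
```

The class names play the role of CSS selectors: knowing which elements carry the name and price is what makes the unstructured page usable as a table of rows.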

By 2026, the impact of data extraction on marketing decision-making is expected to increase significantly. Companies that scrape data with Python can adapt quickly to changing market conditions and respond to consumer demands and competitive pressures. With 97% of consumers using social media to shop locally, the capacity to gather real-time insights from many online sources will be a significant advantage for marketers.

Moreover, the ethical and legal implications of data extraction must not be overlooked. As companies rely more heavily on this technique, complying with data protection regulations such as GDPR and adhering to each website's guidelines will be crucial to avoid potential pitfalls. By incorporating these practices, marketers can ensure that their information-gathering efforts are both effective and responsible.

Choose the Right Tools and Libraries for Efficient Scraping

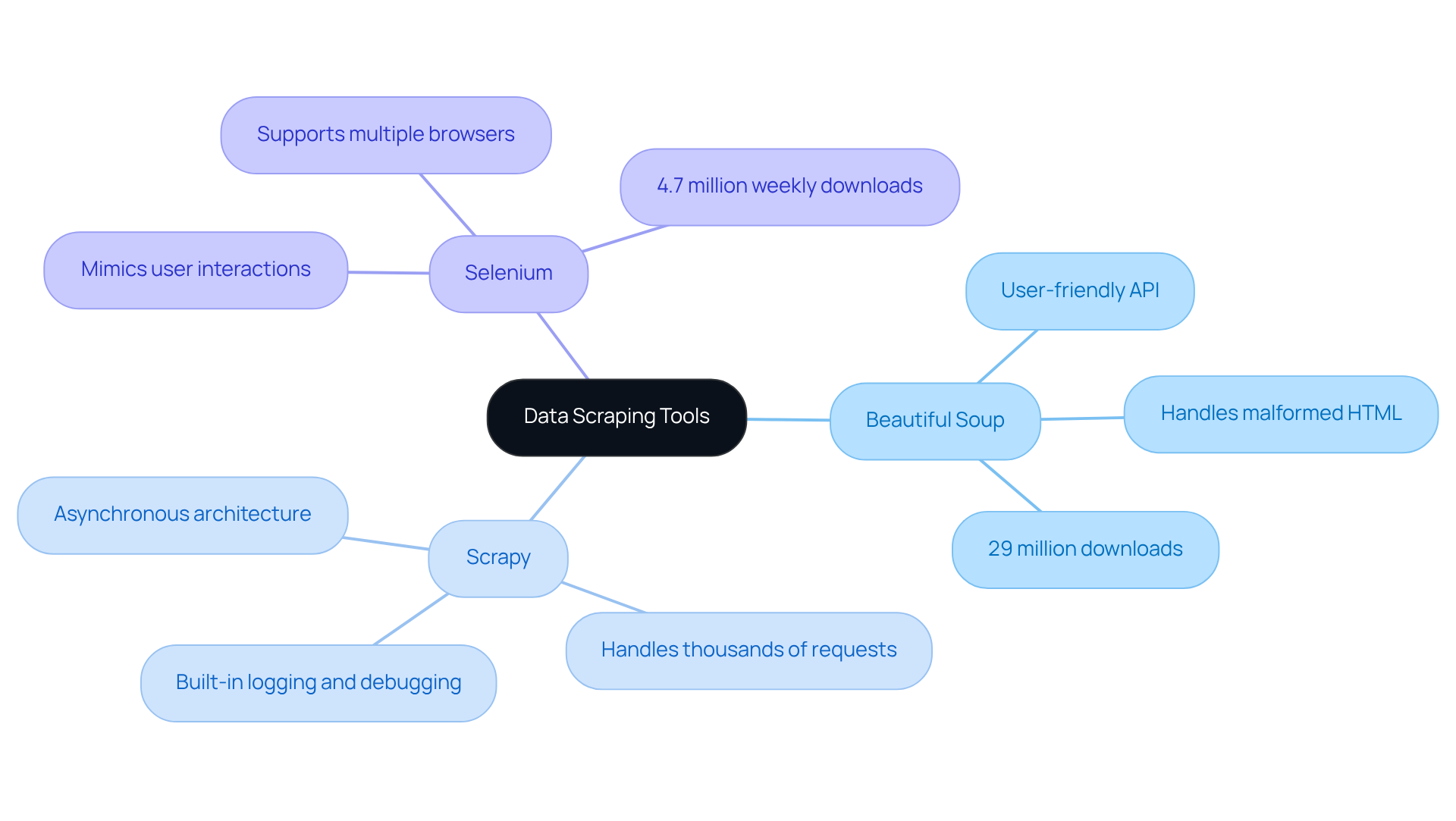

Choosing the appropriate tools and libraries is crucial for efficient data scraping with Python. Among the most popular libraries, Beautiful Soup, Scrapy, and Selenium each provide distinct benefits suited to different extraction needs.

- Beautiful Soup excels in parsing HTML and XML documents, making it a go-to choice for projects involving static content. With approximately 29 million downloads, it is widely adopted for its user-friendly API and ability to handle malformed HTML efficiently.

- Scrapy, conversely, is a powerful framework intended for large-scale data scraping projects, capable of handling thousands of simultaneous requests. Its asynchronous architecture allows for efficient data scraping, making it ideal for marketers dealing with extensive datasets. Scrapy's built-in logging and debugging tools further enhance the experience, ensuring reliability and ease of use.

- For data scraping, Selenium stands out as the preferred option for dynamic content rendered by JavaScript. It supports various browsers and can mimic user interactions, making it appropriate for extracting data from complex web applications. With around 4.7 million weekly downloads, Selenium is recognised for its stability and extensive community support, although it may require more resources compared to other libraries.

Marketers should assess their particular needs, including the complexity of the target website and the volume of data required, before choosing a scraping tool. Additionally, Appstractor's cloud-based data extraction services can improve scalability and remove much of the burden of infrastructure management. Appstractor delivers structured data in formats such as JSON, CSV, and Parquet, so users can incorporate the extracted content directly into their workflows. The available user manuals and FAQs guide users in making effective use of these services. As industry leaders emphasise, selecting the appropriate tools is essential for efficient extraction and for successful data-driven marketing strategies.

Adhere to Ethical Guidelines and Legal Compliance in Scraping

Marketers must prioritise ethical guidelines and legal compliance when gathering information. This involves respecting the terms of service of websites, adhering to robots.txt directives, and avoiding the collection of personal information without consent. Familiarising oneself with regulations such as the General Data Protection Regulation (GDPR) is crucial to prevent hefty fines and legal issues.
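As a minimal sketch of honouring robots.txt directives, the standard-library `urllib.robotparser` module can check whether a URL may be fetched before a request is made. The robots.txt content, the bot name `marketing-research-bot`, and the `example.com` URLs below are hypothetical; in practice the file is downloaded from the target site.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; normally fetched from the target site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /account/
Crawl-delay: 5
"""

USER_AGENT = "marketing-research-bot"  # hypothetical bot name

rules = RobotFileParser()
rules.parse(ROBOTS_TXT.splitlines())


def is_allowed(url: str) -> bool:
    """Return True if the parsed rules permit our bot to fetch the URL."""
    return rules.can_fetch(USER_AGENT, url)


print(is_allowed("https://example.com/products/lamp"))   # True
print(is_allowed("https://example.com/account/orders"))  # False
print(rules.crawl_delay(USER_AGENT))                     # 5
```

A check like this covers only the robots.txt part of compliance; terms of service and personal-data rules still need to be reviewed separately.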

For instance, in 2023, 32% of legal inquiries related to data extraction involved unauthorised use of personal or copyrighted information, underscoring the need for vigilance. Additionally, bots represented 49.6% of all internet traffic in 2023, which shows how widespread automated collection has become and why compliance matters.

Marketers should implement practices such as rate limiting so that their data collection does not overwhelm target servers or disrupt the normal functioning of websites. The joint statement on data scraping issued by privacy regulators on 24 August 2023 highlights the growing number of scraping incidents and the need for ethical practices, further underscoring the importance of compliance.
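One simple way to apply rate limiting is to enforce a minimum interval between consecutive requests. The sketch below is an illustration under stated assumptions: the `RateLimiter` class, the 0.1-second interval, and the URLs are hypothetical, and the actual HTTP request is left out.

```python
import time


class RateLimiter:
    """Enforce a minimum interval between consecutive requests."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock   # injectable so the limiter can be tested without waiting
        self._sleep = sleep
        self._last_request = None

    def wait(self):
        """Block until the next request is allowed; return the seconds slept."""
        delay = 0.0
        if self._last_request is not None:
            elapsed = self._clock() - self._last_request
            delay = max(0.0, self.min_interval - elapsed)
            if delay > 0:
                self._sleep(delay)
        self._last_request = self._clock()
        return delay


limiter = RateLimiter(min_interval=0.1)  # a real crawl would usually wait longer
for url in ["https://example.com/a", "https://example.com/b"]:
    waited = limiter.wait()
    # The request for `url` would be sent here.
    print(f"waited {waited:.2f}s before requesting {url}")
```

If the site's robots.txt declares a crawl delay, that value is a sensible lower bound for `min_interval`.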

By maintaining transparency and ethical standards, professionals can build trust with their audience and stakeholders.

Optimize Your Scraping Process for Better Data Quality

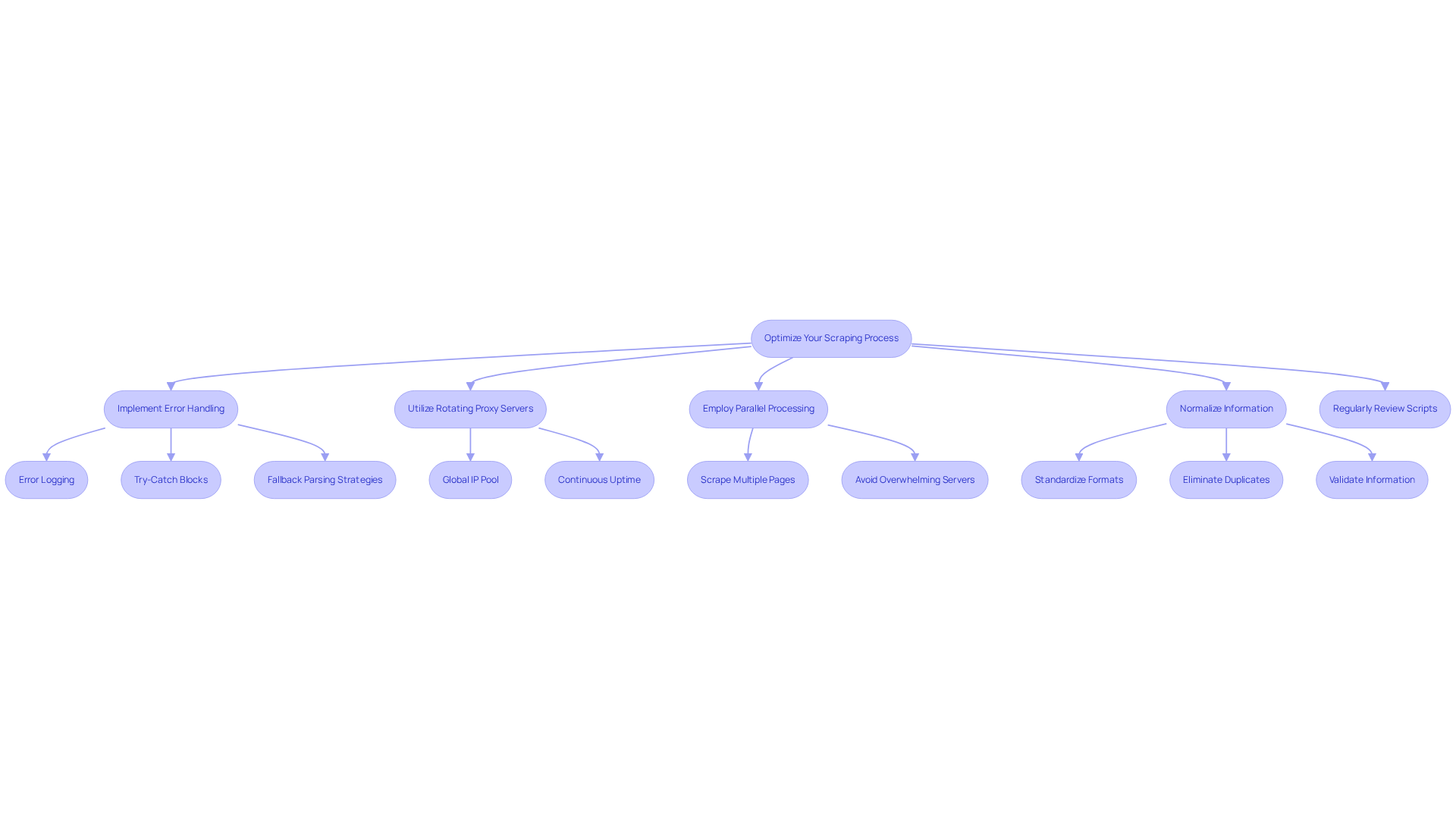

To improve the extraction process, marketers should adopt several best practices that raise data quality. First, Python scraping scripts should be efficient and should handle errors gracefully. Robust error handling - such as error logging, try/except blocks, and fallback parsing strategies - can significantly improve the reliability of data collection. Common quality issues in web extraction include:

- Missing values

- Inconsistent date formats

- Varying number formats

- HTML entities

- Encoding issues

- Duplicate records

- Incomplete information

- Structural changes in website layouts

These problems can undermine the integrity of datasets.
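Several of the issues listed above (missing values, inconsistent date formats, varying number formats, and HTML entities) can be handled with small parsing helpers that try one strategy after another and log what they cannot parse. This is a sketch using only the standard library; the helper names, the list of date formats, and the assumption that prices use a comma as thousands separator and a dot as decimal point are all illustrative choices.

```python
import html
import logging
import re
from datetime import datetime

logger = logging.getLogger("scraper.cleaning")

# Assumed date layouts; extend to match the sites being scraped.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%d.%m.%Y")


def parse_date(raw):
    """Try each known format in turn; log and return None if all fail."""
    text = (raw or "").strip()
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text, fmt).date()
        except ValueError:
            continue  # fall back to the next format
    logger.warning("Unparseable date: %r", raw)
    return None


def parse_price(raw):
    """Extract a number, assuming ',' separates thousands and '.' decimals."""
    text = html.unescape(raw or "")
    match = re.search(r"\d[\d,]*(?:\.\d+)?", text)
    if match is None:
        logger.warning("Unparseable price: %r", raw)
        return None
    return float(match.group().replace(",", ""))


def clean_text(raw):
    """Decode HTML entities and collapse whitespace; None for missing values."""
    if raw is None:
        return None
    return " ".join(html.unescape(raw).split()) or None


print(parse_date("05/03/2024"))           # 2024-03-05
print(parse_price("&pound;1,299.50"))     # 1299.5
print(clean_text("Tom &amp; Co.\n  Ltd"))  # Tom & Co. Ltd
```

Returning `None` instead of raising keeps a single bad field from aborting a whole run, while the log records which values need attention.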

Appstractor's information extraction solutions, including data scraping with Python, Rotating Proxy Servers, and Full Service options, can further improve the collection process. These services enable marketers to automate data collection efficiently while supporting compliance with GDPR. For instance, the rotating proxies provide a global, self-repairing IP pool for continuous uptime, which is vital for keeping collection running. Rotating Proxy Servers can be activated within 24 hours, allowing for rapid deployment in extraction tasks.

Moreover, parallel processing can accelerate data gathering by scraping multiple pages simultaneously; concurrency should be capped so that target servers are not overwhelmed. Normalisation is also crucial for preserving consistency across datasets: it involves standardising formats, eliminating duplicates, and validating values to ensure accuracy. Automated testing helps identify problems early in the extraction process, allowing prompt fixes that uphold data quality. Techniques such as asynchronous validation, caching, and validating a sample of records rather than every record can help balance performance and quality.
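A minimal sketch of capped parallel scraping uses a small thread pool from the standard library, so that only a few pages are fetched at once. The `scrape_all` helper, the `fake_fetch` stand-in (used here so the example runs offline), and the URLs are assumptions for illustration; a real `fetch` function would perform the HTTP request.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed


def scrape_all(urls, fetch, max_workers=4):
    """Run fetch(url) for every URL on a small thread pool.

    Returns (results, errors), both dicts keyed by URL. max_workers caps
    how many requests are in flight at the same time.
    """
    results, errors = {}, {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {pool.submit(fetch, url): url for url in urls}
        for future in as_completed(futures):
            url = futures[future]
            try:
                results[url] = future.result()
            except Exception as exc:  # one failed page should not stop the batch
                errors[url] = exc
    return results, errors


def fake_fetch(url):
    """Stand-in for a real HTTP request so the sketch runs offline."""
    if url.endswith("/broken"):
        raise ValueError(f"could not fetch {url}")
    return f"<html>{url}</html>"


urls = [f"https://example.com/page/{n}" for n in range(1, 4)]
urls.append("https://example.com/broken")

results, errors = scrape_all(urls, fake_fetch, max_workers=2)
print(sorted(results))
print(list(errors))
```

Keeping `max_workers` low, and combining it with a delay between requests to the same host, is what keeps the speed-up from turning into excessive load on the target site.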

Regularly reviewing and updating extraction scripts is vital, because website structures change and scripts that are not maintained silently stop returning correct data. Additionally, marketers must be aware of the legal implications of web scraping, including respecting robots.txt and complying with privacy regulations. By adhering to these best practices and using Appstractor's enterprise-level extraction solutions - which include options for JSON, CSV, and direct database inserts - professionals can improve the precision and dependability of their insights, ultimately leading to more informed decision-making and improved campaign results.

Implement Effective Data Management Practices After Scraping

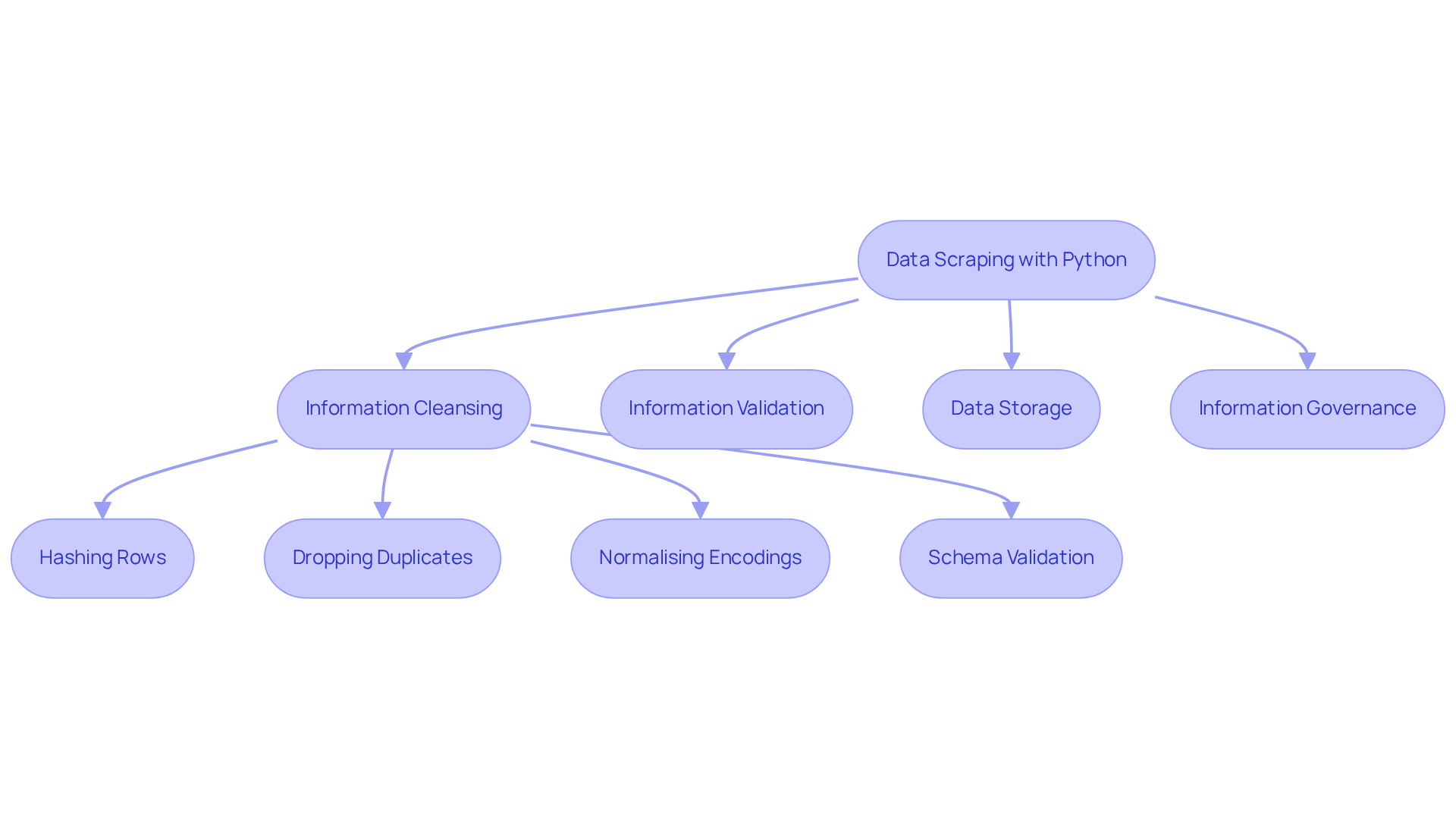

After scraping data with Python, marketers must prioritise robust data management practices to maximise the usability of what they have collected. This begins with comprehensive data cleansing to eliminate inaccuracies, duplicates, and irrelevant entries. At Appstractor, we ensure clean, de-duplicated information by:

- Hashing rows

- Dropping duplicates

- Normalising encodings

- Conducting schema validation prior to delivery
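The steps listed above can be sketched in a few lines of standard-library Python. This is an illustration of the general technique, not a description of Appstractor's actual pipeline: the `SCHEMA`, the helper names, and the sample rows are hypothetical.

```python
import hashlib
import json
import unicodedata

SCHEMA = {"name": str, "price": float}  # hypothetical schema for this example


def normalise(row):
    """Apply Unicode NFC normalisation and trim whitespace on string values."""
    return {
        key: unicodedata.normalize("NFC", value).strip() if isinstance(value, str) else value
        for key, value in row.items()
    }


def row_hash(row):
    """Stable SHA-256 fingerprint of a row, independent of key order."""
    payload = json.dumps(row, sort_keys=True, ensure_ascii=False)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def is_valid(row):
    """A row is valid if it has exactly the schema's fields with the right types."""
    return row.keys() == SCHEMA.keys() and all(
        isinstance(row[field], expected) for field, expected in SCHEMA.items()
    )


def clean(rows):
    """Normalise rows, drop duplicates by hash, and set aside invalid rows."""
    seen, kept, rejected = set(), [], []
    for row in map(normalise, rows):
        if not is_valid(row):
            rejected.append(row)
            continue
        digest = row_hash(row)
        if digest not in seen:
            seen.add(digest)
            kept.append(row)
    return kept, rejected


rows = [
    {"name": "Caf\u00e9 Table", "price": 49.0},
    {"price": 49.0, "name": "Cafe\u0301 Table "},  # same row: decomposed accent, trailing space
    {"name": "Desk Lamp", "price": "19.99"},       # price is a string, so validation fails
]
kept, rejected = clean(rows)
print(len(kept), len(rejected))  # 1 1
```

Normalising before hashing matters: the first two rows differ byte for byte, yet they describe the same product and collapse into a single record only once their encodings agree.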

Methods such as data validation and enrichment significantly improve dataset quality, making it more valuable for analysis. For instance, organisations that invest in data validation can see tenfold returns, highlighting its importance to marketing effectiveness. Furthermore, the AI-driven web scraping sector, including data scraping with Python, is projected to grow at a compound annual growth rate of 17.8%, underscoring the increasing reliance on efficient data management strategies.

Storing cleaned data in structured formats such as JSON, CSV, or Parquet, or loading it directly into a data warehouse such as BigQuery or object storage such as S3 or GCS, streamlines access and analysis and supports informed decision-making. Establishing a comprehensive data governance framework is crucial for maintaining integrity and ensuring compliance with regulations like GDPR and CCPA. However, obstacles to compliance, such as human error and outdated records, must be addressed. Regular audits of the data are essential to verify accuracy and relevance, ensuring alignment with evolving marketing strategies. By implementing these practices, marketers can improve the effectiveness of their campaigns and drive better engagement with their target audiences.
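As a small illustration of storing cleaned rows in structured formats, the sketch below writes the same records to JSON and CSV using only the standard library. The sample rows, file names, and temporary output directory are assumptions; Parquet output and warehouse loading require third-party libraries and are not shown.

```python
import csv
import json
import tempfile
from pathlib import Path

# Hypothetical cleaned rows.
rows = [
    {"name": "Desk Lamp", "price": 19.99},
    {"name": "Office Chair", "price": 129.0},
]


def write_json(rows, path):
    """Write rows as a JSON array, keeping non-ASCII characters readable."""
    path.write_text(json.dumps(rows, indent=2, ensure_ascii=False), encoding="utf-8")


def write_csv(rows, path):
    """Write rows as CSV with a header taken from the first row's keys."""
    with path.open("w", newline="", encoding="utf-8") as handle:
        writer = csv.DictWriter(handle, fieldnames=list(rows[0]))
        writer.writeheader()
        writer.writerows(rows)


out_dir = Path(tempfile.mkdtemp())  # temporary directory for the example
write_json(rows, out_dir / "products.json")
write_csv(rows, out_dir / "products.csv")
print(sorted(p.name for p in out_dir.iterdir()))  # ['products.csv', 'products.json']
```

JSON preserves value types such as numbers, whereas CSV stores everything as text, so a CSV consumer has to convert prices back to numbers when reading.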

Conclusion

Data scraping with Python serves as a vital tool for marketers aiming to leverage information extraction for strategic decision-making. Mastering this technique enables professionals to gain insights into market trends and competitor activities, empowering them to respond swiftly to evolving consumer demands. Effectively scraping and managing data can significantly enhance marketing efforts and lead to improved business outcomes.

Key points discussed throughout the article include:

- Understanding the fundamentals of data scraping.

- Selecting the appropriate tools and libraries.

- Adhering to ethical guidelines.

- Optimising the scraping process for quality data.

Additionally, the importance of implementing effective data management practices post-scraping was emphasised, illustrating how these steps contribute to more informed marketing strategies and enhanced campaign results.

Ultimately, the landscape of data scraping is evolving. Marketers must stay informed about best practices and legal considerations to maximise the benefits of this powerful technique. By prioritising ethical practices and utilising the right tools, professionals can ensure their data scraping efforts are not only efficient but also responsible, paving the way for sustainable growth and success in their marketing endeavours.

Frequently Asked Questions

What is data scraping with Python?

Data scraping with Python is an automated technique for extracting information from websites and transforming unstructured data into a structured format suitable for analysis.

Why is data scraping important for marketing professionals?

Data scraping provides insights into competitor pricing, customer reviews, and market trends, which are essential for data-driven decision-making and allow companies to adjust their strategies in real-time.

What are some features of Appstractor that enhance marketplace performance?

Appstractor enhances marketplace performance through seller reputation and reviews, listing availability alerts, and brand sentiment tracking.

Why is knowledge of HTML and CSS important for data scraping?

Understanding HTML and CSS is crucial for efficiently extracting information, as these technologies dictate the arrangement of web pages, enabling marketers to navigate and obtain necessary information effectively.

What is the expected impact of data extraction on marketing decision-making by 2026?

The impact of data extraction on marketing decision-making is expected to increase significantly, allowing companies to quickly adapt to changing market conditions and respond to consumer demands.

What are the ethical and legal considerations in data scraping?

Companies must comply with data protection regulations, such as GDPR, and adhere to website guidelines to avoid potential pitfalls in their information-gathering efforts.

What are some popular Python libraries for data scraping?

Popular Python libraries for data scraping include Beautiful Soup, Scrapy, and Selenium, each offering unique benefits suited to different data extraction needs.

What is Beautiful Soup used for?

Beautiful Soup excels in parsing HTML and XML documents and is widely adopted for projects involving static content due to its user-friendly API and ability to handle malformed HTML.

When should Scrapy be used for data scraping?

Scrapy is ideal for large-scale data scraping projects as it can handle thousands of simultaneous requests efficiently, making it suitable for marketers dealing with extensive datasets.

What is the advantage of using Selenium for data scraping?

Selenium is preferred for extracting dynamic content rendered by JavaScript and can mimic user interactions, making it appropriate for complex web applications.

How can Appstractor enhance scalability in data scraping?

Appstractor offers cloud-based data extraction services that enhance scalability and alleviate challenges associated with infrastructure management, providing organised information delivery in various formats.

What resources does Appstractor provide to assist users?

Appstractor provides user manuals and FAQs to guide users in effectively utilising its services, making the data scraping process smoother and more efficient.
