Introduction

Mastering data extraction is essential in today’s data-driven landscape. Businesses increasingly rely on insights derived from diverse information sources. This guide presents a comprehensive roadmap for leveraging Python's capabilities to streamline the extraction process. By doing so, users can efficiently gather and analyse both structured and unstructured data. However, with a multitude of tools and techniques available, navigating the complexities of data extraction poses a challenge. How can one ensure accuracy and efficiency in this critical task?

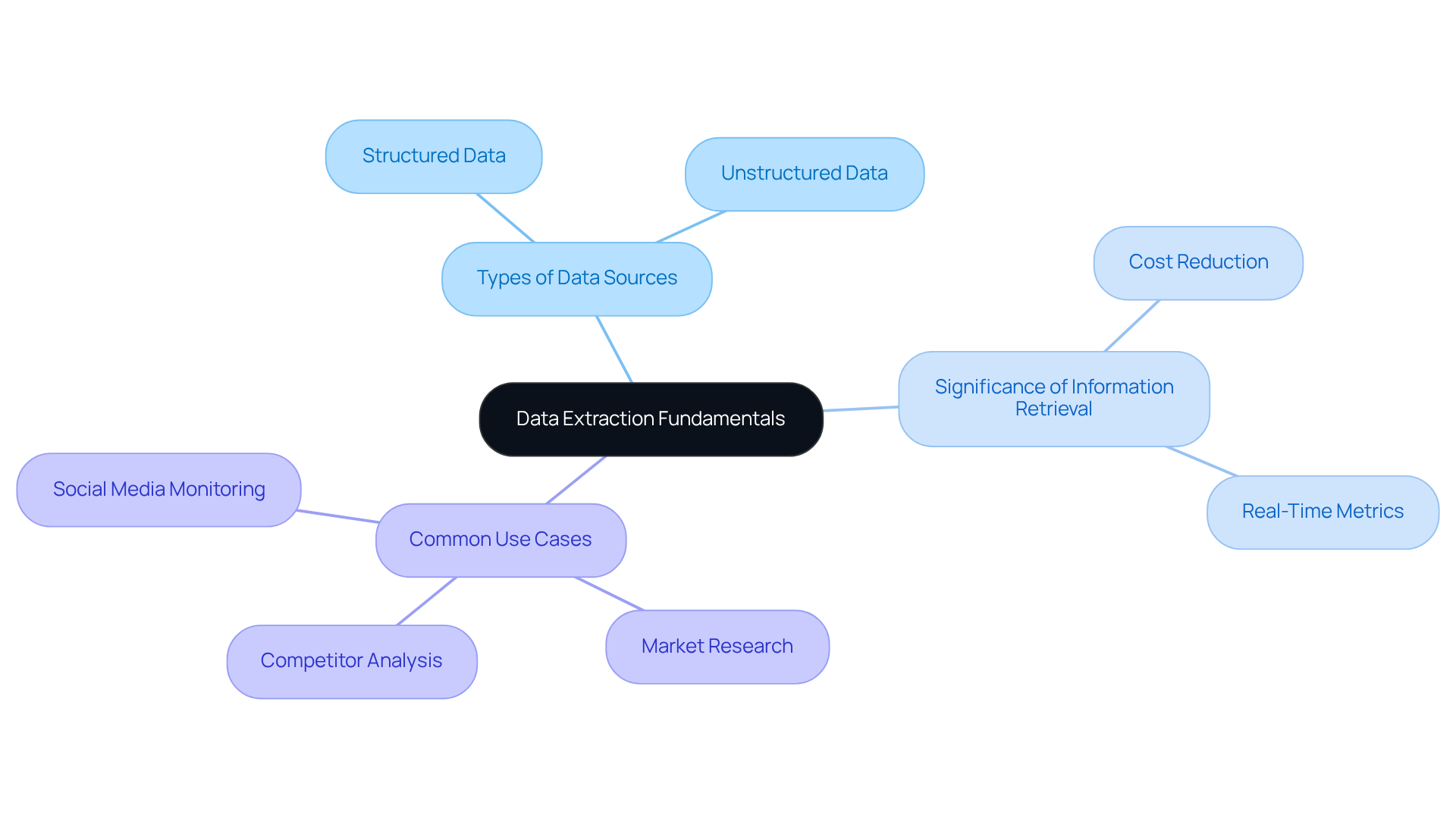

Understand Data Extraction Fundamentals

Data extraction, which this guide also refers to as information retrieval, is the gathering of information from diverse sources for processing and analysis. Sources include both structured data from databases and unstructured data from documents and web pages. Key concepts include:

- Types of Data Sources: Structured data, such as that found in databases, is organised and easily searchable. In contrast, unstructured data, including text files and PDFs, lacks a predefined format, making it more challenging to analyse.

- Significance of Information Retrieval: Efficient information retrieval is crucial for enhancing decision-making and gaining insights into business activities. Organisations that implement automated data collection can achieve up to an 80% reduction in processing costs and access critical business metrics in real time, significantly improving their responsiveness to market changes.

- Common Use Cases: Data gathering is utilised in various scenarios, including market research, competitor analysis, and social media monitoring. For instance, e-commerce companies leverage real-time information scraping to monitor competitor pricing and inventory levels, enabling them to adapt strategies swiftly and maintain a competitive edge.

Understanding these fundamentals equips readers to navigate the complexities of the information retrieval process effectively.
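The difference between structured and unstructured sources can be illustrated with a minimal sketch that uses only the Python standard library. The sample CSV text, the free-text note, and the field names (`sku`, `price`) are hypothetical and chosen purely for illustration.

```python
import csv
import io
import re

# Hypothetical structured input: columns are known in advance.
STRUCTURED_CSV = "sku,price\nA100,19.99\nB200,5.50\n"

# Hypothetical unstructured input: the same facts buried in free text.
UNSTRUCTURED_NOTE = "Item A100 now costs 19.99; item B200 dropped to 5.50."


def read_structured(text):
    """Parse CSV text into a {sku: price} mapping using the header row."""
    reader = csv.DictReader(io.StringIO(text))
    return {row["sku"]: float(row["price"]) for row in reader}


def read_unstructured(text):
    """Recover (sku, price) pairs from free text with a regular expression."""
    # A SKU is assumed to be one capital letter followed by three digits,
    # and a price is assumed to have exactly two decimal places.
    pattern = re.compile(r"\b([A-Z]\d{3})\b\D*?(\d+\.\d{2})")
    return {sku: float(price) for sku, price in pattern.findall(text)}


structured = read_structured(STRUCTURED_CSV)
unstructured = read_unstructured(UNSTRUCTURED_NOTE)
```

Both functions return the same mapping here, but the structured version relies only on the header row, while the unstructured version depends on a pattern that must be adjusted whenever the wording of the text changes.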

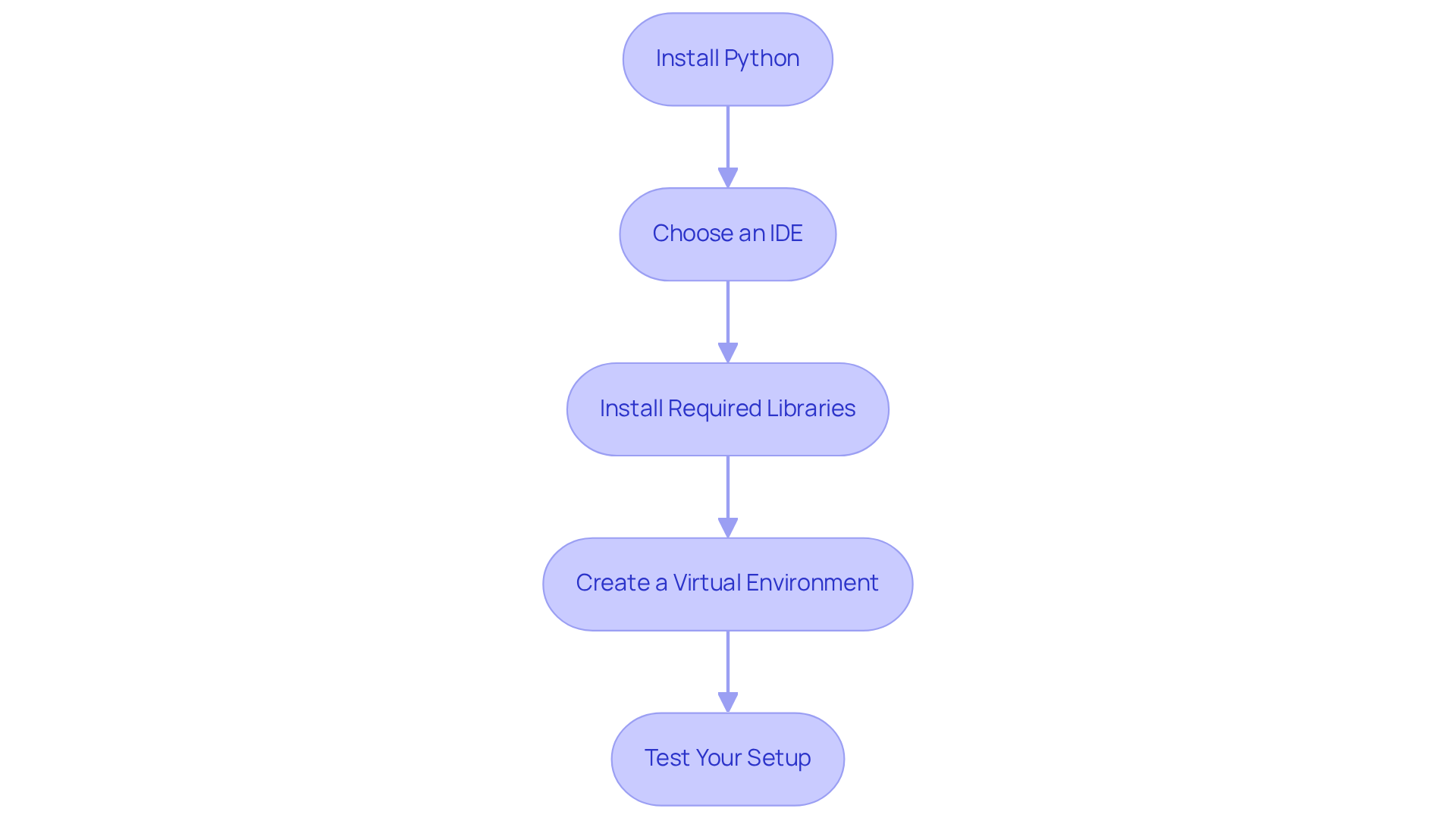

Set Up Your Python Environment and Tools

To effectively begin data extraction using Python, follow these essential steps to set up your environment:

- Install Python: Download and install the latest version of Python from the official website. Ensure you check the box to add Python to your system PATH during installation.

- Choose an IDE: Select an Integrated Development Environment (IDE) such as PyCharm, Visual Studio Code, or Jupyter Notebook. These are popular choices for coding in Python. Recent figures indicate that PyCharm and Visual Studio Code rank among the leading IDEs preferred by developers in 2026, with many users reporting improved productivity in information retrieval tasks.

- Install Required Libraries: Utilise pip to install key libraries for data extraction:

  - requests: For making HTTP requests.
  - BeautifulSoup (installed as the beautifulsoup4 package): For parsing HTML and XML documents.
  - pandas: For manipulation and analysis of data.
  - PyPDF2 or pdfplumber: For extracting information from PDF files.

- Create a Virtual Environment: It is advisable to create a virtual environment to manage dependencies effectively. Use the command `python -m venv myenv` and activate it (`source myenv/bin/activate` on macOS and Linux, `myenv\Scripts\activate` on Windows) to isolate your project’s libraries. Ideally, create and activate the environment before installing the libraries above so that they are installed into it. Best practices suggest regularly updating your virtual environment to include the latest library versions, ensuring compatibility and security.

- Test Your Setup: Write a simple script to verify that your setup is functioning correctly. For instance, import the libraries you installed and print a success message to confirm everything is in order. As Michael Kennedy, a prominent figure in the Python community, emphasises, ensuring your environment is correctly set up is crucial for a smooth development experience.

By following these steps, you will have a robust environment ready for data extraction with Python.
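The setup test described in the last step could look like the following minimal sketch. The module list is an assumption based on the libraries named above (note that BeautifulSoup is imported as `bs4`), and `find_missing` is a hypothetical helper name.

```python
import importlib

# Import names for the libraries listed in the steps above.
# BeautifulSoup is imported as "bs4", not by its package name.
REQUIRED_MODULES = ["requests", "bs4", "pandas", "pdfplumber"]


def find_missing(modules):
    """Return the names of modules that cannot be imported."""
    missing = []
    for name in modules:
        try:
            importlib.import_module(name)
        except ImportError:
            missing.append(name)
    return missing


if __name__ == "__main__":
    missing = find_missing(REQUIRED_MODULES)
    if missing:
        print("Missing libraries:", ", ".join(missing))
    else:
        print("Setup OK: all libraries imported successfully.")
```

Running the script inside the activated virtual environment reports which libraries, if any, still need to be installed with pip.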

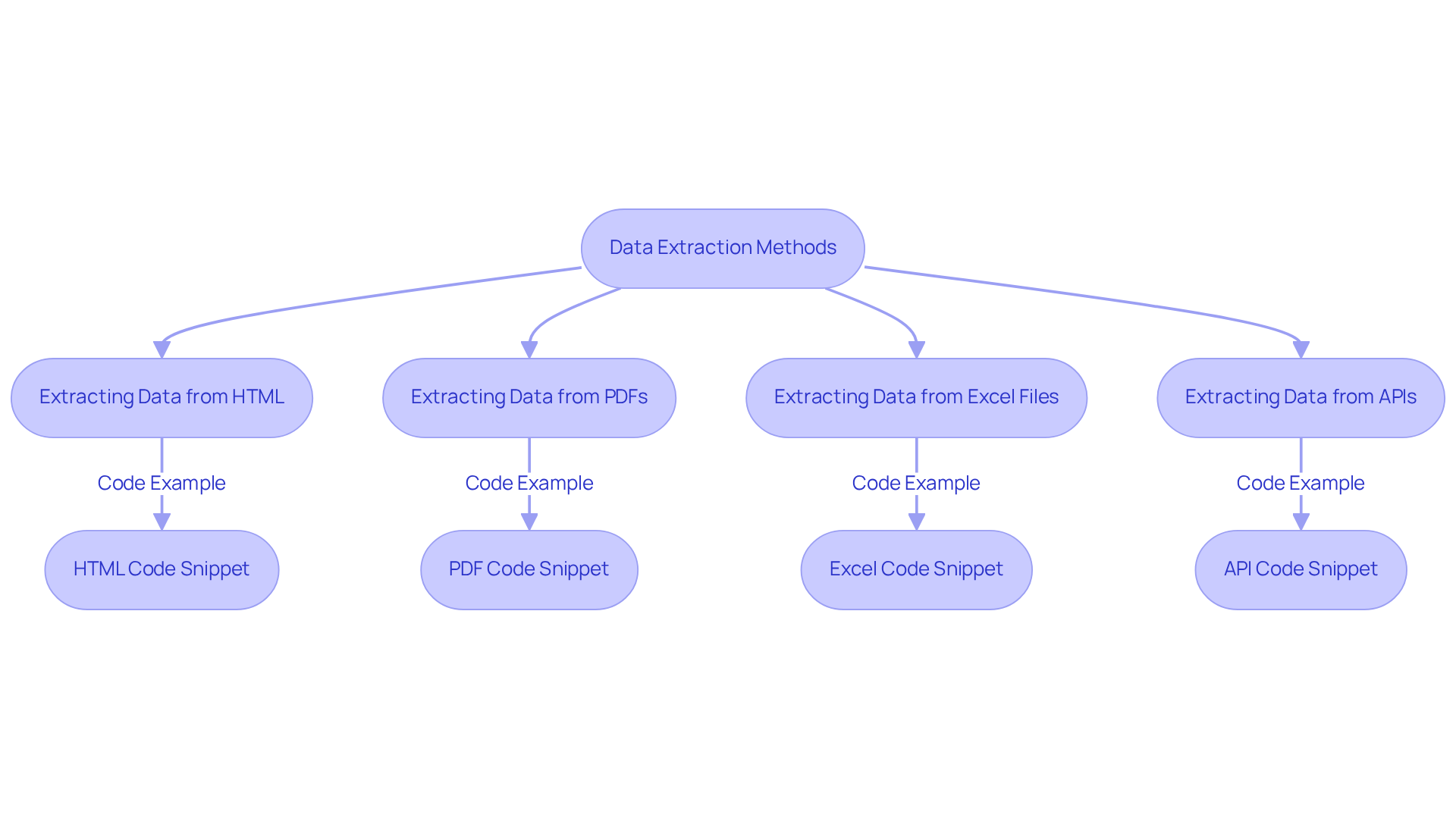

Execute Data Extraction from Different Document Types

Information extraction can be conducted on various document types. Below are methods for handling some common formats:

Extracting Data from HTML

- Utilise the `requests` library to fetch webpage content:

  ```python
  import requests

  url = 'http://example.com'
  response = requests.get(url, timeout=10)  # Avoid waiting indefinitely on a slow server
  response.raise_for_status()  # Raise an exception on 4xx/5xx responses
  html_content = response.text
  ```

- Parse the HTML using `BeautifulSoup`:

  ```python
  from bs4 import BeautifulSoup

  soup = BeautifulSoup(html_content, 'html.parser')
  data = soup.find_all('tag_name')  # Replace 'tag_name' with the actual tag
  ```
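To show what tag-based extraction involves, here is a dependency-free sketch that collects link targets using the standard library's `html.parser` module, which is the parser that the `'html.parser'` argument above selects. It is not BeautifulSoup itself; with BeautifulSoup, a comparable result would come from calling `soup.find_all('a')` and reading each tag's `href`. `SAMPLE_HTML`, `LinkCollector`, and `extract_links` are hypothetical names used only for illustration.

```python
from html.parser import HTMLParser

# Hypothetical page content standing in for response.text.
SAMPLE_HTML = """
<html><body>
  <a href="/pricing">Pricing</a>
  <p>No link here.</p>
  <a href="https://example.com/docs">Docs</a>
</body></html>
"""


class LinkCollector(HTMLParser):
    """Collect the href attribute of every <a> tag encountered."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs for the current tag.
        if tag == "a":
            href = dict(attrs).get("href")
            if href is not None:
                self.links.append(href)


def extract_links(html):
    """Return all link targets in document order."""
    parser = LinkCollector()
    parser.feed(html)
    parser.close()
    return parser.links


links = extract_links(SAMPLE_HTML)
```

The same idea applies to any tag: decide which element carries the data, then read its attributes or text.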

Extracting Data from PDFs

- Use `PyPDF2` or `pdfplumber` to read PDF files:

  ```python
  import pdfplumber

  with pdfplumber.open('file.pdf') as pdf:
      first_page = pdf.pages[0]
      text = first_page.extract_text()
  ```

Extracting Data from Excel Files

- Leverage `pandas` to read Excel files:

  ```python
  import pandas as pd

  df = pd.read_excel('file.xlsx')
  data = df['column_name']  # Replace 'column_name' with the actual column
  ```

Extracting Data from APIs

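- Make API calls using `requests`:

  ```python
  import requests

  api_url = 'http://api.example.com/data'
  response = requests.get(api_url, timeout=10)
  response.raise_for_status()  # Stop early on 4xx/5xx responses
  json_data = response.json()
  ```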
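JSON returned by an API is often nested, so a common next step is to flatten each record into a single-level row. The sketch below assumes a hypothetical response body with an `items` list; the payload, the field names, and the helper functions `flatten` and `payload_to_rows` are illustrative only, and a real API will have its own structure.

```python
import json

# Hypothetical response body; a real API will define its own structure.
SAMPLE_BODY = """
{
  "items": [
    {"id": 1, "name": "Widget", "price": {"amount": 9.5, "currency": "USD"}},
    {"id": 2, "name": "Gadget", "price": {"amount": 12.0, "currency": "EUR"}}
  ]
}
"""


def flatten(record, parent_key="", sep="."):
    """Flatten nested dictionaries into one level with dotted keys."""
    flat = {}
    for key, value in record.items():
        full_key = f"{parent_key}{sep}{key}" if parent_key else key
        if isinstance(value, dict):
            flat.update(flatten(value, full_key, sep))
        else:
            flat[full_key] = value
    return flat


def payload_to_rows(payload, list_key="items"):
    """Return one flat dictionary per record found under list_key."""
    return [flatten(item) for item in payload.get(list_key, [])]


rows = payload_to_rows(json.loads(SAMPLE_BODY))
```

A list of flat dictionaries such as `rows` can then be passed to `pd.DataFrame(rows)` for further analysis.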

By mastering these techniques, you will enhance your ability to extract valuable data from diverse sources, significantly improving your data analysis capabilities.

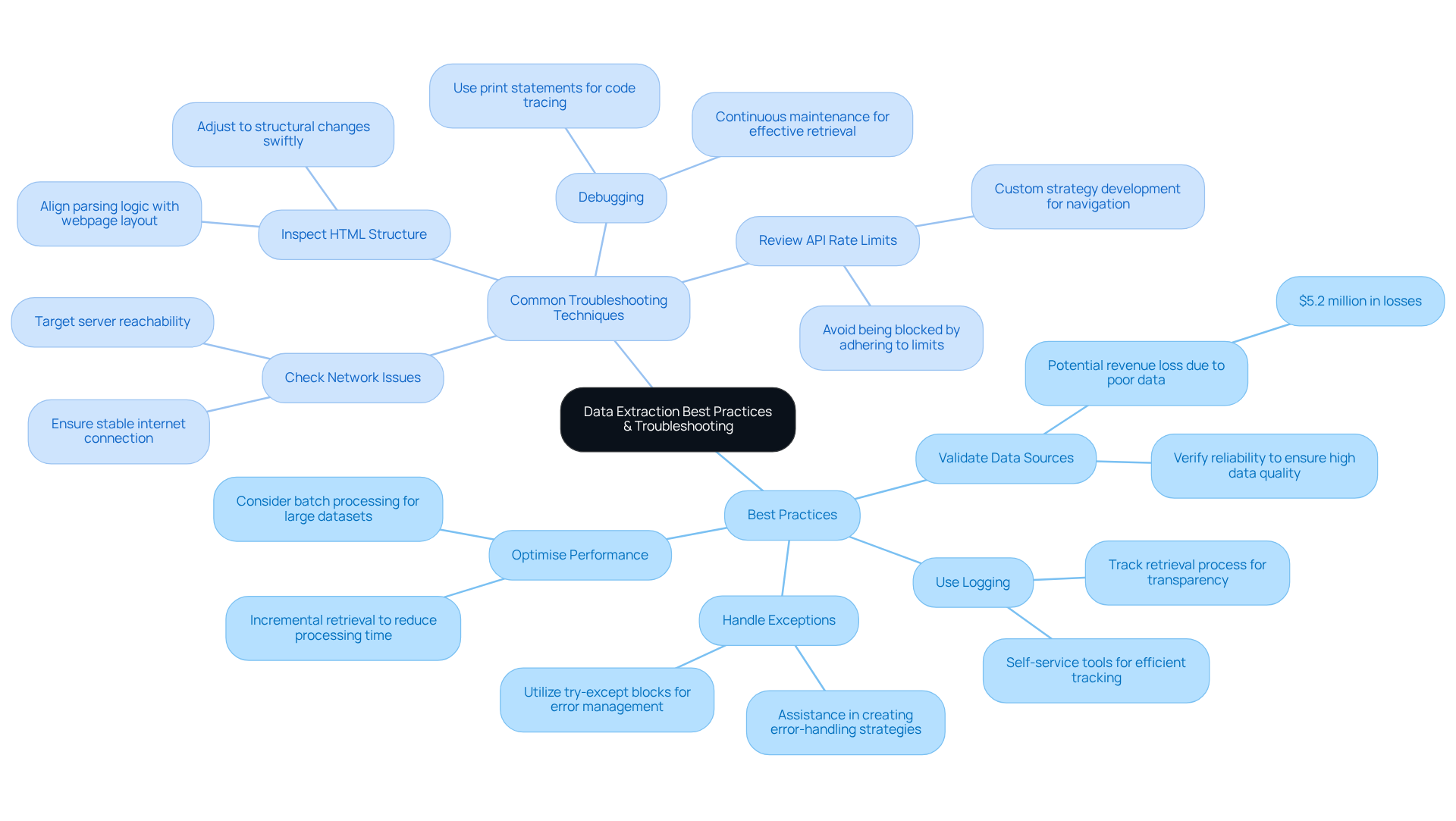

Implement Best Practices and Troubleshooting Techniques

To ensure successful data extraction, consider the following best practices and troubleshooting techniques, which can be combined with Appstractor's rotating proxy server solutions:

Best Practices:

- Validate Data Sources: Always verify the reliability of your data sources to ensure high data quality. Poor data can lead to significant losses, with businesses potentially losing up to $5.2 million in revenue due to untapped data. Appstractor's rotating proxy servers facilitate reliable access to various information sources.

- Use Logging: Implement logging in your scripts to track the retrieval process. This aids in issue identification and enhances operational transparency. Appstractor's self-service tools allow for efficient tracking of data retrieval activities.

- Handle Exceptions: Utilise try-except blocks to manage errors gracefully. This prevents script crashes and ensures smoother execution. With Appstractor's managed services, you receive assistance in creating strong error-handling strategies.

- Optimise Performance: For large datasets, consider batch processing or asynchronous requests to improve efficiency. Incremental retrieval can significantly reduce processing time and resource usage. Appstractor's rotating proxy servers are designed for scalability, supporting performance during high-demand information gathering tasks.
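The logging, exception handling, and batch processing practices can be combined in one small sketch. It assumes a caller-supplied `extract_one` function, which is hypothetical here. In a real script it might wrap `requests.get` or `pdfplumber.open`. The `fake_extract` stand-in and the file names are illustrative only.

```python
import logging

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(message)s")
logger = logging.getLogger("extraction")


def chunked(items, size):
    """Yield consecutive slices of at most `size` items."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


def extract_all(sources, extract_one, batch_size=2):
    """Run extract_one on every source, logging failures instead of crashing."""
    results, failures = {}, []
    for number, batch in enumerate(chunked(sources, batch_size), start=1):
        logger.info("Processing batch %d (%d sources)", number, len(batch))
        for source in batch:
            try:
                results[source] = extract_one(source)
            except Exception as exc:  # Broad on purpose: keep the run alive
                logger.warning("Failed to extract %s: %s", source, exc)
                failures.append(source)
    return results, failures


def fake_extract(source):
    """Stand-in extractor that fails for one hypothetical source."""
    if source == "broken.pdf":
        raise ValueError("unreadable file")
    return source.upper()


results, failures = extract_all(["a.html", "broken.pdf", "c.xlsx"], fake_extract)
```

Returning the failed sources alongside the results makes it straightforward to retry or report them after the run, rather than losing the whole job to a single bad input.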

Common Troubleshooting Techniques:

- Check Network Issues: If your script fails to fetch data, ensure that your internet connection is stable and that the target server is reachable. Connectivity issues are a frequent cause of extraction failures. Appstractor states 99.9% uptime for its service, reducing the likelihood of interruptions impacting your operations.

- Inspect HTML Structure: If information extraction fails, examine the HTML structure of the webpage to ensure that your parsing logic aligns with the current layout. Alterations in structure can disrupt extraction. Appstractor's services help in adjusting to these changes swiftly.

- Review API Rate Limits: When working with APIs, be aware of rate limits to avoid being blocked. This is a common issue that can halt data retrieval. Appstractor's custom strategy development aids in navigating these limitations.

- Debugging: Use print statements or debugging tools to trace the flow of your code. This helps to pinpoint where failures occur and facilitates quicker resolutions. Ongoing maintenance and support from Appstractor help keep retrieval processes running effectively.
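One common way to respect API rate limits is to retry with exponentially increasing delays. The sketch below is an illustration under stated assumptions, not a definitive implementation: `RateLimited` is a hypothetical exception that a fetch function would raise on an HTTP 429 (Too Many Requests) response, and the `sleep` parameter is injectable so the logic can be exercised without actually waiting.

```python
import time


class RateLimited(Exception):
    """Hypothetical error raised by a fetch function on an HTTP 429 response."""


def fetch_with_backoff(fetch, max_attempts=4, base_delay=1.0, sleep=time.sleep):
    """Call fetch(), doubling the wait after each rate-limit error."""
    for attempt in range(max_attempts):
        try:
            return fetch()
        except RateLimited:
            if attempt == max_attempts - 1:
                raise  # Out of attempts: let the caller decide what to do
            sleep(base_delay * 2 ** attempt)


# Demonstration with a stand-in fetch that is rate limited twice, then succeeds.
calls = {"count": 0}
waits = []


def flaky_fetch():
    calls["count"] += 1
    if calls["count"] <= 2:
        raise RateLimited("429 Too Many Requests")
    return {"status": "ok"}


result = fetch_with_backoff(flaky_fetch, sleep=waits.append)
```

In a real script, the fetch function might check `response.status_code == 429` after a `requests.get` call and raise the exception itself. Where the API sends a `Retry-After` header, that value is usually a better guide than a fixed schedule.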

By implementing these best practices and troubleshooting techniques, and utilising Appstractor's rotating proxy servers, you will strengthen your Python data extraction skills and achieve more reliable outcomes.

Conclusion

In conclusion, mastering data extraction with Python is an essential skill that enables individuals and organisations to effectively harness information from diverse sources. By grasping the fundamentals of data extraction, establishing the right environment, and utilising effective techniques, users can derive insights that inform decision-making and improve operational efficiency.

This guide has covered key concepts, including the distinctions between structured and unstructured data, the significance of reliable data sources, and practical methods for extracting information from HTML, PDFs, Excel files, and APIs. Furthermore, best practices and troubleshooting strategies have been emphasised to facilitate a seamless data extraction process, highlighting the importance of validation, error handling, and performance optimisation.

As the demand for data-driven insights continues to escalate, adopting these techniques and tools will enable individuals and organisations to maintain a competitive edge. Whether for market research, competitor analysis, or operational enhancements, the capability to efficiently extract and analyse data using Python will prove to be a vital asset. It is imperative to leverage these insights and take decisive action, transforming raw data into strategic advantages.

Frequently Asked Questions

What is information retrieval?

Information retrieval is the gathering of information from various sources for processing and analysis, including both structured data from databases and unstructured data from documents and web pages.

What are the types of data sources mentioned in the article?

The article mentions two types of data sources: structured data, which is organised and easily searchable, and unstructured data, which lacks a predefined format, making it more challenging to analyse.

Why is efficient information retrieval important?

Efficient information retrieval is crucial for enhancing decision-making and gaining insights into business activities. It can lead to significant cost reductions and improved responsiveness to market changes.

How much can organisations reduce processing costs with automated data collection?

Organisations that implement automated data collection can achieve up to an 80% reduction in processing costs.

What are some common use cases for data gathering?

Common use cases for data gathering include market research, competitor analysis, and social media monitoring.

How do e-commerce companies utilise data gathering?

E-commerce companies use real-time information scraping to monitor competitor pricing and inventory levels, allowing them to quickly adapt their strategies and maintain a competitive edge.
