Introduction

The digital landscape is rich with valuable data; however, many organisations find it challenging to harness this potential due to ineffective extraction methods. Mastering the extraction of data from websites to Excel not only streamlines data analysis but also enables businesses to make informed decisions quickly.

With a variety of tools and techniques available, determining the best approach for specific needs can be daunting. This guide explores the intricacies of data extraction, providing insights and practical steps to fully leverage web data in Excel.

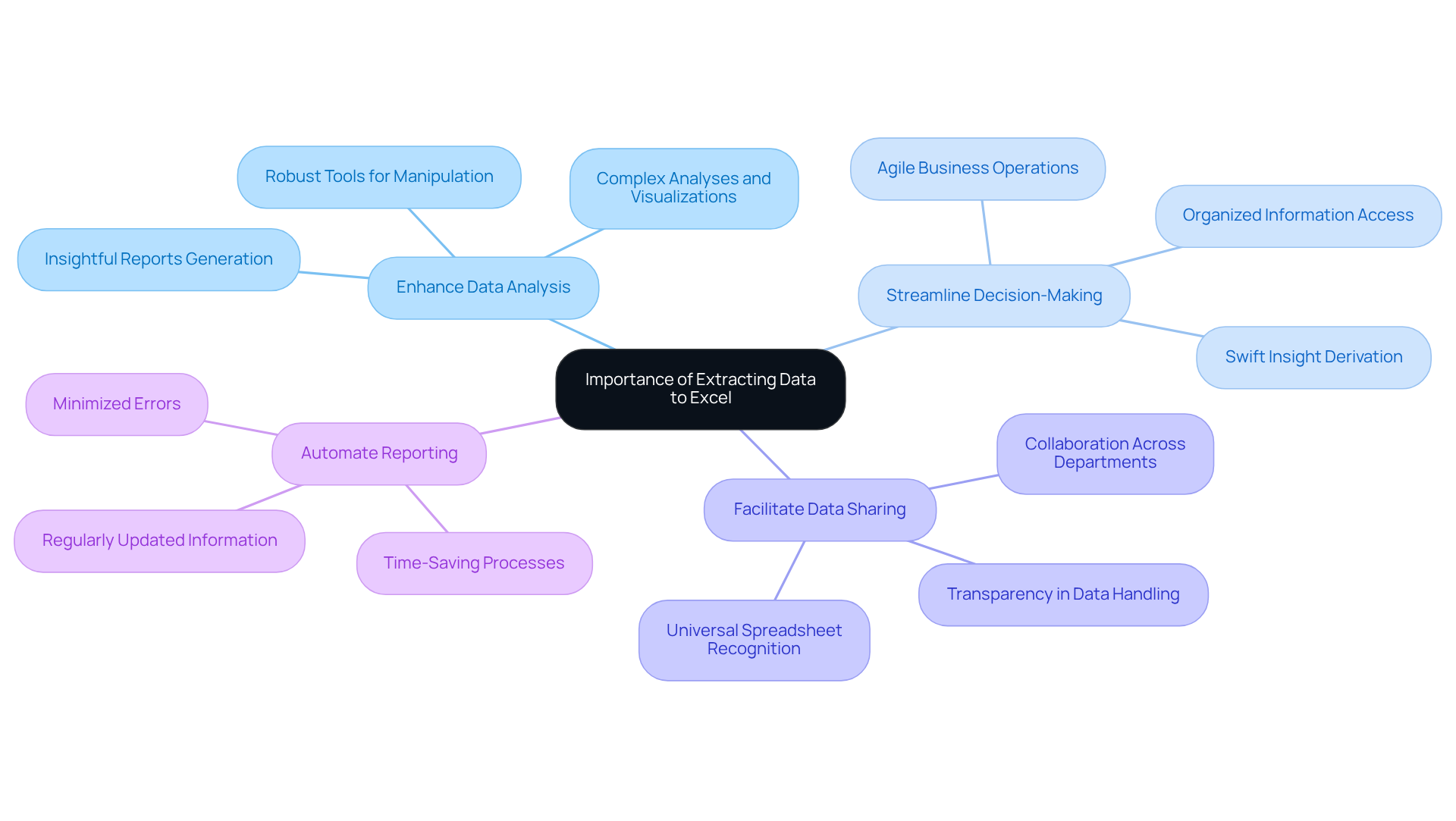

Understand the Importance of Extracting Data to Excel

The ability to extract data from websites to Excel represents a strategic advantage for businesses seeking to analyse and leverage insights effectively. By converting web data into Excel, organisations can achieve several key benefits:

- Enhance Data Analysis: Excel's robust tools empower users to manipulate data, conduct complex analyses, create visualisations, and generate insightful reports, facilitating a deeper understanding and actionable insights.

- Streamline Decision-Making: With organised information readily accessible, decision-makers can swiftly derive insights that inform strategic choices, leading to more agile and informed business operations.

- Facilitate Data Sharing: Spreadsheet files are universally recognised and easily shared among team members, fostering collaboration and transparency across departments.

- Automate Reporting: Regularly updated information in Excel can streamline reporting processes, saving time and minimising errors, which is crucial for maintaining operational efficiency.

These benefits are the reason to master the data extraction methods detailed in the rest of this guide.

Utilise No-Code Crawlers for Easy Data Extraction

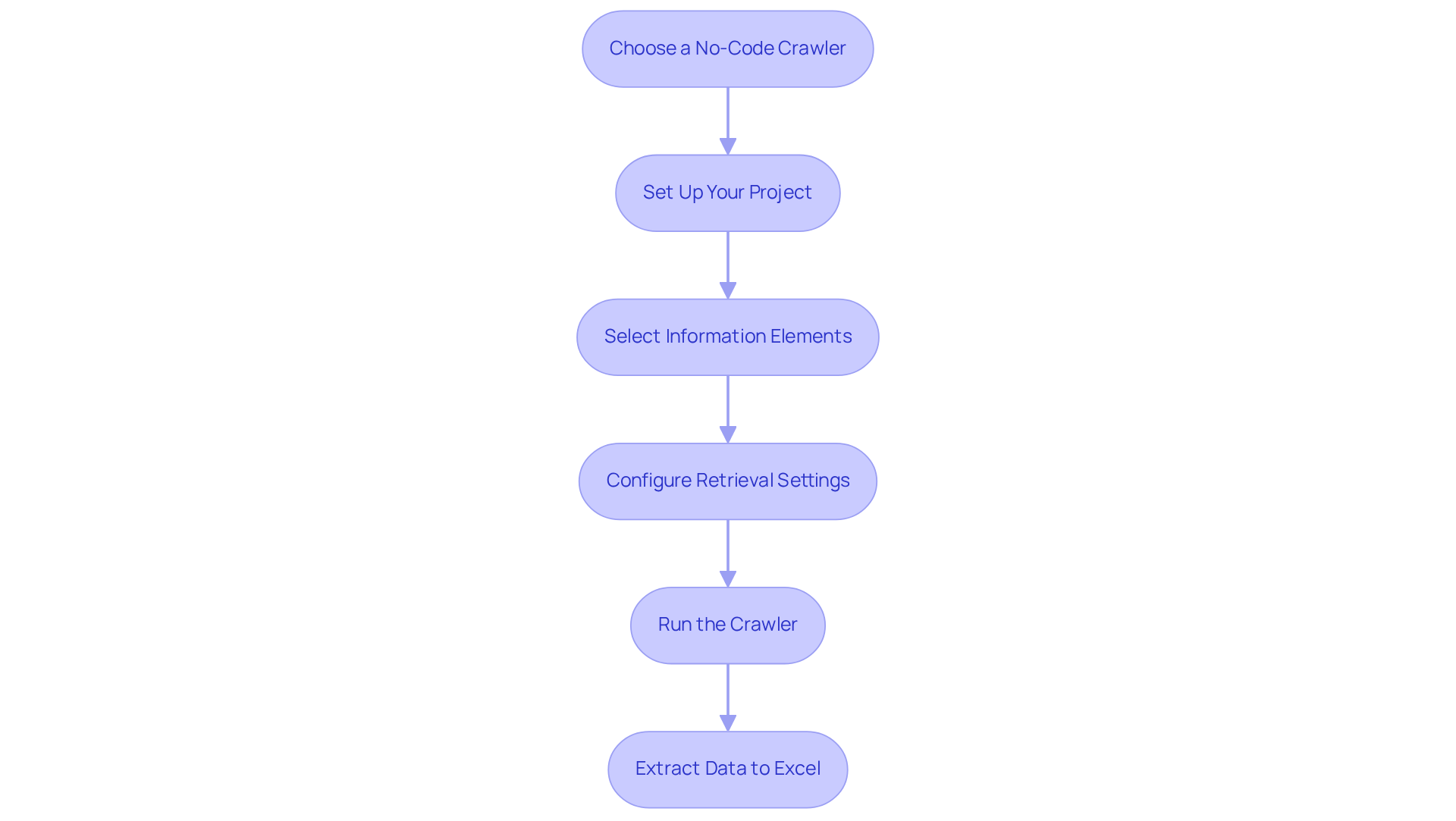

No-code crawlers let users extract data from websites to Excel without writing any code. Here's how to use them effectively:

- Choose a No-Code Crawler: Select a user-friendly platform such as Octoparse, ParseHub, or Web Scraper. These tools offer intuitive interfaces for extracting website data and exporting it to Excel.

- Set Up Your Project: Start a new project within the chosen crawler and input the URL of the website you wish to scrape.

- Select Data Elements: Use the point-and-click interface to choose the specific elements you want to extract. Most crawlers show a preview of the data as you make selections, which helps confirm accuracy.

- Configure Extraction Settings: Adjust settings such as pagination, export format, and scheduling to automate your collections effectively.

- Run the Crawler: Launch the crawler to begin the extraction. Once it completes, export the results as an Excel or CSV file for further analysis.
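Conceptually, a crawler project combines three things: a selector for the elements you clicked, a rule for following pagination, and an export step. The Python sketch below illustrates that flow on two in-memory pages. It is not how Octoparse, ParseHub, or Web Scraper are implemented; the `item` class name, the `rel="next"` link convention, and the `PAGES` dictionary are assumptions invented for the illustration.

```python
import csv
import io
from html.parser import HTMLParser


class ItemParser(HTMLParser):
    """Collects the text of elements with class="item" and the rel="next" link."""

    def __init__(self):
        super().__init__()
        self.items = []        # text of each selected element
        self.next_url = None   # href of the pagination link, if any
        self._item_tag = None  # tag name of the item currently being read

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if "item" in (attrs.get("class") or "").split():
            self._item_tag = tag
            self.items.append("")
        if tag == "a" and attrs.get("rel") == "next":
            self.next_url = attrs.get("href")

    def handle_data(self, data):
        if self._item_tag is not None:
            self.items[-1] += data

    def handle_endtag(self, tag):
        # Simplification: items that nest the same tag are not handled.
        if tag == self._item_tag:
            self._item_tag = None


# Hypothetical two-page site held in memory so the sketch runs offline.
PAGES = {
    "/page1": '<ul><li class="item">Alpha</li><li class="item">Beta</li></ul>'
              '<a rel="next" href="/page2">Next</a>',
    "/page2": '<ul><li class="item">Gamma</li></ul>',
}


def crawl(start_url, pages, max_pages=10):
    """Follow rel="next" links from start_url and return [page, item] rows."""
    rows, url, seen = [], start_url, set()
    while url and url not in seen and len(seen) < max_pages:
        seen.add(url)
        parser = ItemParser()
        parser.feed(pages.get(url, ""))
        parser.close()
        rows.extend([url, text.strip()] for text in parser.items)
        url = parser.next_url
    return rows


def to_csv(rows, header):
    """Serialise rows as CSV text, a format Excel opens directly."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(header)
    writer.writerows(rows)
    return buffer.getvalue()


rows = crawl("/page1", PAGES)
print(to_csv(rows, ["page", "item"]))
```

The `max_pages` cap and the `seen` set play the role of a crawler's pagination limit: they stop the loop if a site links back to a page that was already visited.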

No-code tools have made it possible to move website data into Excel quickly, regardless of technical background. As more companies adopt them, web scraping is becoming accessible to a wider audience and an increasingly useful skill. Firms like Appstractor, with their MobileHorizons API, complement the no-code approach with scraping solutions that include rotating proxies and full-service options. This combination lets non-technical users carry out more intricate web scraping tasks, including gathering hyper-local insights from native mobile applications.

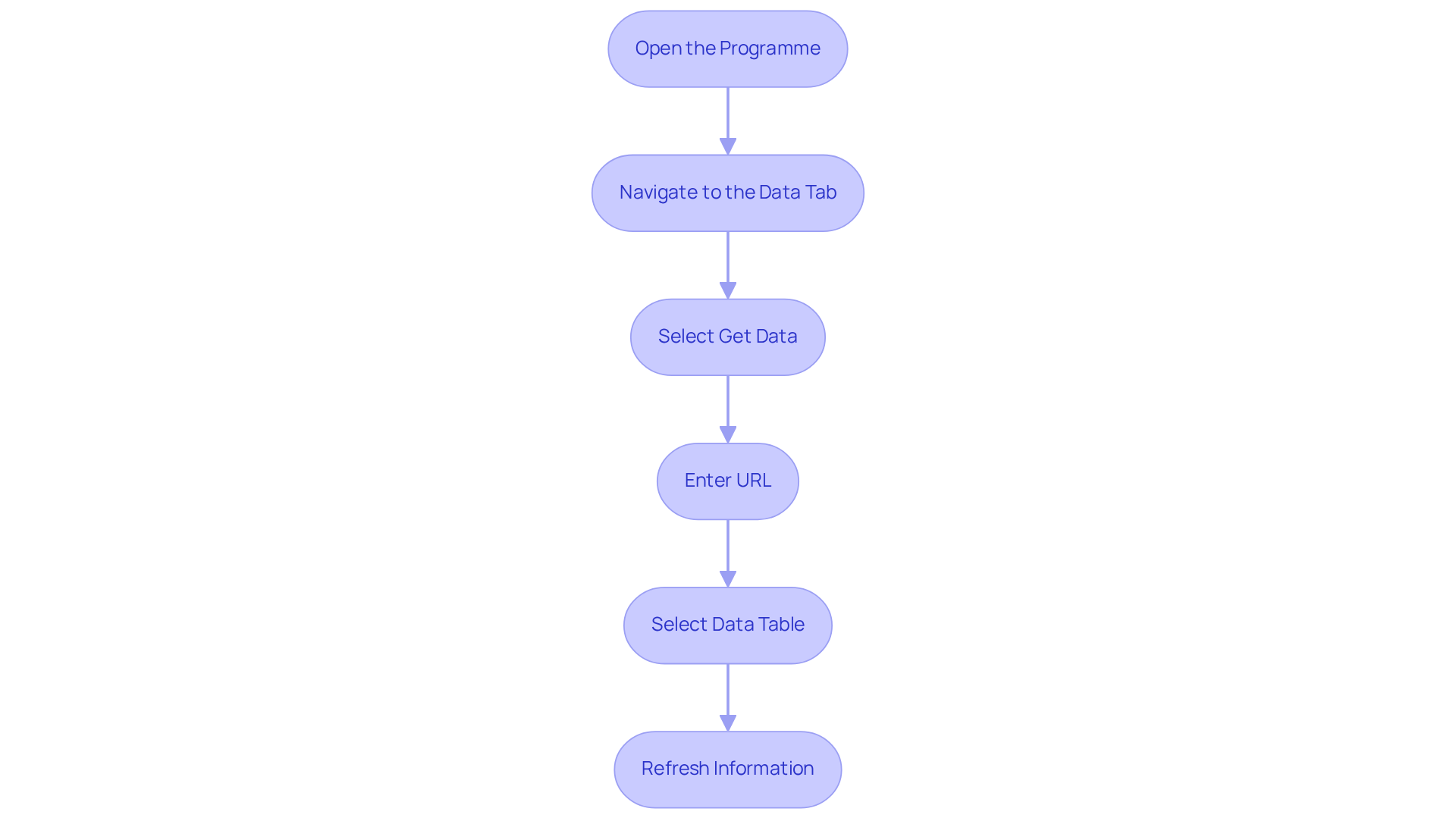

Implement Excel Web Queries for Data Scraping

Excel Web Queries let users pull data from the internet directly into their spreadsheets. Below is a clear guide to implementing this feature:

- Open Excel: Launch Microsoft Excel and create a new workbook.

- Navigate to the Data Tab: Click on the 'Data' tab in the ribbon.

- Select Get Data: Choose 'Get Data' > 'From Other Sources' > 'From Web'.

- Enter URL: Input the URL of the webpage containing the desired information and click 'OK'.

- Select Data Table: In the Navigator pane, choose the table or information you wish to import, then click 'Load' to bring it into your spreadsheet.

- Refresh the Data: Set up a refresh schedule in the query's properties ('Refresh every n minutes') to keep your data updated automatically, for example every 5 minutes, or refresh on demand.

By using Excel Web Queries, businesses can pull website data into Excel and keep it current through scheduled refreshes. Appstractor's data mining solutions can complement this workflow: Rotating Proxy Servers provide self-serve IPs, while Full Service provides turnkey data delivery from a range of online sources. Digital marketing professionals, for example, can then work with up-to-date web data directly in their spreadsheets.
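When the Navigator pane lists the tables it found, it is presenting the HTML `<table>` elements detected on the page. As a rough illustration of that idea (not Excel's actual implementation), the Python sketch below collects every table in a page using only the standard library. The sample HTML is invented, and nested tables are not handled.

```python
from html.parser import HTMLParser


class TableParser(HTMLParser):
    """Collects every <table> in a page as a list of rows of cell text."""

    def __init__(self):
        super().__init__()
        self.tables = []
        self._cell = None  # text fragments of the cell being read, or None

    def handle_starttag(self, tag, attrs):
        if tag == "table":
            self.tables.append([])
        elif tag == "tr" and self.tables:
            self.tables[-1].append([])
        elif tag in ("td", "th") and self.tables and self.tables[-1]:
            self._cell = []

    def handle_data(self, data):
        if self._cell is not None:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            self.tables[-1][-1].append("".join(self._cell).strip())
            self._cell = None


def list_tables(html_text):
    """Return all tables found in html_text, in document order."""
    parser = TableParser()
    parser.feed(html_text)
    parser.close()
    return parser.tables


# Invented sample page with one data table and one layout table.
SAMPLE = """
<table><tr><th>Product</th><th>Price</th></tr>
<tr><td>Widget</td><td>9.99</td></tr></table>
<table><tr><td>Footer link</td></tr></table>
"""

tables = list_tables(SAMPLE)
for index, table in enumerate(tables):
    print(f"Table {index}: {len(table)} rows, first row {table[0]}")
```

Seeing a page as a numbered list of tables also explains a common surprise with Web Queries: data rendered by JavaScript, or laid out with elements other than `<table>`, may not appear among the importable tables at all.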

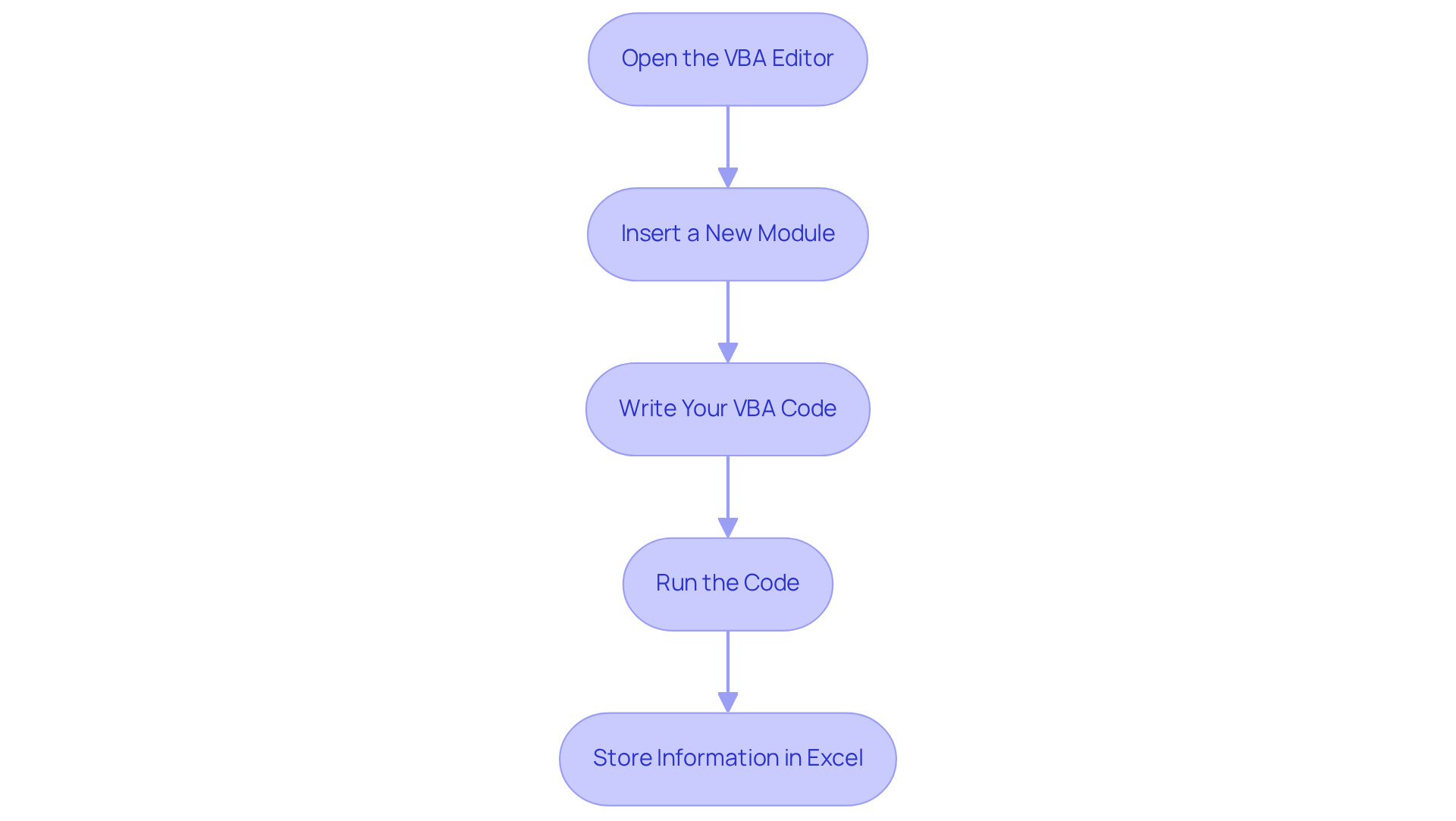

Leverage Excel VBA for Advanced Data Extraction

Utilising VBA for Information Extraction

VBA (Visual Basic for Applications) enables more sophisticated automation of data extraction. Below is a step-by-step guide to using VBA for this purpose:

- Open the VBA Editor: Press ALT + F11 in Excel to access the Visual Basic for Applications editor.

- Insert a New Module: In the Project Explorer, right-click on any item, select 'Insert', and then 'Module'. This is where your code will be written.

- Write Your VBA Code: Use the following sample code to initiate data scraping:

```vba
Sub WebScrape()
    Dim http As Object
    Dim html As Object
    Dim website As String

    website = "http://example.com"

    ' Request the page synchronously (third argument False = not asynchronous)
    Set http = CreateObject("MSXML2.XMLHTTP")
    http.Open "GET", website, False
    http.send

    ' Load the response into an HTML document object for parsing
    Set html = CreateObject("htmlfile")
    html.body.innerHTML = http.responseText

    ' Extract data here
End Sub
```

- Run the Code: After closing the VBA editor, execute your macro from Excel (for example via ALT + F8) to start the scraping process.

- Store Data in Excel: Adjust the code to specify where the extracted data should be saved within your Excel workbook.

While VBA adds flexibility and automates repetitive tasks, it is important to recognise its limitations. The COM objects this macro relies on (MSXML2.XMLHTTP and htmlfile) exist only on Windows, so the approach does not carry over to Excel for Mac or Excel for the web. Additionally, the learning curve can be steep compared with languages such as Python, which may deter some users.
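For readers who cannot run the macro because they are not on Windows, the same request, parse, and store flow can be sketched in Python with the standard library alone. This is an illustrative equivalent, not a translation of any particular VBA project: the choice to extract links, the function names, and the output columns are all assumptions.

```python
import csv
import urllib.request
from html.parser import HTMLParser


class LinkParser(HTMLParser):
    """Collects (text, href) pairs for every <a href="..."> element."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self._href = href
                self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append(("".join(self._text).strip(), self._href))
            self._href = None


def fetch(url, timeout=10):
    """GET the page and decode it (the role of http.Open / http.send in the macro)."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


def extract_links(html_text):
    """Parse the HTML and return a list of (text, href) tuples."""
    parser = LinkParser()
    parser.feed(html_text)
    parser.close()
    return parser.links


def save_csv(rows, path):
    """Write rows to a CSV file that Excel can open."""
    # utf-8-sig adds a byte-order mark so Excel detects the encoding.
    with open(path, "w", newline="", encoding="utf-8-sig") as handle:
        writer = csv.writer(handle)
        writer.writerow(["text", "href"])
        writer.writerows(rows)


# Offline demonstration on an inline page. For a live page, call
# extract_links(fetch("http://example.com")) and pass the result to save_csv.
links = extract_links('<p><a href="/a">First</a> and <a href="/b">Second</a></p>')
print(links)
```

Writing a CSV file keeps the sketch dependency-free. Producing a native `.xlsx` workbook would require a third-party library, which is outside the scope of this illustration.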

For more complex web scraping tasks, integrating third-party tools like Selenium can significantly enhance VBA's capabilities. This integration allows for better handling of dynamic content and advanced interactions. However, users should also be prepared to face challenges such as CAPTCHA resolution and advanced blocking techniques that can complicate the scraping process.

To further optimise extraction, businesses can use Appstractor's automated web data extraction services alongside VBA. For instance, Appstractor's Rotating Proxy Servers handle IP rotation, which reduces the likelihood of requests being blocked. The Full Service option offers turnkey data delivery that can supplement what VBA scripts collect. Appstractor's solutions also accommodate other programming languages, such as Python and PHP, which simplifies scaling. VBA therefore remains a dependable option for automating data collection within the Microsoft ecosystem, while Appstractor provides mining solutions for the parts of the process that VBA handles less well.

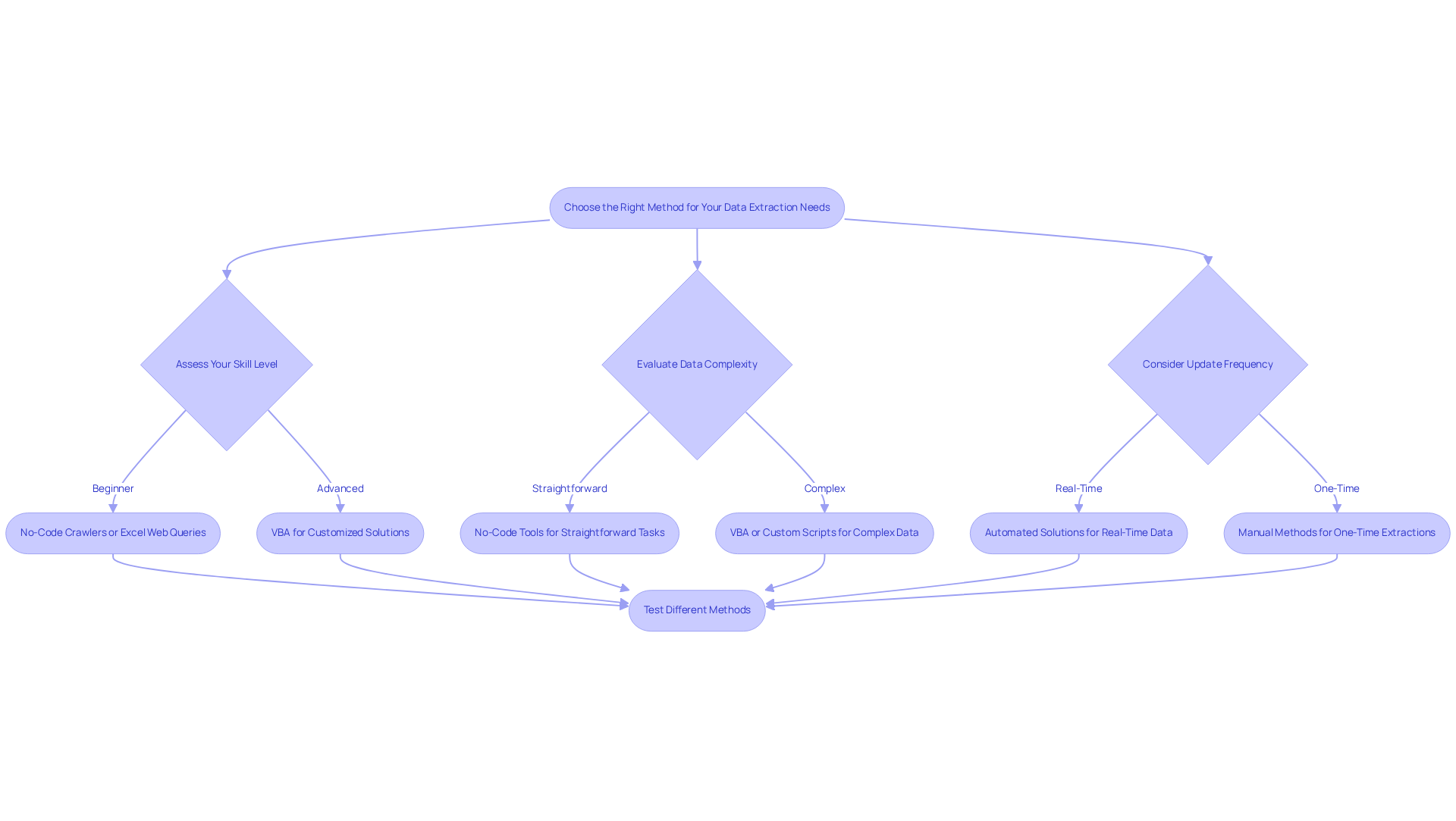

Choose the Right Method for Your Data Extraction Needs

Selecting the appropriate technique for extracting website data to Excel depends on several key factors, including technical skill level, data complexity, and update frequency. Here's a guide to help you make an informed decision:

- Assess Your Skill Level: Beginners should consider no-code crawlers or Excel Web Queries, which are user-friendly and require minimal technical knowledge. Advanced users may find value in utilising VBA for more customised solutions.

- Evaluate Data Complexity: For straightforward extraction tasks, no-code tools are often sufficient. When handling intricate data structures or extensive datasets, VBA or custom scripts give the precision and control needed.

- Consider Update Frequency: If the data must stay current, automated solutions such as VBA macros or scheduled crawlers can refresh it on a regular basis. For one-time extractions, manual methods may suffice.

- Test Different Methods: Experimenting with various tools and techniques is vital. Each method has its unique strengths and weaknesses, and practical testing can yield insights tailored to your specific needs.
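The first two criteria above can be written down as a small filter, which makes the trade-offs explicit. The Python sketch below encodes only what this guide states about skill level and data complexity; the `Method` class, its field names, and the yes/no simplification of each criterion are assumptions made for illustration. Update frequency is left out because all three methods can be scheduled.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Method:
    name: str
    beginner_friendly: bool     # usable with minimal technical knowledge
    handles_complex_data: bool  # suited to intricate structures or large datasets


# Ratings follow the guidance in this section, reduced to yes/no values.
METHODS = (
    Method("No-code crawler", beginner_friendly=True, handles_complex_data=False),
    Method("Excel Web Query", beginner_friendly=True, handles_complex_data=False),
    Method("VBA macro", beginner_friendly=False, handles_complex_data=True),
)


def shortlist(is_beginner, complex_data):
    """Return the names of the methods that fit the given situation."""
    return [
        method.name
        for method in METHODS
        if (method.beginner_friendly or not is_beginner)
        and (method.handles_complex_data or not complex_data)
    ]


print(shortlist(is_beginner=True, complex_data=False))
print(shortlist(is_beginner=False, complex_data=True))
print(shortlist(is_beginner=True, complex_data=True))
```

An empty shortlist, as in the last call, is itself informative: a beginner facing complex data has no self-serve fit among these three methods and would need either to build VBA skills or to rely on a managed service.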

Data mining, in this context, is the process of extracting data from websites and turning it into usable insights. Appstractor provides data mining services that automate the collection and delivery of structured data from the web. Through rotating proxy servers, full service, or hybrid options, Appstractor delivers clean, de-duplicated data, which supports the integrity and flexibility of your data management processes.

With the information sector experiencing a 36% growth rate, efficient data retrieval tools matter more than ever. As Hilary Mason observes, curiosity and learning are fundamental to analytics, so it is worth selecting tools that encourage these attributes. When choosing a tool, also consider precision, scalability, and integration features to ensure it satisfies your organisational requirements. Automating the extraction of website data to Excel can lead to significant cost savings and efficiency improvements, which is why an informed choice matters.

Conclusion

In conclusion, the ability to extract data from websites to Excel is crucial for businesses seeking to gain valuable insights and make informed decisions. By mastering data extraction techniques, organisations can harness the power of Excel to improve their analytical capabilities, streamline reporting, and enhance collaboration across teams.

This guide has explored various methods, including:

- No-code crawlers

- Excel Web Queries

- VBA for advanced data extraction

Each approach presents unique advantages tailored to different skill levels and data complexities, allowing users to select the most effective strategy for their specific needs. The integration of tools like Appstractor further strengthens these methods, offering robust solutions for efficient information gathering and management.

The importance of proficient data extraction cannot be overstated. In a data-driven landscape, adopting the right techniques and tools can lead to significant improvements in operational efficiency and strategic insight. As organisations continue to evolve, embracing these data extraction methods will empower teams and position businesses for success in a competitive environment.

Frequently Asked Questions

Why is extracting data from websites to Excel important for businesses?

Extracting data to Excel provides a strategic advantage by enhancing data analysis, streamlining decision-making, facilitating data sharing, and automating reporting processes.

How does Excel enhance data analysis?

Excel's robust tools allow users to manipulate data, conduct complex analyses, create visualisations, and generate insightful reports, leading to a deeper understanding and actionable insights.

In what way does organised information in Excel aid decision-making?

Organised information in Excel enables decision-makers to swiftly derive insights that inform strategic choices, resulting in more agile and informed business operations.

What are the benefits of using spreadsheets for data sharing?

Spreadsheet files are universally recognised and easily shared among team members, which fosters collaboration and transparency across departments.

How does automating reporting in Excel improve operational efficiency?

Regularly updated information in Excel streamlines reporting processes, saving time and minimising errors, which is crucial for maintaining operational efficiency.

What are no-code crawlers and how do they assist in data extraction?

No-code crawlers are user-friendly tools that allow individuals to extract data from websites to Excel without needing coding knowledge, making data extraction accessible to everyone.

What are some examples of no-code crawlers?

Examples of no-code crawlers include Octoparse, ParseHub, and Web Scraper, which feature intuitive interfaces for easy data extraction.

How do you set up a project in a no-code crawler?

To set up a project, start a new project within the chosen crawler and input the URL of the website you wish to scrape.

What is the process for selecting information elements in a no-code crawler?

Users can select specific information elements using a point-and-click interface, with most crawlers providing a preview of the information to ensure accuracy.

What additional features can be configured in a no-code crawler?

Users can adjust settings such as pagination, formats, and scheduling to automate their data collection effectively.

What happens after running the crawler?

Once the crawler is launched and the retrieval process is completed, users can extract the collected data to Excel for further analysis.

How do firms like Appstractor enhance the no-code data extraction process?

Firms like Appstractor enhance the no-code approach by providing advanced information scraping solutions that include rotating proxies and full-service options, allowing non-technical users to perform intricate web scraping tasks efficiently.
