Introduction

Web crawling is a crucial component in the discovery and indexing of digital content, yet many marketers overlook its significance. By understanding how crawlers work, digital marketers can improve their visibility and refine their data extraction strategies. However, the complexity of managing crawlers and optimising site performance raises an important question: how can marketers ensure their content is not only visible but also accurately interpreted by search engines? This article explores best practices that help marketers tackle the challenges of web crawling and optimise their online presence.

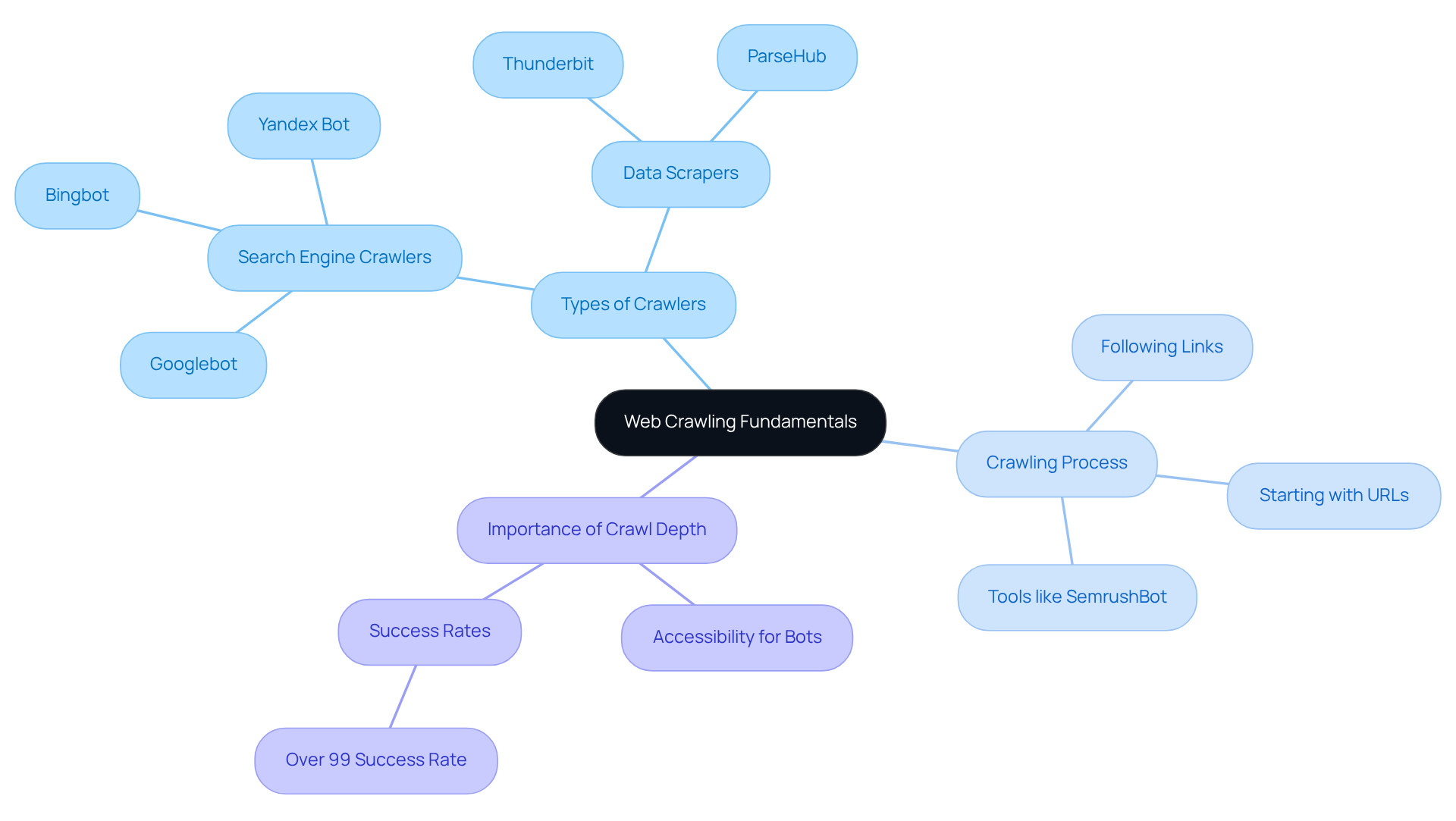

Understand Web Crawling Fundamentals

Web crawling is the automated process that search engines and other tools use to systematically browse the internet and index content. For digital marketers who want to improve their websites' visibility and extract data effectively, understanding this process is crucial. Key concepts include:

- Types of Crawlers: Different crawlers serve distinct functions. Search engine crawlers, such as Googlebot, index websites, while data scrapers retrieve specific information from sites. In 2023, approximately 65% of global enterprises used web crawling and data extraction tools, underscoring their significance in digital strategies.

- Crawling Process: Crawlers start with a list of seed URLs and follow the links on those pages to discover new content. This systematic approach is what keeps search engine indexes up to date. Crawlers such as SemrushBot and AhrefsBot, for instance, efficiently collect data on web links and SEO metrics in this way. Cloud infrastructure management, such as that provided by Appstractor, can streamline this process, offering flexible scaling and automated updates that boost efficiency.

- Importance of Crawl Depth: Crawl depth, roughly the number of links a crawler must follow from a starting page to reach a given page, significantly affects how much content is indexed. Marketers should ensure that essential pages are reachable within a few clicks so that crawlers find them. Research indicates that properly configured bots can achieve crawl success rates exceeding 99%, emphasising the importance of strategic site architecture.
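
The crawling process and crawl depth described above can be illustrated with a breadth-first traversal. This is a minimal sketch, not a description of how any particular search engine works: it assumes a hypothetical in-memory link graph (`LINK_GRAPH`) in place of real HTTP fetching, and a hypothetical `max_depth` cutoff.

```python
from collections import deque

# Hypothetical in-memory link graph standing in for real pages:
# each URL maps to the URLs linked from that page.
LINK_GRAPH = {
    "https://example.com/": ["https://example.com/blog", "https://example.com/products"],
    "https://example.com/blog": ["https://example.com/blog/post-1"],
    "https://example.com/products": ["https://example.com/products/widget"],
    "https://example.com/blog/post-1": ["https://example.com/blog/archive"],
    "https://example.com/products/widget": [],
    "https://example.com/blog/archive": ["https://example.com/blog/old-post"],
    "https://example.com/blog/old-post": [],
}


def crawl(seeds, get_links, max_depth):
    """Breadth-first crawl from seed URLs.

    Returns a dict mapping each discovered URL to its click depth
    (links followed from the nearest seed). Links on pages at
    max_depth are not followed, so deeper pages are never discovered.
    """
    depth = {url: 0 for url in seeds}
    queue = deque(seeds)
    while queue:
        url = queue.popleft()
        if depth[url] >= max_depth:
            continue  # do not follow links beyond the depth limit
        for link in get_links(url):
            if link not in depth:  # skip URLs already discovered
                depth[link] = depth[url] + 1
                queue.append(link)
    return depth


found = crawl(["https://example.com/"], lambda u: LINK_GRAPH.get(u, []), max_depth=3)
for url, d in sorted(found.items(), key=lambda item: item[1]):
    print(d, url)
```

With `max_depth=3`, `/blog/old-post` sits four clicks from the homepage and is never discovered, which is why keeping essential pages shallow matters.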

By mastering these fundamentals, marketers can develop more effective content strategies and improve their performance in search results. As SEO specialist Shuai Guan emphasises, understanding web spiders is vital for improving digital presence, as the line between web crawling and data engineering continues to blur.

Optimize Your Website for Crawlers

To ensure that web crawlers can effectively index your site, consider the following best practices:

- Use Clean URLs: Keep URLs descriptive and free of unnecessary parameters. This makes it easier for bots to understand what each page contains.

- Implement a Sitemap: A well-organised XML sitemap helps bots find every section of your site. Submitting your sitemap to search engines, for example through Google Search Console, facilitates indexing.

- Optimise Page Load Speed: Fast-loading pages improve user experience and make crawling more efficient, allowing crawlers to index more content in less time. Use tools such as Google PageSpeed Insights to identify areas for improvement.

- Mobile Optimization: With the rise of mobile browsing, ensure your site is mobile-friendly. Google uses mobile-first indexing, meaning that it treats the mobile version of your site as the primary version for indexing and ranking.

- Structured Data: Implement schema markup to help crawlers understand the context of your content. This can improve your visibility in search results through rich snippets.
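
The clean-URL and sitemap points can be combined in a short sketch: normalise each URL by dropping parameters that do not change the page, then list the unique URLs in an XML sitemap using the sitemaps.org `urlset`/`url`/`loc` structure. The `TRACKING_PARAMS` set and the example URLs are hypothetical; which parameters are safe to drop depends on your site, and real sitemaps often add optional fields such as `<lastmod>`.

```python
import xml.etree.ElementTree as ET
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical query parameters treated as noise (tracking and session IDs).
TRACKING_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "sessionid"}


def clean_url(url):
    """Drop tracking parameters and the #fragment so each page has one URL."""
    parts = urlsplit(url)
    query = [(k, v) for k, v in parse_qsl(parts.query, keep_blank_values=True)
             if k not in TRACKING_PARAMS]
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(query), ""))


def build_sitemap(urls):
    """Return a sitemaps.org-style XML sitemap listing each cleaned URL once."""
    urlset = ET.Element("urlset", xmlns="http://www.sitemaps.org/schemas/sitemap/0.9")
    seen = set()
    for url in map(clean_url, urls):
        if url in seen:
            continue  # duplicate after cleaning
        seen.add(url)
        ET.SubElement(ET.SubElement(urlset, "url"), "loc").text = url
    return ET.tostring(urlset, encoding="unicode")


sitemap_xml = build_sitemap([
    "https://example.com/shoes?utm_source=newsletter",
    "https://example.com/shoes",
    "https://example.com/shoes?colour=red#reviews",
])
print(sitemap_xml)
```

The first two URLs collapse into one entry, so the sitemap lists two pages rather than three.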

By adhering to these practices, marketers can significantly improve their platform's performance in search engine results.
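
For the structured-data item, schema markup is commonly embedded in a page as JSON-LD. The sketch below assumes an article page and builds a schema.org `Article` object; the headline, author name and date are hypothetical placeholders, and schema.org defines many more properties than shown here.

```python
import json


def article_jsonld(headline, author, date_published):
    """Build a schema.org Article object wrapped in a JSON-LD <script> tag."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": date_published,  # ISO 8601 date
    }
    # Escape "</" as "<\/" (still valid JSON) so text such as "</script>"
    # inside a value cannot close the surrounding tag early.
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return '<script type="application/ld+json">\n' + payload + "\n</script>"


snippet = article_jsonld("Web Crawling Best Practices", "Jane Doe", "2025-01-15")
print(snippet)
```

The resulting tag goes in the page's HTML; Google's Rich Results Test can then check whether the page is eligible for rich results.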

Control Crawler Access with Technical Tools

To effectively manage crawler access, consider implementing the following tools and techniques:

- Robots.txt File: This file tells crawlers which parts of your site they may crawl and which to skip. Proper configuration keeps crawlers out of areas such as admin or internal search pages, although robots.txt is publicly readable and is not a security mechanism. Misconfigurations can accidentally block essential content, so the file needs careful management. Note also that robots.txt controls crawling, not indexing: as John Mueller highlights, disallowing a URL in robots.txt does not stop it from being indexed if other accessible pages link to it. To keep a page out of search results, allow crawlers to access it and add a noindex meta tag, which crawlers can only see if they are permitted to fetch the page.

- Meta Tags: Meta tags, particularly the 'noindex' directive, control which content appears in search results. This is especially useful for duplicate or low-value pages that may weaken your overall SEO performance. Rachel Costello observes that although meta tags are not an infallible means of indexing control, they play an important role in directing bot behaviour. Statistics indicate that effective use of meta tags can significantly improve a page's visibility and indexing efficiency.

- Rate Limiting: Rate limiting caps the number of requests a crawler or scraper can make to your server in a given period. This practice helps prevent server overload, keeping your site responsive and accessible to users.

- User-Agent Identification: Recognising bots by their user-agent strings lets you tailor responses to particular crawlers. This gives you more control over how bots interact with your site, so that vital content is prioritised and less important pages are handled appropriately.
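
Rate limiting and user-agent identification can work together. The sketch below keeps one token bucket per client, keyed by a crude user-agent classification plus the client IP address; the limits in `LIMITS`, the `bot_key` rule and the `handle` function are all hypothetical. User-agent strings are easy to spoof, so a production setup should verify claimed search-engine bots (Google documents a reverse DNS check for Googlebot) before granting them higher limits.

```python
import time

# Hypothetical limits per bot category: (tokens refilled per second, burst size).
LIMITS = {"googlebot": (10.0, 20), "default": (1.0, 5)}


class TokenBucket:
    """Allows bursts of up to `capacity` requests, refilled at `rate` per second."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.tokens = float(capacity)
        self.last = clock()

    def allow(self):
        now = self.clock()
        # Refill tokens for the time elapsed since the previous request.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


def bot_key(user_agent):
    """Crude, spoofable classification by User-Agent substring (hypothetical rule)."""
    return "googlebot" if "googlebot" in user_agent.lower() else "default"


buckets = {}  # one bucket per (bot category, client IP)


def handle(user_agent, client_ip, clock=time.monotonic):
    """Return the HTTP status to send: 200 if allowed, 429 Too Many Requests if not."""
    category = bot_key(user_agent)
    key = (category, client_ip)
    if key not in buckets:
        rate, capacity = LIMITS[category]
        buckets[key] = TokenBucket(rate, capacity, clock)
    return 200 if buckets[key].allow() else 429


print(handle("ExampleScraper/1.0", "198.51.100.7"))  # 200: first request, full bucket
```

A 429 response asks well-behaved crawlers to slow down; whether a given bot honours it is up to that bot.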

By using these tools, digital marketers can improve crawler access and site performance, strengthening overall SEO. Being aware of common pitfalls, such as the misconception that disallowing a URL in robots.txt prevents it from being indexed, also helps marketers avoid misapplying these tools.
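
The robots.txt pitfall can be illustrated with Python's standard-library `urllib.robotparser` and `html.parser`. This sketch assumes a hypothetical robots.txt and page HTML, and Python's parser uses simpler matching rules than those Google documents, so treat it as an approximation of how search engines read the file. The point it shows: a disallowed page is never fetched, so its noindex tag is never seen.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for example.com.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /search
"""


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "robots":
            content = attrs.get("content") or ""
            self.directives.update(d.strip().lower() for d in content.split(","))


def audit(url, html, user_agent="Googlebot"):
    """Report whether a crawler may fetch the URL and whether it may index it."""
    robots = RobotFileParser()
    robots.parse(ROBOTS_TXT.splitlines())
    if not robots.can_fetch(user_agent, url):
        # The page is never fetched, so any noindex tag on it goes unseen; the
        # URL can still be indexed if other pages link to it.
        return "blocked from crawling (noindex not visible)"
    meta = RobotsMetaParser()
    meta.feed(html)
    return "crawlable, noindex" if "noindex" in meta.directives else "crawlable, indexable"


page = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
print(audit("https://example.com/admin/settings", page))
print(audit("https://example.com/thank-you", page))
```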

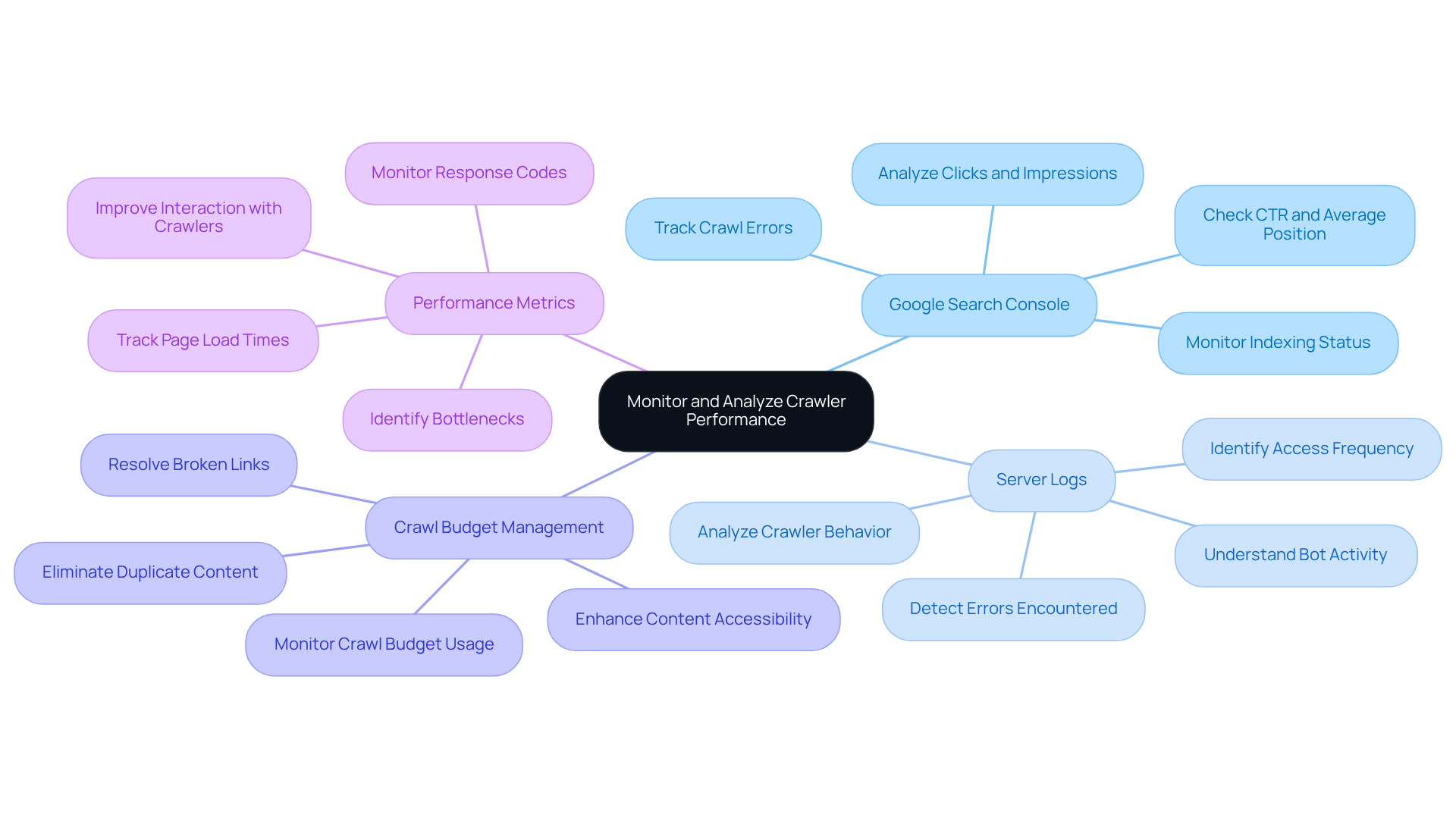

Monitor and Analyze Crawler Performance

To effectively monitor and analyse crawler performance, consider implementing the following strategies:

- Google Search Console: Leverage this essential tool to track how Google crawls and indexes your site. It offers valuable insights into crawl errors, indexing status, and overall search performance, enabling you to identify areas for improvement.

- Server Logs: Analyse server logs to gain a deeper understanding of crawler behaviour. Logs show which sections crawlers access, how often they visit, and any errors they encounter, offering a clear picture of how well your site is being crawled.

- Crawl Budget Management: Keep a close eye on your crawl budget, the number of URLs a search engine is able and willing to crawl on your site, to ensure that your most essential content gets crawled and indexed. Identify and fix problems that waste this budget, such as broken links or duplicate content.

- Performance Metrics: Regularly track key performance metrics, including page load times and response codes. This data helps you identify bottlenecks and improve how your site serves crawlers, ultimately boosting your visibility in search results.
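
The server-log and crawl-budget checks above can be sketched with the standard library. This minimal example assumes logs in the Apache/Nginx "combined" format and a hypothetical list of crawler names (`BOTS`); it counts requests per crawler, status codes per crawler, and the paths where crawlers received 4xx or 5xx errors, which often point to broken links wasting crawl budget. Matching on the user-agent string trusts whatever the client claims, so treat the counts as approximate.

```python
import re
from collections import Counter

# Apache/Nginx "combined" log format:
# IP identity user [time] "METHOD path protocol" status size "referer" "user agent"
LOG_LINE = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] "(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)

# Hypothetical user-agent substrings used to label crawler traffic.
BOTS = ("Googlebot", "bingbot", "AhrefsBot", "SemrushBot")


def crawler_report(lines):
    """Summarise crawler activity from raw access-log lines."""
    hits = Counter()      # requests per crawler
    statuses = Counter()  # (crawler, status code) pairs
    errors = Counter()    # paths where crawlers received 4xx/5xx responses
    for line in lines:
        match = LOG_LINE.match(line)
        if not match:
            continue  # skip lines in other formats
        bot = next((b for b in BOTS if b in match["agent"]), None)
        if bot is None:
            continue  # ignore human visitors and unlisted bots
        status = int(match["status"])
        hits[bot] += 1
        statuses[(bot, status)] += 1
        if status >= 400:
            errors[match["path"]] += 1
    return hits, statuses, errors


GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
sample = [
    f'66.249.66.1 - - [10/Jan/2025:10:00:00 +0000] "GET /blog/post-1 HTTP/1.1" 200 5123 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [10/Jan/2025:10:00:05 +0000] "GET /old-page HTTP/1.1" 404 312 "-" "{GOOGLEBOT_UA}"',
    '203.0.113.9 - - [10/Jan/2025:10:01:00 +0000] "GET /blog/post-1 HTTP/1.1" 200 5123 "-" "Mozilla/5.0 (Windows NT 10.0)"',
]
hits, statuses, errors = crawler_report(sample)
print(hits)    # Counter({'Googlebot': 2})
print(errors)  # Counter({'/old-page': 1})
```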

By adopting these monitoring practices, marketers can gain valuable insights into their site's performance and make informed decisions that enhance search visibility.

Conclusion

Mastering web crawling is crucial for digital marketers aiming to boost their online presence and enhance search engine visibility. By grasping the intricacies of crawler operations, marketers can adopt strategies that optimise their websites for improved indexing and performance.

Key insights emphasise the significance of:

- Clean URLs

- Effective sitemaps

- Mobile optimization

These foundational practices ensure that crawlers can efficiently access and index content. Furthermore, controlling crawler access with tools like robots.txt and meta tags, and monitoring performance through Google Search Console and server logs, empowers marketers to refine their strategies and address potential issues proactively.

Ultimately, web crawling is central to digital marketing. As the online content landscape evolves, staying informed about best practices and using the right tools will not only enhance visibility but also foster meaningful engagement. Embracing these strategies is essential for any marketer striving to succeed in a competitive digital environment.

Frequently Asked Questions

What is web crawling?

Web crawling is the automated process used by search engines and various tools to systematically browse the internet and index content.

Why is understanding web crawling important for digital marketers?

Understanding web crawling is crucial for digital marketers as it helps them optimise their websites for better visibility and effective data extraction.

What are the different types of crawlers?

There are various types of crawlers, including search engine crawlers, like Googlebot, which index websites, and data scrapers that focus on retrieving specific information from sites.

How prevalent is the use of web crawling tools among enterprises?

In 2023, approximately 65% of global enterprises utilised tools for crawling the web and extracting data, highlighting their importance in digital strategies.

How does the crawling process work?

Crawlers begin with a list of URLs and follow links on those pages to discover new content, which is essential for maintaining up-to-date search engine indexes.

What role do tools like SemrushBot and AhrefsBot play in web crawling?

SemrushBot and AhrefsBot are crawlers run by the SEO tools Semrush and Ahrefs. They efficiently collect information about web links and SEO metrics, which those tools use to report on site performance.

What is crawl depth, and why is it important?

Crawl depth refers to how deeply crawlers can access content on a website. It significantly affects how much content is indexed, making it essential for marketers to ensure that important pages are easily accessible to crawling bots.

What success rates can properly configured bots achieve?

Research indicates that properly configured bots can achieve success rates exceeding 99%, emphasising the importance of strategic web architecture.

How can understanding web crawling improve digital presence?

By mastering web crawling fundamentals, marketers can develop more effective content strategies and enhance their web performance in search results, as highlighted by SEO specialist Shuai Guan.

List of Sources

- Understand Web Crawling Fundamentals

- The State of Web Crawling in 2025: Key Statistics and Industry Benchmarks (https://thunderbit.com/blog/web-crawling-stats-and-industry-benchmarks)

- 15 Important & Inspiring SEO Quotes - SEOptimer (https://seoptimer.com/blog/seo-quotes)

- 15 Most Common Web Crawlers in [year]: What You Need to Know (https://elementor.com/blog/most-common-web-crawlers)

- 45 Inspiring SEO Quotes For Successful SEO Campaign (https://correctdigital.com/inspiring-seo-quotes)

- Optimize Your Website for Crawlers

- Top 10 Technical SEO Tips Every News Website Must Follow (https://tabscap.com/blog/top-10-technical-seo-tips-every-news-website-must-follow)

- 15 Important & Inspiring SEO Quotes - SEOptimer (https://seoptimer.com/blog/seo-quotes)

- 57 SEO Statistics That Matter In 2025 (And What To Do About Them) (https://digitalsilk.com/digital-trends/top-seo-statistics)

- Top 20 SEO Quotes By Experts To Boost Your Ranking in 2025 (https://graffiti9.com/blog/seo-quotes-to-boost-your-ranking)

- 45 Inspiring SEO Quotes For Successful SEO Campaign (https://correctdigital.com/inspiring-seo-quotes)

- Control Crawler Access with Technical Tools

- A Guide to Robots.txt (https://lumar.io/learn/seo/crawlability/robots-txt)

- Robots.txt file: what it is, what it is for and how to set it up without errors (https://seozoom.com/file-robots-txt-seo-guide)

- From Googlebot to GPTBot: Who’s crawling your site in 2025 (https://blog.cloudflare.com/from-googlebot-to-gptbot-whos-crawling-your-site-in-2025)

- 20 Timeless Quotes From SEO Experts to Apply to Your SEO Campaign - Heroes of Digital (https://heroesofdigital.com/seo/seo-quotes-to-guide-your-seo-campaign)

- What are the most important meta tags? An overview (https://ionos.co.uk/digitalguide/websites/web-development/the-most-important-meta-tags-and-their-functions)

- Monitor and Analyze Crawler Performance

- Site Monitoring Using Google Search Console (https://itineris.co.uk/blog/site-monitoring-using-google-search-console)

- Crawl Stats report - Search Console Help (https://support.google.com/webmasters/answer/9679690?hl=en)

- How to Monitor Crawler Logs and Set Up Alerts for Bot Activity? (https://hostarmada.com/blog/crawler-logs-analysis)

- Introducing Google News performance report | Google Search Central Blog | Google for Developers (https://developers.google.com/search/blog/2021/01/google-news-performance-report)