Introduction

Web scraping has emerged as a powerful tool for businesses looking to leverage the vast amounts of data generated by food delivery services. The online food delivery market is projected to soar to $1.40 trillion, making it crucial for businesses to understand how to gather and analyze this data effectively. This presents a significant opportunity for strategic advantage.

However, navigating the complexities of data extraction, compliance, and ethical considerations raises critical questions. How can businesses ensure they are scraping data efficiently while adhering to legal guidelines?

This article delves into best practices for web scraping food delivery data, equipping readers with the knowledge to optimize their data strategies and drive informed decision-making.

Identify Target Platforms and Data Points for Effective Scraping

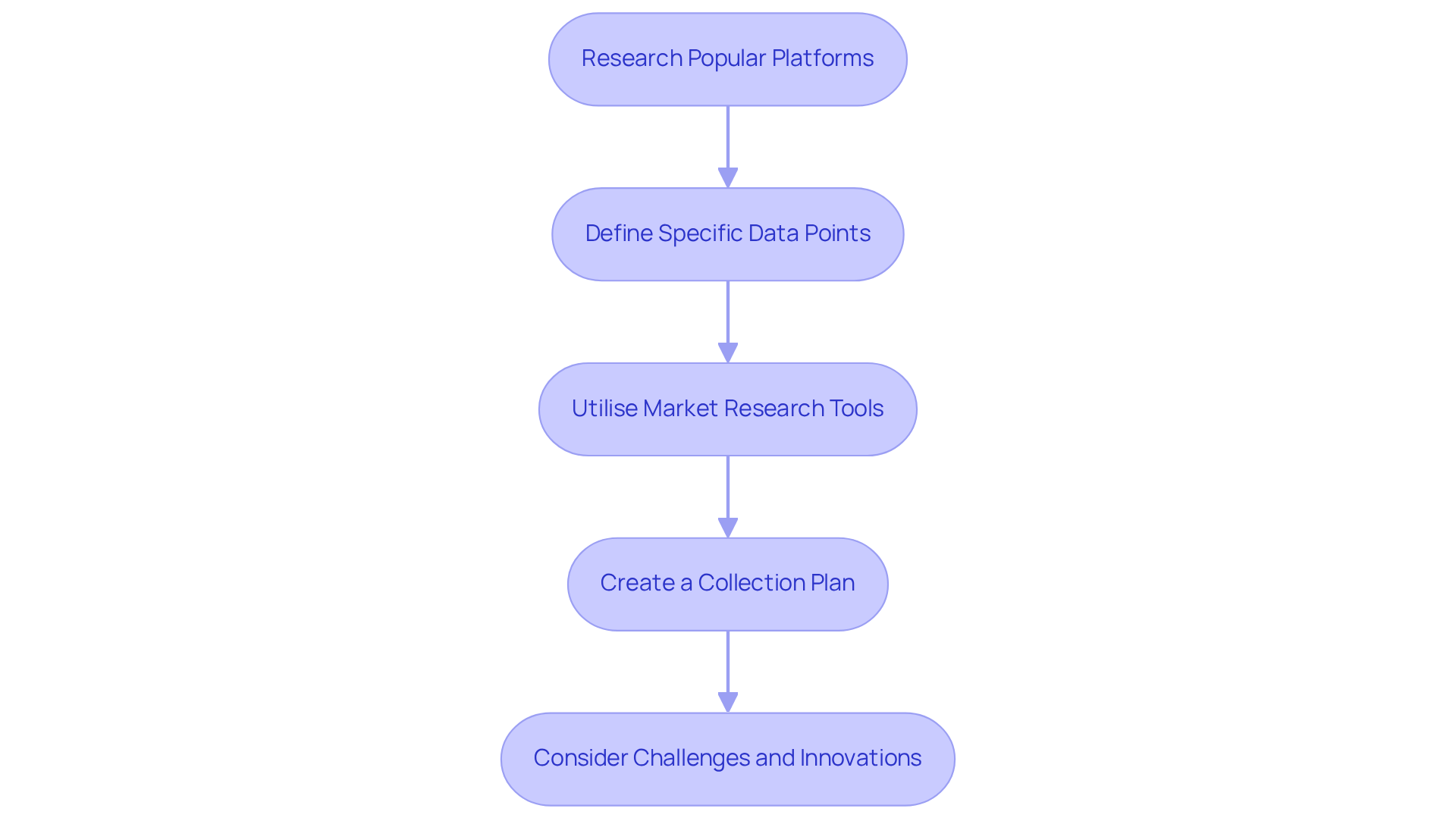

To gather food delivery data efficiently, it is crucial to recognise the main services leading the market, such as Uber Eats, DoorDash, Grubhub, and Zomato. Each platform offers distinct data points, including menu items, pricing, estimated delivery times, and customer reviews. Follow these steps for a successful scraping strategy:

- Research Popular Platforms: Analyse which food delivery services align with your business objectives. For instance, if your emphasis is on pricing strategies, platforms with varied restaurant selections will provide more valuable insights.

- Define Specific Data Points: Clearly outline the specific information you need, such as:

  - Menu items and descriptions

  - Pricing structures

  - Delivery fees

  - Customer ratings and reviews

  - Promotional offers

- Utilise Market Research Tools: Employ tools that help identify trends and popular items across these platforms, ensuring your scraping efforts align with current market demands. With the online food delivery market projected to reach $1.40 trillion, timely information matters. Industry specialists also report that "89% of users select services that provide advanced tracking capabilities," which underscores the importance of accurate data.

- Create a Collection Plan: Develop a structured plan detailing which platforms to scrape and the specific data points to focus on. This methodical approach makes your data gathering more effective and yields insights that can influence your marketing strategies. It is also worth considering the challenges restaurants face, such as high commission fees, which 72% of restaurants cite as a significant challenge. Insights on how AI and automation are transforming food delivery services can further enrich your data collection strategy.
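A collection plan like the one described above can be written down as a small data structure before any scraping starts. The following is a minimal sketch only: the class names `MenuItemRecord` and `CollectionPlan`, and every field name, are illustrative assumptions rather than a schema used by any platform.

```python
from dataclasses import dataclass
from typing import Optional


# Hypothetical record layout covering the data points listed above.
@dataclass
class MenuItemRecord:
    platform: str
    restaurant: str
    item_name: str
    description: str
    price: float
    delivery_fee: Optional[float] = None
    rating: Optional[float] = None
    promotion: Optional[str] = None


# Hypothetical plan: which platforms to cover and which fields must be filled.
@dataclass
class CollectionPlan:
    platforms: list[str]
    data_points: list[str]

    def missing_fields(self, record: MenuItemRecord) -> list[str]:
        """Return the planned data points that are empty on a record."""
        return [
            name
            for name in self.data_points
            if getattr(record, name) in (None, "")
        ]


plan = CollectionPlan(
    platforms=["Uber Eats", "DoorDash"],
    data_points=["price", "delivery_fee", "rating"],
)
record = MenuItemRecord(
    platform="Uber Eats",
    restaurant="Example Diner",  # made-up sample data
    item_name="Burger",
    description="Beef burger",
    price=9.5,
    delivery_fee=1.99,
)
print(plan.missing_fields(record))
```

Checking each scraped record against the plan makes gaps visible early, for example a platform page that never exposes ratings.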

Implement Technical Strategies for Efficient Data Extraction

To maximise the efficiency of your web scraping efforts with Appstractor, consider the following technical strategies:

- Use Proxies: Implement Appstractor's rotating proxies to avoid IP bans and ensure continuous access to target websites. With 10.2% of global web traffic attributed to scrapers, high-traffic platforms often restrict access based on scraping activity. Appstractor's rotating proxies help mitigate this risk by providing a different IP address for each request, reducing the likelihood of detection. Rotating Proxy Servers can be activated within 24 hours, allowing for quick deployment.

- User-Agent Rotation: Change the user-agent string in your requests to mimic various browsers and devices. This technique is crucial for bypassing basic anti-scraping measures that websites may employ. Success stories suggest that scrapers using user-agent rotation have significantly improved their data extraction rates by presenting themselves as legitimate users.

- Headless Browsers: Utilise headless browsers driven by tools like Puppeteer or Selenium to scrape dynamic content reliant on JavaScript. These tools render pages as a regular browser would, allowing you to extract data that may not be immediately visible in the HTML source. This is particularly effective for food delivery platforms, which often load content dynamically.

- Error Handling: Implement robust error management to address unexpected issues during data extraction, such as timeouts or alterations in website structure. This adaptability ensures that your scraping process can continue without significant interruptions, preserving information integrity and availability.

- Data Storage Solutions: Select storage solutions capable of managing the volume and structure of the scraped content, such as databases or cloud storage. Appstractor provides flexible delivery options, including JSON, CSV, and direct database inserts, facilitating easy access and analysis. Full Service projects kick off in 5-7 business days, while Hybrid audits take 1-4 days, providing tailored solutions to meet your needs.
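Proxy rotation, user-agent rotation, and error handling from the list above can be combined in a few lines. The sketch below is illustrative only: the proxy URLs and user-agent strings are placeholders, `request_settings` and `fetch_with_retry` are hypothetical helper names, and the `fetch` callable stands in for whatever HTTP client you use. It does not reflect Appstractor's actual API.

```python
import itertools
import random
import time

# Placeholder values: substitute the proxy endpoints and user-agent
# strings you actually have access to.
PROXIES = ["http://proxy-a.example:8080", "http://proxy-b.example:8080"]
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) ExampleBrowser/1.0",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 13_0) ExampleBrowser/1.0",
]


def request_settings(proxies, user_agents):
    """Yield per-request settings: proxies in round-robin order, random user agent."""
    for proxy in itertools.cycle(proxies):
        yield {
            "proxy": proxy,
            "headers": {"User-Agent": random.choice(user_agents)},
        }


def fetch_with_retry(fetch, url, settings, max_attempts=3, base_delay=1.0,
                     sleep=time.sleep):
    """Call fetch(url, proxy, headers), retrying with exponential backoff.

    Every attempt takes the next proxy/user-agent pair from `settings`,
    so a retry never reuses the settings that just failed.
    """
    last_error = None
    for attempt in range(max_attempts):
        cfg = next(settings)
        try:
            return fetch(url, cfg["proxy"], cfg["headers"])
        except (TimeoutError, ConnectionError) as exc:
            last_error = exc
            if attempt < max_attempts - 1:
                # Wait 1x, 2x, 4x ... the base delay before the next attempt.
                sleep(base_delay * 2 ** attempt)
    raise RuntimeError(
        f"giving up on {url} after {max_attempts} attempts"
    ) from last_error
```

Passing `fetch` and `sleep` in as parameters keeps the retry logic independent of any particular HTTP library and makes it easy to exercise with a stub before pointing it at a live site.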

Analyze Scraped Data for Insights and Strategic Decisions

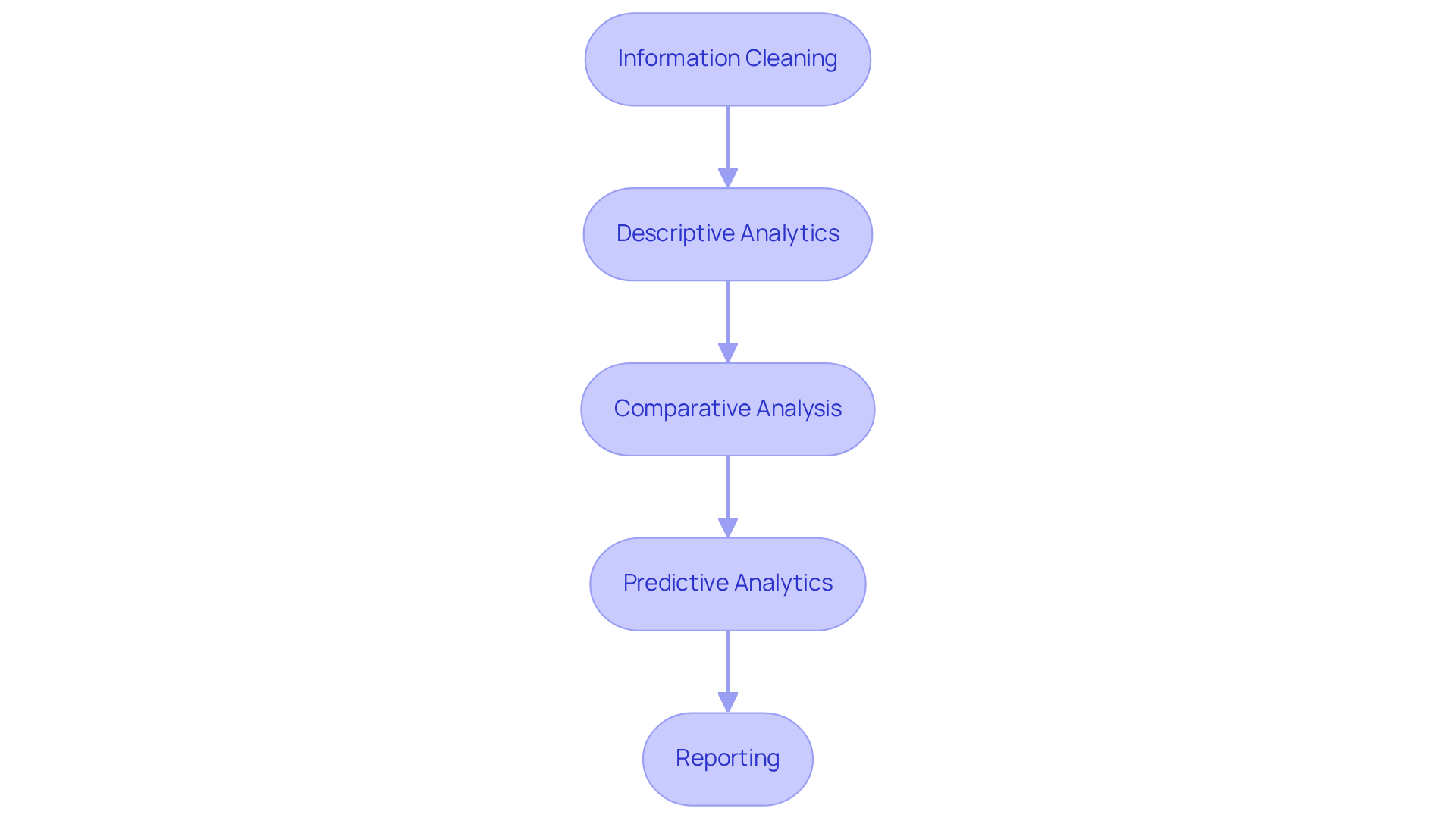

Once you have gathered the data, effective analysis is crucial for deriving actionable insights. Here are key practices to follow:

- Data Cleaning: Before analysis, clean the data by eliminating duplicates, rectifying errors, and standardising formats. This ensures insights are founded on accurate data, backed by AWS's strong management capabilities.

- Descriptive Analytics: Utilise descriptive analytics to summarise the information, revealing trends in pricing, customer preferences, and popular menu items. Tools like Excel or sophisticated visualisation software improve clarity, with the added advantage of cloud accessibility for your team.

- Comparative Analysis: Conduct comparative analysis to benchmark your findings against competitors. This includes analysing pricing strategies, delivery times, and customer feedback, highlighting areas for improvement while leveraging AWS's cost management features to optimise resources.

- Predictive Analytics: Utilise predictive analytics to anticipate future trends based on historical information. This approach aids in making proactive decisions regarding menu adjustments, pricing strategies, and targeted marketing efforts, supported by the flexible scaling of AWS cloud infrastructure.

- Reporting: Develop comprehensive reports that encapsulate your findings and insights. Tailor these reports to meet the needs of various stakeholders within your organisation, ensuring that insights are actionable and relevant, facilitated by the automated updates and security features of your cloud management system.
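The cleaning, descriptive, and comparative steps above can be illustrated with the Python standard library alone. This is a minimal sketch under stated assumptions: the row layout (`platform`, `item`, `price`) and the function names are hypothetical, the sample rows are made up, and `parse_price` deliberately ignores thousands separators.

```python
import re
from statistics import mean, median


def parse_price(raw):
    """Extract a number from strings such as '$12.50' or '12,50 €'.

    Simplification: thousands separators (e.g. '1,299.00') are not handled.
    """
    match = re.search(r"\d+(?:[.,]\d+)?", raw)
    if match is None:
        raise ValueError(f"no price found in {raw!r}")
    return float(match.group().replace(",", "."))


def clean(rows):
    """Standardise names and prices, then drop duplicate (platform, item) pairs."""
    seen, cleaned = set(), []
    for row in rows:
        key = (row["platform"].strip().lower(), row["item"].strip().lower())
        if key in seen:
            continue  # keep only the first occurrence
        seen.add(key)
        cleaned.append(
            {"platform": key[0], "item": key[1], "price": parse_price(row["price"])}
        )
    return cleaned


def price_summary(rows):
    """Per-platform count, mean, and median price for side-by-side comparison."""
    by_platform = {}
    for row in rows:
        by_platform.setdefault(row["platform"], []).append(row["price"])
    return {
        platform: {
            "count": len(prices),
            "mean": round(mean(prices), 2),
            "median": median(prices),
        }
        for platform, prices in by_platform.items()
    }


# Made-up sample rows, including one duplicate with inconsistent casing.
raw_rows = [
    {"platform": "Platform A", "item": "Margherita ", "price": "$10.00"},
    {"platform": "platform a", "item": "margherita", "price": "$10.00"},
    {"platform": "Platform A", "item": "Pepperoni", "price": "$12.50"},
    {"platform": "Platform B", "item": "Margherita", "price": "11,00 €"},
]
print(price_summary(clean(raw_rows)))
```

For larger datasets, a dataframe library would likely be more convenient; the point here is only the order of operations: standardise, deduplicate, then summarise per platform.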

By following these practices, businesses can use scraped food delivery data to gain a deeper understanding of market dynamics and customer behaviour, driving strategic decisions that enhance operational efficiency and growth.

Ensure Compliance with Legal Guidelines in Web Scraping

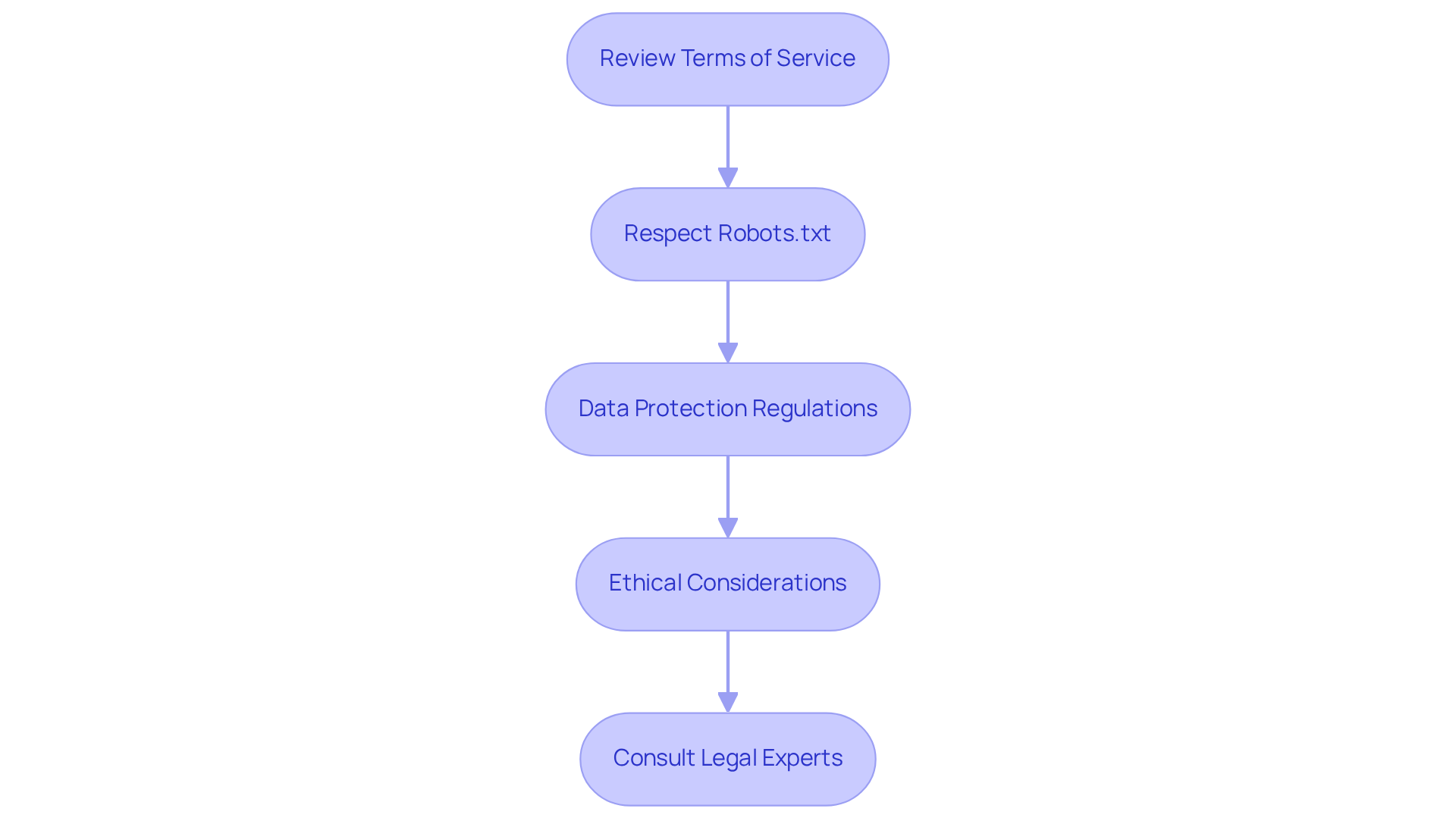

Adhering to legal guidelines is crucial when scraping food delivery data. To ensure your activities are lawful, consider the following best practices:

- Review Terms of Service: Always check the terms of service of the websites you intend to scrape. Numerous sites explicitly forbid data extraction, and the consequences can be serious. As Omar Laoudai notes, "Violating these terms can lead to account termination, IP blocking, cease-and-desist letters, or breach of contract litigation."

- Respect Robots.txt: Adhere to the directives specified in the robots.txt file of the target website. This file indicates which parts of the site can be accessed by web crawlers and which should be avoided, helping to mitigate unauthorized access claims.

- Data Protection Regulations: Be aware of data protection laws such as GDPR or CCPA that govern the collection and use of personal data. Ensure that your scraping practices do not infringe on these regulations to avoid penalties, which under GDPR can reach up to €20 million or 4% of global annual turnover, whichever is higher.

- Ethical Considerations: Consider the ethical implications of your data collection activities. Avoid collecting sensitive information and respect user privacy. This not only protects your business but also fosters trust with your audience, which is crucial in today’s data-driven landscape.

- Consult Legal Experts: If in doubt, consult with legal experts who specialize in data privacy and technology law to ensure that your scraping practices are compliant with current regulations. Joséphine Beaufour emphasizes that "the controller must assess on a case-by-case basis whether such safeguards are required, depending on the specific modalities of the processing."
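Of the practices above, the robots.txt check is the easiest to automate. The sketch below uses Python's standard `urllib.robotparser` module; the sample robots.txt content, the `example.com` URLs, and the bot name `example-research-bot` are made-up assumptions for illustration. Passing this check does not replace a review of the site's terms of service.

```python
from urllib.robotparser import RobotFileParser

# Made-up robots.txt content; a real one is served at <site>/robots.txt.
SAMPLE_ROBOTS = """\
User-agent: *
Disallow: /checkout/
Allow: /menus/
Crawl-delay: 5
"""

AGENT = "example-research-bot"  # hypothetical crawler name


def build_parser(robots_txt):
    """Parse robots.txt text that has already been downloaded."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(SAMPLE_ROBOTS)

for url in ("https://example.com/menus/pizza",
            "https://example.com/checkout/cart"):
    allowed = parser.can_fetch(AGENT, url)
    print(url, "allowed" if allowed else "disallowed")

# Seconds to wait between requests, or None if the file sets no delay.
print("crawl delay:", parser.crawl_delay(AGENT))
```

Against a live site, `RobotFileParser.set_url()` followed by `read()` fetches the file directly instead of parsing a string.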

Conclusion

Scraping food delivery data is crucial for businesses striving to remain competitive in the fast-paced food service industry. By accurately identifying target platforms and key data points, companies can gain valuable insights that shape their marketing strategies and operational decisions. This systematic approach to web scraping not only improves data collection efforts but also aligns them with market trends and consumer preferences.

This article has highlighted various best practices, emphasizing the importance of employing technical strategies such as proxies and user-agent rotation to facilitate efficient data extraction. Furthermore, the necessity of thorough data analysis and adherence to legal guidelines is paramount. By cleaning and analyzing the scraped data, businesses can extract actionable insights that inform strategic decisions, all while upholding ethical standards and legal requirements.

Ultimately, the capability to scrape and analyze food delivery data effectively enables businesses to navigate the competitive landscape with assurance. As the online food delivery market continues to expand, embracing these best practices will not only improve operational efficiency but also cultivate a data-driven culture that prioritises informed decision-making. Engaging with these strategies ensures that companies remain agile and responsive to the ever-evolving demands of the food delivery sector.

Frequently Asked Questions

Why is web scraping food delivery data important?

Scraping food delivery data helps you understand the main services leading the market, such as Uber Eats, DoorDash, Grubhub, and Zomato, and yields valuable insights that can influence marketing strategies.

What types of data points should I focus on when scraping food delivery platforms?

You should focus on specific data points such as menu items and descriptions, pricing structures, delivery fees, customer ratings and reviews, and promotional offers.

How can I identify which food delivery platforms to analyze?

Research popular platforms and analyze which food delivery services align with your business objectives, particularly if you are focusing on aspects like pricing strategies or restaurant selections.

What tools can help in market research for food delivery data?

Utilise market research tools that help identify trends and popular items across food delivery platforms, ensuring your scraping efforts align with current market demands.

What is the projected size of the online food delivery market?

The online food delivery market is projected to reach $1.40 trillion, highlighting the importance of timely information in this sector.

What is the significance of advanced tracking capabilities in food delivery services?

Advanced tracking capabilities are significant because 89% of users select services that provide these features, underscoring the need for accuracy in data collection.

How should I create a collection plan for web scraping?

Develop a structured plan detailing which platforms to scrape and the specific data points to focus on to enhance the effectiveness of your data-gathering activities.

What challenges do restaurants face in the food delivery market?

A significant challenge faced by restaurants is high commission fees, which 72% of restaurants cite as a major issue.

How can insights on AI and automation improve my data collection strategy?

Incorporating insights on how AI and automation are transforming food delivery services can enrich your data collection strategy, providing deeper insights into market trends and operational efficiencies.

List of Sources

- Identify Target Platforms and Data Points for Effective Scraping

- UK Food Delivery Market Statistics (2025) (https://zego.com/food-delivery-statistics)

- Deliverect UK | Top Food Delivery Statistics 2025: Market Trends & Insights (https://deliverect.com/en-gb/blog/trending/top-statistics-on-food-delivery-to-know-in-2025)

- Food Delivery App Revenue and Usage Statistics (2025) (https://businessofapps.com/data/food-delivery-app-market)

- Food Culture in Cities in 2025: What It Is Like and Why Food Delivery Apps Matter (https://europeanbusinessreview.com/food-culture-in-cities-in-2025-what-it-is-like-and-why-food-delivery-apps-matter)

- Top Food Delivery Trends And Statistics For 2025 (https://routific.com/blog/food-delivery-trends)

- Implement Technical Strategies for Efficient Data Extraction

- The Ultimate Guide to Scalable Web Scraping in 2025: Tools, Proxies, and Automation Workflows (https://dev.to/wisdomudo/the-ultimate-guide-to-scalable-web-scraping-in-2025-tools-proxies-and-automation-workflows-4j6l)

- Web Scraping Report 2025: Market Trends, Growth & Key Insights (https://promptcloud.com/blog/state-of-web-scraping-2025-report)

- Top Web Scraping Challenges in 2025 (https://scrapingbee.com/blog/web-scraping-challenges)

- DOs and DON’Ts of Web Scraping in 2025 (https://medium.com/@datajournal/dos-and-donts-of-web-scraping-in-2025-e4f9b2a49431)

- Web Scraping in 2025: What Worked, What Broke, What’s Next (https://oxylabs.io/blog/web-scraping-in-2025-what-worked-what-broke-whats-next)

- Analyze Scraped Data for Insights and Strategic Decisions

- Web Scraping Report 2025: Market Trends, Growth & Key Insights (https://promptcloud.com/blog/state-of-web-scraping-2025-report)

- The 2025 Web Scraping Industry Report - Business Leaders (https://zyte.com/learn/2025-industry-report-leaders)

- Clymin (https://clymin.ai/blog/10-essential-scraping-strategies-for-business-growth-in-2025-html)

- Web Scraping for Business Growth: Executive Guide 2025 (https://groupbwt.com/blog/web-scraping-for-business-growth)

- Ensure Compliance with Legal Guidelines in Web Scraping

- Web Scraping for AI Development: The CNIL builds on EDPB Guidance to open the door, cautiously | Clifford Chance (https://cliffordchance.com/insights/resources/blogs/talking-tech/en/articles/2025/06/web-scraping-for-ai-development--the-cnil-builds-on-edpb-guidanc.html)

- Is Web Scraping Legal? Laws, Compliance & Best Practices (https://infomineo.com/services/data-analytics/is-web-scraping-legal-laws-compliance-best-practices)

- Development of an AI system: CNIL issues guidelines regarding collection of data via web scraping (https://hoganlovells.com/en/publications/development-of-an-ai-system-cnil-issues-guidelines-regarding-collection-of-data-via-web-scraping)

- The state of web scraping in the EU | IAPP (https://iapp.org/news/a/the-state-of-web-scraping-in-the-eu)

- Is Web Scraping Legal? Navigating Terms of Service and Best Practices (https://ethicalwebdata.com/2025/01/27/is-web-scraping-legal-navigating-terms-of-service-and-best-practices)